update README.

Showing

.travis/unittest.sh

0 → 100755

deep_speech_2/compute_mean_std.py

100755 → 100644

文件模式从 100755 更改为 100644

deep_speech_2/data_utils/__init__.py

100755 → 100644

文件模式从 100755 更改为 100644

deep_speech_2/data_utils/audio.py

100755 → 100644

deep_speech_2/data_utils/augmentor/__init__.py

100755 → 100644

文件模式从 100755 更改为 100644

deep_speech_2/data_utils/augmentor/augmentation.py

100755 → 100644

文件模式从 100755 更改为 100644

deep_speech_2/data_utils/augmentor/base.py

100755 → 100644

文件模式从 100755 更改为 100644

deep_speech_2/data_utils/augmentor/volume_perturb.py

100755 → 100644

文件模式从 100755 更改为 100644

deep_speech_2/data_utils/featurizer/__init__.py

100755 → 100644

文件模式从 100755 更改为 100644

文件模式从 100755 更改为 100644

文件模式从 100755 更改为 100644

文件模式从 100755 更改为 100644

deep_speech_2/data_utils/normalizer.py

100755 → 100644

文件模式从 100755 更改为 100644

deep_speech_2/data_utils/speech.py

100755 → 100644

deep_speech_2/data_utils/utils.py

100755 → 100644

文件模式从 100755 更改为 100644

deep_speech_2/datasets/run_all.sh

100755 → 100644

文件模式从 100755 更改为 100644

deep_speech_2/decoder.py

100755 → 100644

deep_speech_2/error_rate.py

0 → 100644

deep_speech_2/utils.py

0 → 100644

image_classification/alexnet.py

0 → 100644

image_classification/googlenet.py

0 → 100644

image_classification/infer.py

0 → 100644

image_classification/resnet.py

0 → 100644

image_classification/train.py

100644 → 100755

59.2 KB

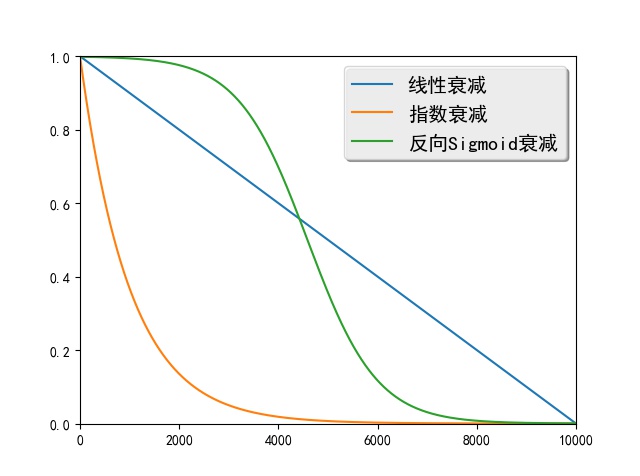

scheduled_sampling/img/decay.jpg

0 → 100644

44.6 KB