add yolov3 for dygraph (#4136)

* add yolov3 for dygraph

Showing

dygraph/yolov3/.gitignore

0 → 100644

dygraph/yolov3/README.md

0 → 100644

dygraph/yolov3/box_utils.py

0 → 100644

dygraph/yolov3/config.py

0 → 100644

dygraph/yolov3/data_utils.py

0 → 100644

dygraph/yolov3/dist_utils.py

0 → 100644

dygraph/yolov3/edict.py

0 → 100644

dygraph/yolov3/eval.py

0 → 100755

288.1 KB

459.3 KB

494.8 KB

380.6 KB

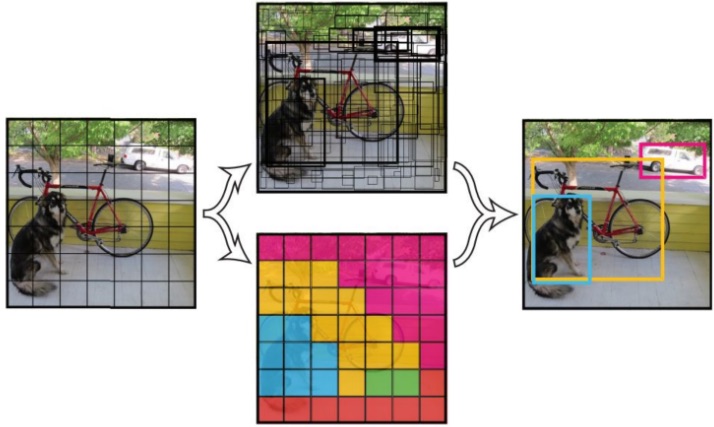

dygraph/yolov3/image/YOLOv3.jpg

0 → 100644

68.4 KB

288.4 KB

dygraph/yolov3/image/dog.jpg

0 → 100644

159.9 KB

dygraph/yolov3/image/eagle.jpg

0 → 100644

138.6 KB

dygraph/yolov3/image/giraffe.jpg

0 → 100644

374.0 KB

dygraph/yolov3/image/horses.jpg

0 → 100644

130.4 KB

dygraph/yolov3/image/kite.jpg

0 → 100644

1.4 MB

dygraph/yolov3/image/person.jpg

0 → 100644

111.2 KB

dygraph/yolov3/image/scream.jpg

0 → 100644

170.4 KB

16.8 KB

dygraph/yolov3/image_utils.py

0 → 100644

dygraph/yolov3/infer.py

0 → 100644

dygraph/yolov3/models/__init__.py

0 → 100644

dygraph/yolov3/models/darknet.py

0 → 100755

dygraph/yolov3/models/yolov3.py

0 → 100755

dygraph/yolov3/reader.py

0 → 100644

dygraph/yolov3/start_paral.sh

0 → 100644

dygraph/yolov3/train.py

0 → 100755

dygraph/yolov3/utility.py

0 → 100644