COCO dataset for SSD and update README.md (#844)

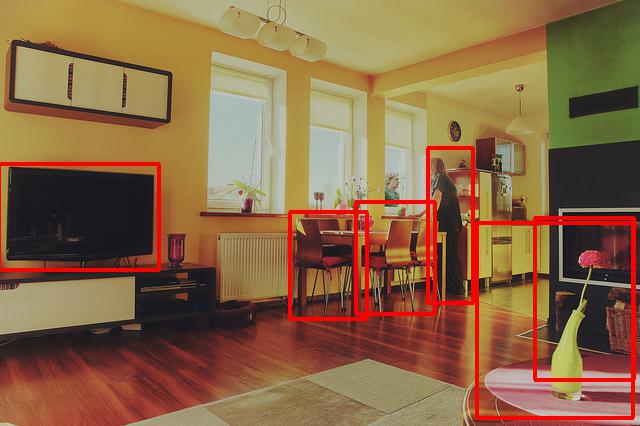

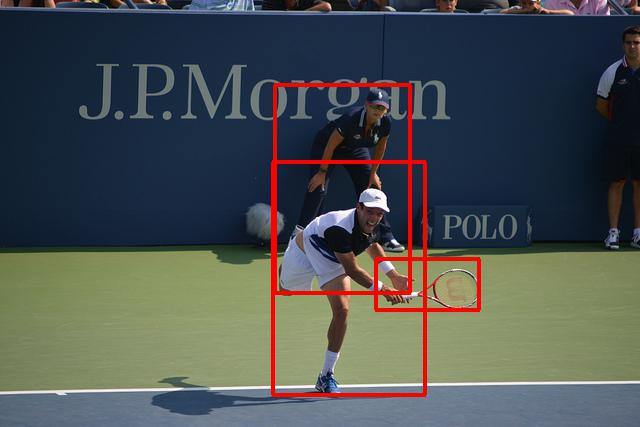

* ready to coco_reader
* complete coco_reader.py & coco_train.py
* complete coco reader
* rename file
* use argparse instead of explicit assignment
* fix
* fix reader bug for some gray image in coco data
* ready to train coco
* fix bug in test()
* fix bug in test()
* change coco dataset to coco2017 dataset
* change dataset from coco to coco2017
* change learning rate
* fix bug in gt label (category id 2 label)
* fix bug in background label
* save model when train finished
* use coco map
* adding coco year version args: 2014 or 2017
* add coco dataset download, and README.md
* fix
* fix image truncated IOError, map version error
* add test config
* add eval.py for evaluate trained model
* fix
* fix bug when cocoMAP
* update README.md
* fix cocoMAP bug
* find strange with test_program = fluid.default_main_program().clone(for_test=True)
* add inference and visualize, awa, README.md
* upload infer&visual example image
* refine image
* refine
* fix bug after merge
* follow yapf
* follow comments
* fix bug after separate eval and eval_cocoMAP
* follow yapf
* follow comments
* follow yapf
* follow yapf

fluid/object_detection/infer.py

0 → 100644