fix

drn/README.md

Deleted

100644 → 0

drn/drn.py

Deleted

100644 → 0

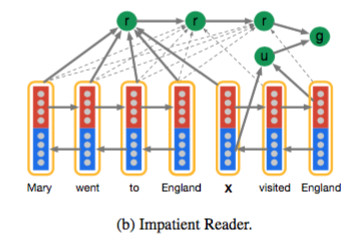

drn/img/pic1.png

Deleted

100644 → 0

31.6 KB

drn/img/pic2.png

Deleted

100644 → 0

38.3 KB

drn/img/pic3.png

Deleted

100644 → 0

37.0 KB

drn/infer.py

Deleted

100644 → 0

drn/reader.py

Deleted

100644 → 0

drn/train.py

Deleted

100644 → 0

drn/utils.py

Deleted

100644 → 0

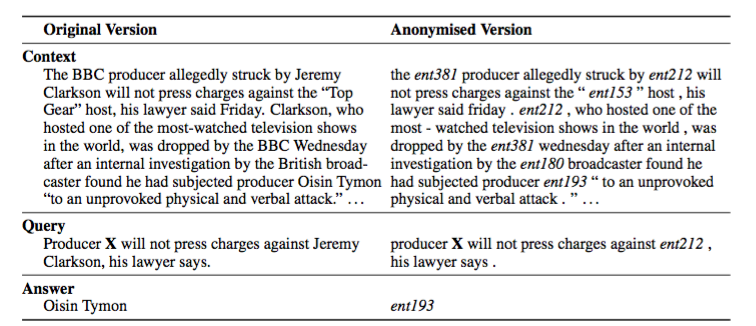

teach_read/README.md

Deleted

100644 → 0

44.6 KB

53.4 KB

201.7 KB

49.0 KB

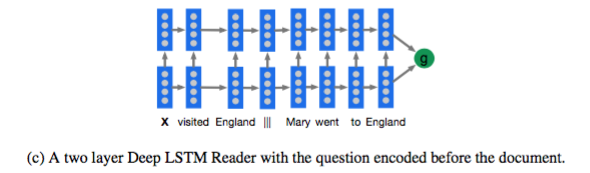

teach_read/infer.py

Deleted

100644 → 0

teach_read/train.py

Deleted

100644 → 0

teach_read/two_layer_lstm.py

Deleted

100644 → 0

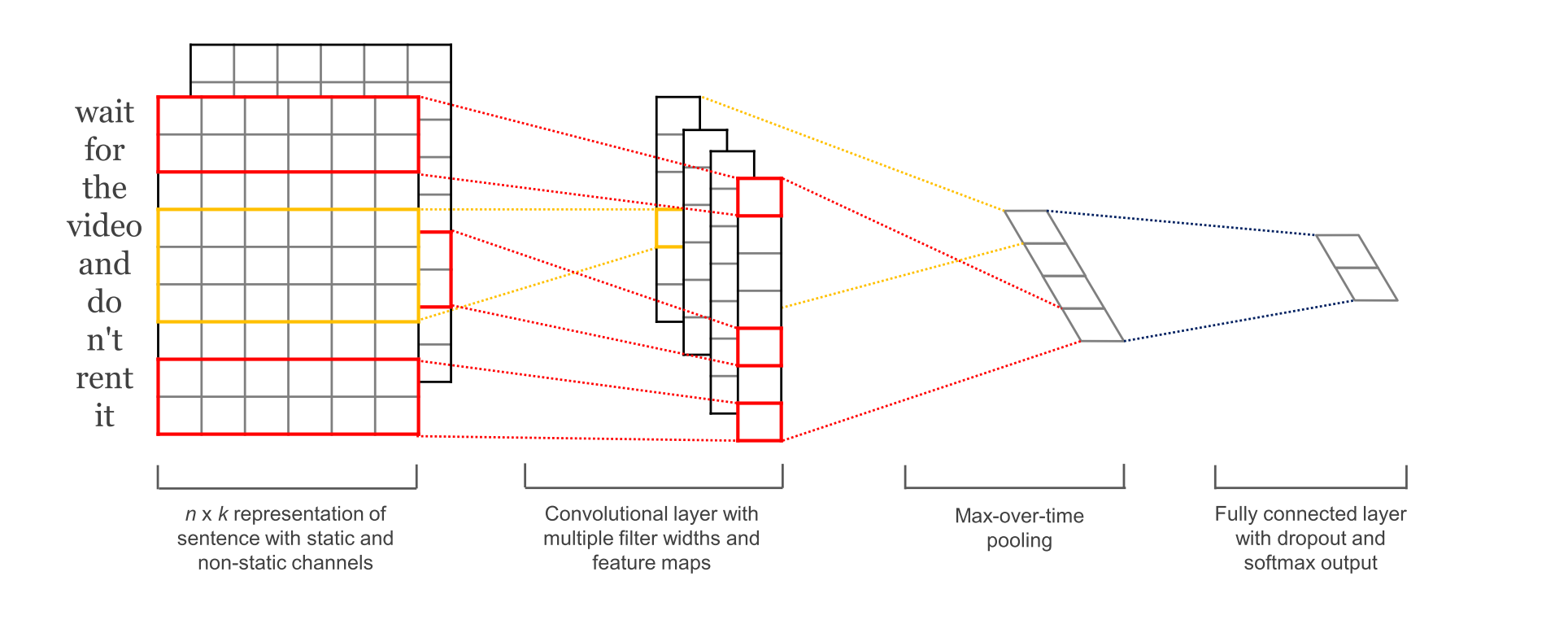

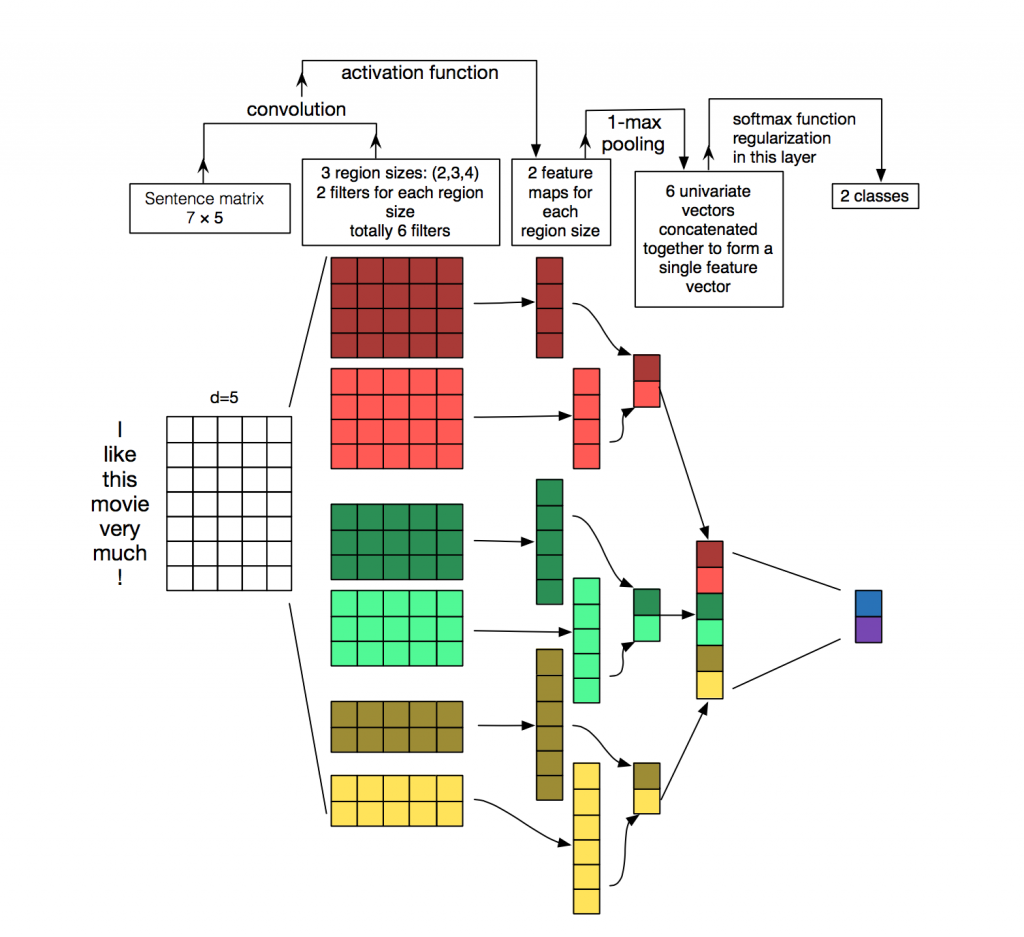

text_cnn_classify/README.md

Deleted

100644 → 0

text_cnn_classify/config.py

Deleted

100644 → 0

158.5 KB

200.3 KB

text_cnn_classify/infer.py

Deleted

100644 → 0

text_cnn_classify/train.py

Deleted

100644 → 0