add (#5597)

* add new models and helix files (#5569)

Co-authored-by: liushuangqiao <liushuangqiao@beibeiMacBook-Pro.local>

* Add PP-HumanSegV2 Chinese Docs (#5568)
* Create .gitkeep
* Create .gitkeep
* Create .gitkeep
* Create .gitkeep
* Create .gitkeep
* Create .gitkeep
* Create .gitkeep
* Create .gitkeep
* Create .gitkeep
* Create .gitkeep
* update PP-HelixFold description (#5571)
* add new models and helix files
* update PP-HelixFold description

Co-authored-by: liushuangqiao <liushuangqiao@beibeiMacBook-Pro.local>

* Update introduction_cn.ipynb
* Update introduction_en.ipynb
* Update introduction_en.ipynb
* Update introduction_cn.ipynb
* add PP-ASR (#5559)
* add PP-ASR
* add PP-ASR
* add PP-ASR
* PP-ASR (#5572)
* add PP-ASR
* add PP-ASR
* add PP-ASR
* add PP-ASR
* add PP-ASR
* Update info.yaml (#5575)
* add install of paddleclas (#5581)
* add gradio for PP-TTS (#5582)
* Delete modelcenter/VIMER-UMS directory
* [Seg] Add PP-LiteSeg (Doc & APP) and PP-HumanSeg (APP) (#5576)
* Add PP-LiteSeg to Model Center
* Add APP for PP-HumanSegV2 and PP-LiteSeg
* Add PP-Matting (#5563)
* update info.yam english (#5586)
* update inference of PP-OCRv2 to paddlecv (#5587)
* add install of paddleclas
* update inference with paddlecv
* Update PP-HumanSegV2 info.yaml
* Change sub_tag_en in PP-HumanSeg info.yaml
* Change sub_tag_en in PP-LiteSeg info.yml
* Main (#5588)
* add install of paddleclas
* update inference with paddlecv
* change cv to Computer Vision
* Add ERNIE UIE in modelcenter (#5574)
* add_uie
* fill_yaml
* modify_info
* add_model_size
* imgae_size
* image_size
* modify
* change_en
* change_en
* Update info.yaml

Co-authored-by: liuTINA0907 <65896652+liuTINA0907@users.noreply.github.com>

* add PP-TSM (#5565)
* add PP-TSM
* remove log file
* add description
* fix Example in info.yaml
* fix notebook
* update task to video
* add ERNIE-M to model center (#5570)
* add ERNIE-M to model center
* add benchmark_en info.yaml
* remove unused cell
* rm image from repo and fix ipynb
* add introduction_en and fix some annotations
* add task to info.yaml
* add model_size and the number of parameters while modify ipynb following comments
* rewrite pretrained model into chinese
* modify task info
* Add det models (#5578)
* add PP-YOLO/PP-YOLOE/PP-YOLOE+/PP-Picodet models
* add PP-YOLO/PP-YOLOE/PP-YOLOE+/PP_PicoDet
* modify info.yml of det models
* Update info.yaml
* Update info.yaml
* Update info.yaml
* Update info.yaml

Co-authored-by: liuTINA0907 <65896652+liuTINA0907@users.noreply.github.com>

* update PP-ShiTuV2 files (#5562)
* update PP-ShiTuV2 files
* update PP-ShiTu
* add PP-ShiTuV2 APP files
* refine code
* remove PP-ShiTuV1' APP
* correct in info.yaml
* update partial en docs
* update
* Update info.yaml
* Update info.yaml

Co-authored-by: liuTINA0907 <65896652+liuTINA0907@users.noreply.github.com>

* add PP-TInypose PP-Vehicle PP-HuamnV2 (#5573)
* add PP-TInyPose
* add PP-TInyPose info.yaml app.yaml
* * add PP-HumanV2
* add PP-Vehicle
* PP-TInyPose PP-Vehicle PP-HumanV2 add English version
* fixed info.yaml
* Update info.yaml
* fix PP-TInyPose/info.yaml

Co-authored-by: liuTINA0907 <65896652+liuTINA0907@users.noreply.github.com>

* Update info.yaml
* add ppmsvsr introduction_cn.ipynb (#5554)
* add ppmsvsr introduction_cn.ipynb
* add benchmark info and download
* add en doc
* fix info.yaml
* Update info.yaml

Co-authored-by: liuTINA0907 <65896652+liuTINA0907@users.noreply.github.com>

* Update info.yaml
* Update info.yml
* Update info.yaml
* Update info.yaml
* Delete modelcenter/ERNIE-Gram directory
* Delete modelcenter/ERNIE-Doc directory
* Delete modelcenter/ERNIE-ViL directory
* Delete modelcenter/PP-HumanSeg directory
* Add ERNIE-3.0 for model center (#5577)
* add ernie-3.0
* update ERNIE 3.0
* Delete modelcenter/PP-OCR directory
* Delete modelcenter/PP-Structure directory
* Update info.yaml
* Delete modelcenter/PP-TSMv2 directory
* Delete modelcenter/VIMER-StrucTexT V1 directory
* Update info.yaml
* update PP-ShiTu en docs (#5589)
* update PP-ShiTuV2 files
* update PP-ShiTu
* add PP-ShiTuV2 APP files
* refine code
* remove PP-ShiTuV1' APP
* correct in info.yaml
* update partial en docs
* update
* update PP-ShiTu en doc
* refine
* add pplcnet pplcnetv2 pphgnet (#5560)
* add pplcnet
* add pplcnetv2
* add pphgnet
* fix
* update
* support gradio for PPLCNet PPLCNetV2 PPHGNet
* fix
* enrich contents
* Update benchmark_cn.md
* Update download_cn.md
* Update info.yaml
* Update info.yaml
* add example
* Add modelcenter en
* Add PP-HGNet en
* Add PP-LCNet and PP-LCNetV2 en
* Add modelcenter English
* rename v2 to V2
* fix
* Update info.yaml

Co-authored-by: liuTINA0907 <65896652+liuTINA0907@users.noreply.github.com>
Co-authored-by: zhangyubo0722 <zhangyubo0722@163.com>

* Update info.yaml
* Update info.yaml
* Update info.yaml
* update download.md (#5591)
* bb
* 2022.11.14
* 2022.11.14_10:36
* 2022.11.14 16:55
* 2022.11.14 17:03
* 2022.11.14 21:40
* 2022.11.14 21:50
* update info.yaml
* 2022.11.17 16:44
* update info.yml of PP-OCRv2、PP-OCRv3、PP-StructureV2 (#5593)
* add install of paddleclas
* update inference with paddlecv
* change cv to Computer Vision
* update info.yml
* Update info.yaml
* Update info.yaml
* Update info.yaml
* Update info.yaml

Co-authored-by: liuTINA0907 <65896652+liuTINA0907@users.noreply.github.com>

* Update info.yaml
* add files
* remove ernie-3.0 & ... models
* add ernie & ... models

Co-authored-by: liushuangqiao <liushuangqiao@beibeiMacBook-Pro.local>
Co-authored-by: cc <52520497+juncaipeng@users.noreply.github.com>
Co-authored-by: Zth9730 <32243340+Zth9730@users.noreply.github.com>
Co-authored-by: Ming <hww_ym@aliyun.com>
Co-authored-by: zhoujun <572459439@qq.com>
Co-authored-by: TianYuan <white-sky@qq.com>
Co-authored-by: wuyefeilin <30919197+wuyefeilin@users.noreply.github.com>
Co-authored-by: lugimzzz <63761690+lugimzzz@users.noreply.github.com>
Co-authored-by: huangjun12 <2399845970@qq.com>
Co-authored-by: Yam <40912707+Yam0214@users.noreply.github.com>
Co-authored-by: wangxinxin08 <69842442+wangxinxin08@users.noreply.github.com>
Co-authored-by: HydrogenSulfate <490868991@qq.com>
Co-authored-by: LokeZhou <aishenghuoaiqq@163.com>
Co-authored-by: wangna11BD <79366697+wangna11BD@users.noreply.github.com>
Co-authored-by: Jiaqi Liu <709153940@qq.com>
Co-authored-by: Tingquan Gao <35441050@qq.com>
Co-authored-by: zhangyubo0722 <zhangyubo0722@163.com>
Co-authored-by: 小小的香辛料 <little_spice@163.com>

Showing

modelcenter/DeepCFD/info.yaml

0 → 100644

modelcenter/ERNIE-ViLG/info.yaml

0 → 100644

modelcenter/PINN-CFD/info.yaml

0 → 100644

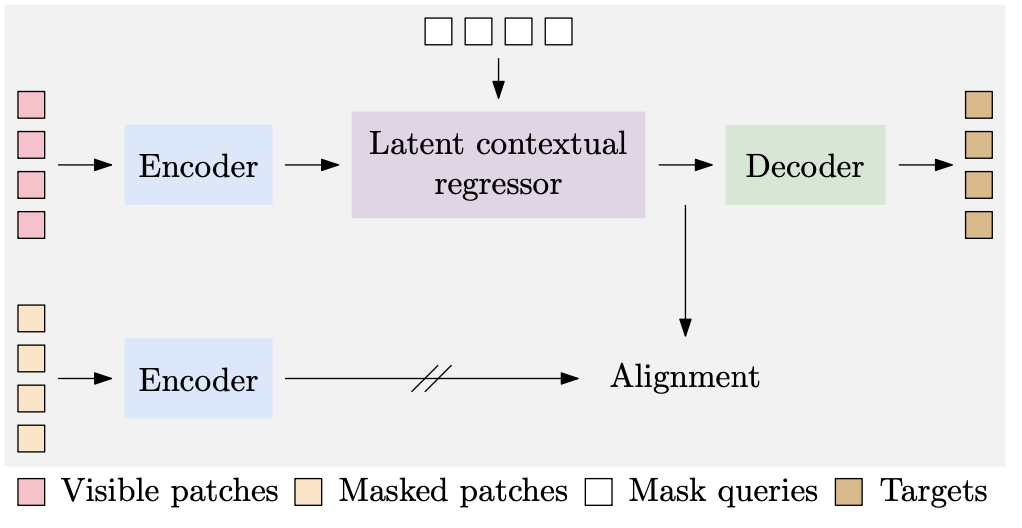

modelcenter/VINER-CAE/CAE2.png

0 → 100644

55.7 KB

modelcenter/VINER-CAE/info.yaml

0 → 100644