init commit for deepvoice3 (#3458)

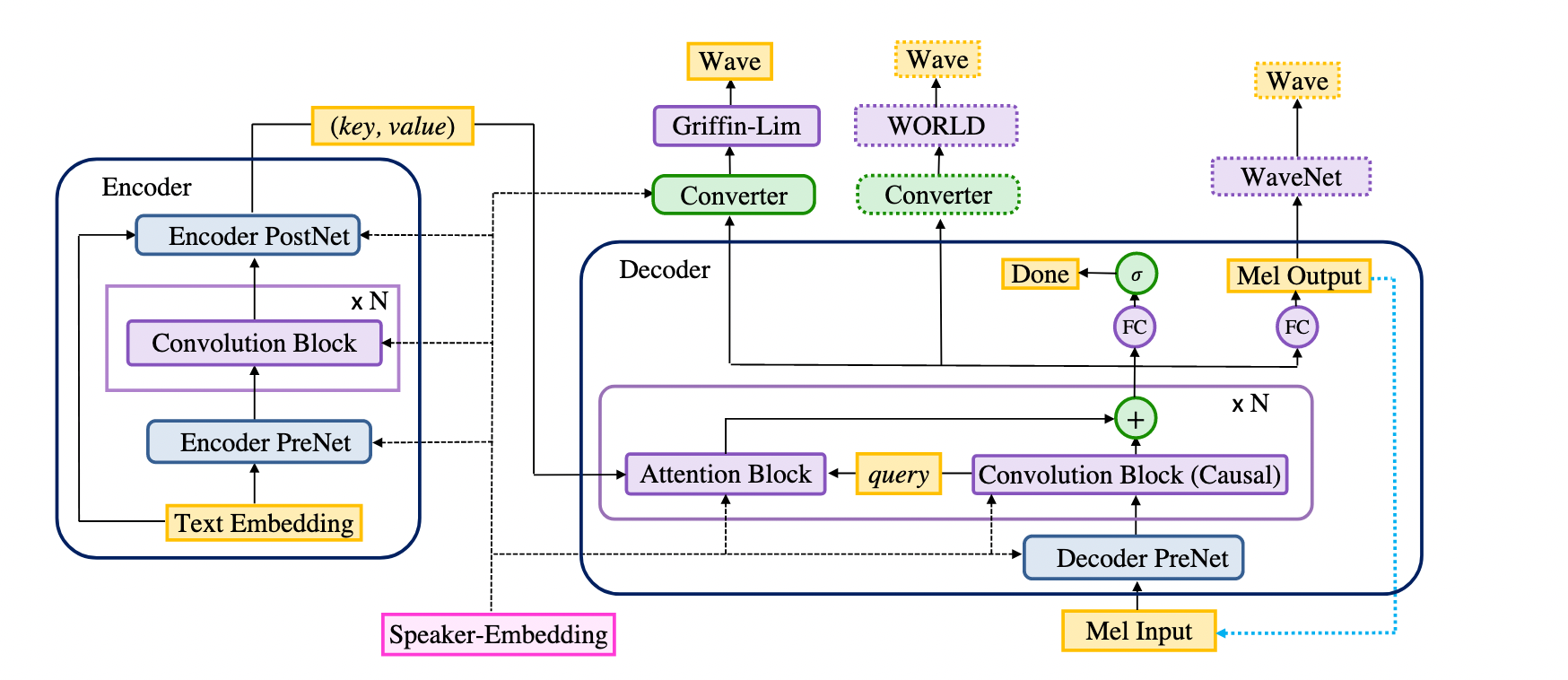

* ini commit for deepvoice, add tensorboard to requirements * fix urls for code we adapted from * fix makedirs for python2, fix README * fix open with encoding for python2 compatability * fix python2's str(), use encode for unicode, and str() for int * fix python2 encoding issue, add model architecture and project structure for README * add model structure, add explanation for hyperparameter priority order.

Showing

PaddleSpeech/DeepVoice3/LICENSE

0 → 100644

PaddleSpeech/DeepVoice3/README.md

0 → 100644

447.4 KB

PaddleSpeech/DeepVoice3/audio.py

0 → 100644

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

PaddleSpeech/DeepVoice3/train.py

0 → 100644

此差异已折叠。

此差异已折叠。