add infer and dataset

yolov3/colormap.py

0 → 100644

yolov3/dataset/voc/download.py

0 → 100644

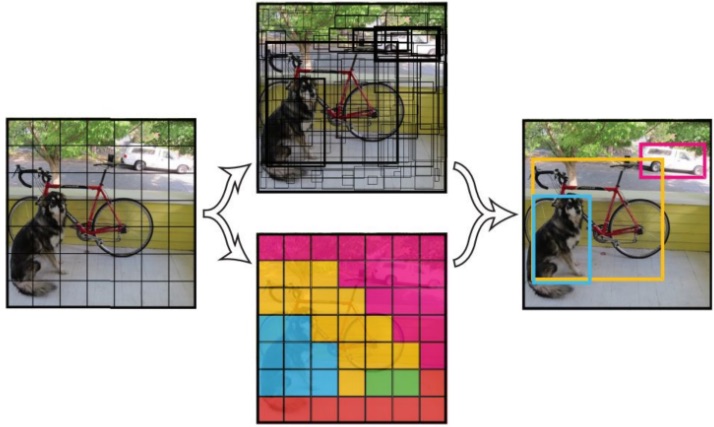

yolov3/image/YOLOv3.jpg

0 → 100644

68.4 KB

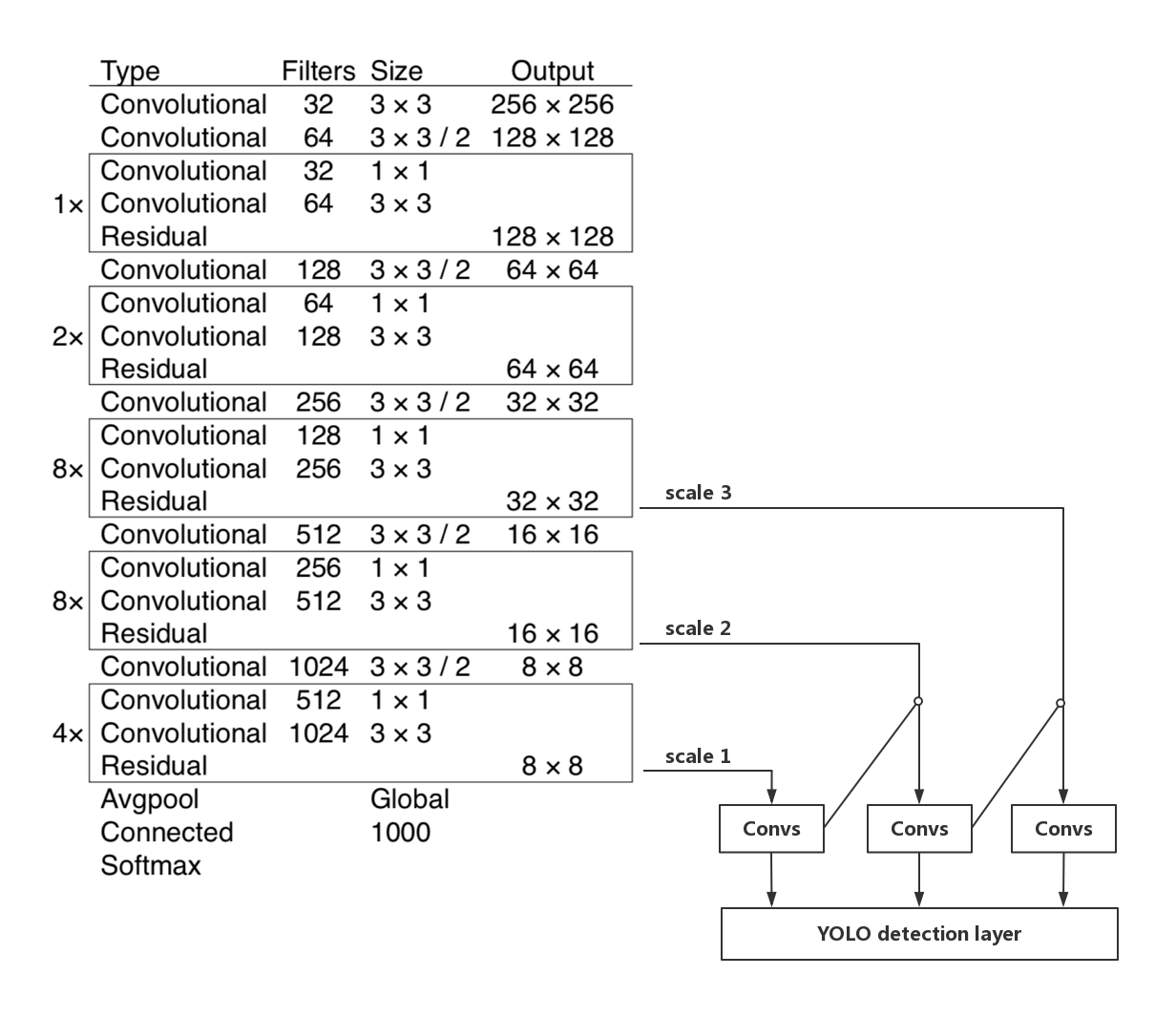

yolov3/image/YOLOv3_structure.jpg

0 → 100644

288.4 KB

yolov3/image/dog.jpg

0 → 100644

159.9 KB

yolov3/infer.py

0 → 100644

yolov3/visualizer.py

0 → 100644