Created by: barrierye

Performance test of the current version

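Conclusions (simple models: BOW/CNN; complex model: LSTM)

- With the gRPC interface for the prediction step, measured performance is poor:

  - (thread version, async interface) simple single model: 230% of the C++ core time

  - (thread version, async interface) simple dual model: 336% of the C++ core time

  - (thread version, async interface) complex dual model: 134% of the C++ core time

  - The process version (sync interface, because a future cannot be serialized) on the complex dual model takes about as long as the thread version (slightly longer, in fact)

  - The thread version's sync and async interfaces take essentially the same time on the complex dual model

- With bRPC for the prediction step, results match expectations:

  - (thread version) complex dual model: 63.3% of the C++ core time

So the gRPC interface is most likely not running in parallel because of the GIL.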
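One way to probe this kind of hypothesis is to time a pure-Python, CPU-bound function run twice in sequence and then in two threads. The sketch below does only that. `busy_work` and `compare` are hypothetical names that are not part of PyServing or gRPC, and the sketch shows how the GIL can serialize threads on a standard CPython build, not where the gRPC client actually spends its time.

```python
import threading
import time


def busy_work(n):
    """Pure-Python CPU-bound loop; it holds the GIL while it runs."""
    total = 0
    for i in range(n):
        total += i * i
    return total


def compare(n=2_000_000):
    """Return (sequential_seconds, threaded_seconds) for two busy_work calls."""
    start = time.perf_counter()
    busy_work(n)
    busy_work(n)
    sequential = time.perf_counter() - start

    threads = [threading.Thread(target=busy_work, args=(n,)) for _ in range(2)]
    start = time.perf_counter()
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    threaded = time.perf_counter() - start
    return sequential, threaded


if __name__ == "__main__":
    seq, thr = compare()
    # On a GIL-enabled CPython the two numbers are usually close,
    # i.e. the threads did not execute the Python code in parallel.
    print(f"sequential: {seq:.3f} s, two threads: {thr:.3f} s")
```

If the threaded time is close to the sequential time, the work was effectively serialized; a clearly smaller threaded time would point away from the GIL as the bottleneck.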

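Experiments

- Tested with two LSTM models (where prediction is relatively expensive): PyServing takes 34% longer than the original C++ core. The guess was that data processing runs in threads, so PyServing performs poorly when that part makes up a large share of the time; the multi-threaded implementation was therefore rewritten as multi-process.

- After switching from threads to processes, the dual-LSTM test takes about as long as the thread version (slightly longer, in fact). One reason is that putting data into an empty multiprocessing queue incurs a short delay (https://hg.python.org/cpython/rev/860fc6a2bd21 ), and the current channel implementation cannot block on get, so a timeout of 1e-3 s was added to the get call (https://github.com/PaddlePaddle/Serving/pull/667/files#diff-f6ad39aa57713e2da4fb9e81e6193357R377 ). The queue returns data before the timeout expires, but tests show that the larger the timeout, the longer the wait. The other reason is the extra inter-process communication.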

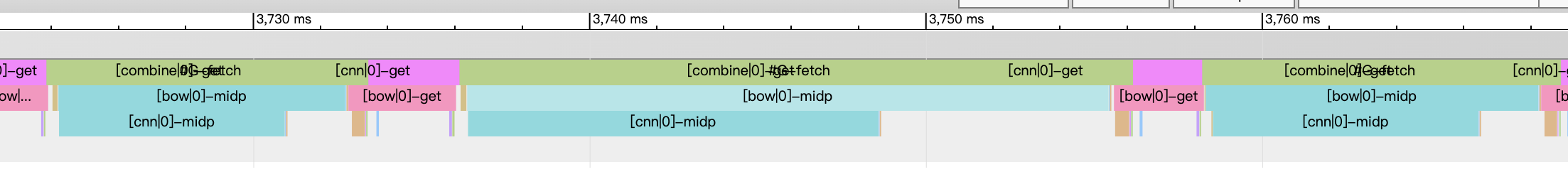

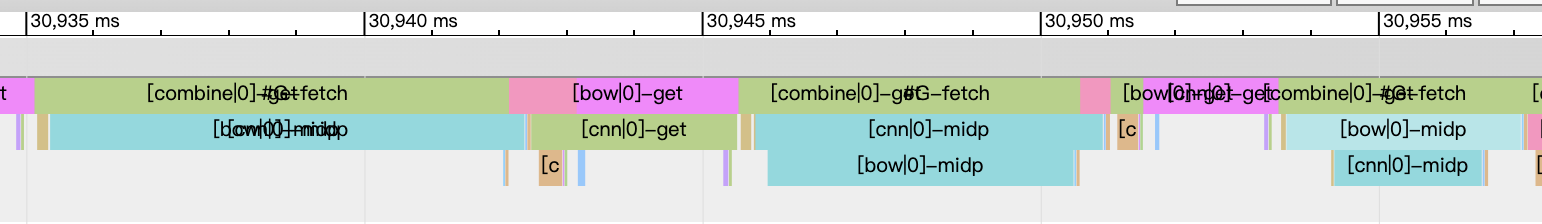
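The get-with-timeout pattern from the process-version experiment can be sketched as follows. This is a minimal illustration that assumes a plain `multiprocessing.Queue` stands in for the channel; `poll_get` and `max_tries` are hypothetical, and the real channel code is the one in the linked PR. A `multiprocessing.Queue` hands items to a background feeder thread, so right after `put` on an empty queue a non-blocking read can still find nothing, which is why the read is retried with a short timeout.

```python
import multiprocessing
import queue  # multiprocessing.Queue.get raises queue.Empty on timeout


def poll_get(q, timeout=1e-3, max_tries=1000):
    """Call q.get(timeout=...) until an item arrives.

    Returns (item, tries). Raises queue.Empty if nothing arrives
    within max_tries attempts.
    """
    for tries in range(1, max_tries + 1):
        try:
            return q.get(timeout=timeout), tries
        except queue.Empty:
            continue  # nothing yet, poll again
    raise queue.Empty


if __name__ == "__main__":
    q = multiprocessing.Queue()
    q.put({"words": [1, 2, 3]})
    item, tries = poll_get(q)
    print(item, "received after", tries, "attempt(s)")
```

Each failed attempt costs up to one full `timeout`, so the timeout value directly bounds how much latency a single miss can add.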

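- Re-analysis of where the time goes: profiling the thread version shows that preprocessing accounts for a very small share of the time, while a lot of time is spent obtaining the future.

  After dropping the future and switching to synchronous mode, the time is concentrated in the op's mid-process (the prediction step). This part does not appear to run concurrently, and the total time is about the same as in async mode. Thread version, two LSTMs (steps on the same line run sequentially):

  - #G: prepack 0.155 ms, push 0.058 ms
  - (long gap, about 0.3 ms)
  - l1: prep 0.158 ms, midp 4.618 ms, postp 0.022 ms, push 0.054 ms
  - l2: prep 0.175 ms, midp 3.898 ms, postp 0.022 ms, push 0.050 ms
  - combine: prep 7.806 ms, postp 0.026 ms, push 0.045 ms
  - (long gap, about 0.3 ms)
  - #G: postpack 0.120 ms
  - (long gap, about 6 ms)
  - (next iteration)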
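A quick arithmetic check on the timeline above: if the two mid-process steps overlapped fully, the downstream op would wait roughly for the longer one; if they ran one after the other, it would wait roughly for their sum. The sketch assumes that the `combine prep` time is mostly waiting for both LSTM outputs, which is an interpretation and not something the measurements state.

```python
# Stage timings in ms, copied from the thread-version timeline.
l1_midp = 4.618
l2_midp = 3.898
combine_prep = 7.806  # assumed to be dominated by waiting for l1 and l2

serial_estimate = l1_midp + l2_midp        # predictions run one after another
parallel_estimate = max(l1_midp, l2_midp)  # predictions overlap fully

estimates = {"serial": serial_estimate, "parallel": parallel_estimate}
# Pick the scenario whose estimate is nearest to the observed wait.
closer_to = min(estimates, key=lambda name: abs(estimates[name] - combine_prep))

if __name__ == "__main__":
    print(f"serial estimate:   {serial_estimate:.3f} ms")
    print(f"parallel estimate: {parallel_estimate:.3f} ms")
    print(f"observed combine prep is closer to the {closer_to} estimate")
```

Under that assumption the observed wait sits much nearer the serial estimate, which is consistent with the reading that the two predictions did not run concurrently.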

- Switching the prediction step to the bRPC interface brings the total time down to 63.3% of the C++ core. The profiles are as follows:

PyServing, single BOW model (thread version, grpc-async-impl)

    Timer unit: 1e-06 s

    Total time: 7.91556 s

    Line #      Hits         Time  Per Hit   % Time  Line Contents
    ==============================================================
        21                                           def func():
        22         1          8.0      8.0      0.0      client = PyClient()
        23         1       5452.0   5452.0      0.1      client.connect('localhost:8080')
        24
        25                                               #lp = LineProfiler()
        26                                               #lp_wrapper = lp(client.predict)
        27
        28         1          1.0      1.0      0.0      words = 'i am very sad | 0'
        29         1          5.0      5.0      0.0      imdb_dataset = IMDBDataset()
        30         1     106775.0 106775.0      1.3      imdb_dataset.load_resource('imdb.vocab')
        31
        32      2085       7741.0      3.7      0.1      for line in sys.stdin:
        33      2084     861203.0    413.2     10.9          word_ids, label = imdb_dataset.get_words_and_label(line)
        34                                                   #fetch_map = lp_wrapper(
        35      2084       1813.0      0.9      0.0          fetch_map = client.predict(
        36      2084    6932563.0   3326.6     87.6              feed={"words": word_ids}, fetch=["prediction"])

PyServing, BOW + CNN dual model (thread version, grpc-async-impl)

    Total time: 12.3527 s

    Line #      Hits         Time  Per Hit   % Time  Line Contents
    ==============================================================
        20                                           def func():
        21         1          9.0      9.0      0.0      client = PyClient()
        22         1       5916.0   5916.0      0.0      client.connect('localhost:8080')
        23
        24         1          1.0      1.0      0.0      words = 'i am very sad | 0'
        25         1          6.0      6.0      0.0      imdb_dataset = IMDBDataset()
        26         1     104786.0 104786.0      0.8      imdb_dataset.load_resource('imdb.vocab')
        27
        28      2085       8467.0      4.1      0.1      for line in sys.stdin:
        29      2084     877019.0    420.8      7.1          word_ids, label = imdb_dataset.get_words_and_label(line)
        30      2084   11356504.0   5449.4     91.9          fetch_map = client.predict(feed={"words": word_ids}, fetch=["prediction"])

PyServing, LSTM + LSTM dual model (thread version, grpc-async-impl)

    Total time: 21.9295 s

    Line #      Hits         Time  Per Hit   % Time  Line Contents
    ==============================================================
        20                                           def func():
        21         1         29.0     29.0      0.0      client = PyClient()
        22         1       6088.0   6088.0      0.0      client.connect('localhost:8080')
        23
        24         1          1.0      1.0      0.0      words = 'i am very sad | 0'
        25         1          7.0      7.0      0.0      imdb_dataset = IMDBDataset()
        26         1     228226.0 228226.0      1.0      imdb_dataset.load_resource('imdb.vocab')
        27
        28      2085      11646.0      5.6      0.1      for line in sys.stdin:
        29      2084     965434.0    463.3      4.4          word_ids, label = imdb_dataset.get_words_and_label(line)
        30      2084   20718107.0   9941.5     94.5          fetch_map = client.predict(feed={"words": word_ids}, fetch=["prediction"])

PyServing, LSTM + LSTM dual model (process version, grpc-async-impl)

    Total time: 24.3444 s

    Line #      Hits         Time  Per Hit   % Time  Line Contents
    ==============================================================
        20                                           def func():
        21         1          7.0      7.0      0.0      client = PyClient()
        22         1       5404.0   5404.0      0.0      client.connect('localhost:8080')
        23
        24         1          1.0      1.0      0.0      words = 'i am very sad | 0'
        25         1          4.0      4.0      0.0      imdb_dataset = IMDBDataset()
        26         1     103255.0 103255.0      0.4      imdb_dataset.load_resource('imdb.vocab')
        27
        28      2085       9329.0      4.5      0.0      for line in sys.stdin:
        29      2084     895440.0    429.7      3.7          word_ids, label = imdb_dataset.get_words_and_label(line)
        30      2084   23329327.0  11194.5     95.8          fetch_map = client.predict(feed={"words": word_ids}, fetch=["prediction"])
        31         1       1675.0   1675.0      0.0      print(fetch_map)

PyServing, LSTM + LSTM dual model (thread version, C++ core)

    Total time: 10.3327 s

    Line #      Hits         Time  Per Hit   % Time  Line Contents
    ==============================================================
        20                                           def func():
        21         1          6.0      6.0      0.0      client = PyClient()
        22         1       5441.0   5441.0      0.1      client.connect('localhost:8080')
        23
        24         1          1.0      1.0      0.0      words = 'i am very sad | 0'
        25         1          4.0      4.0      0.0      imdb_dataset = IMDBDataset()
        26         1     104695.0 104695.0      1.0      imdb_dataset.load_resource('imdb.vocab')
        27
        28      2085       7459.0      3.6      0.1      for line in sys.stdin:
        29      2084     872195.0    418.5      8.4          word_ids, label = imdb_dataset.get_words_and_label(line)
        30      2084    9339443.0   4481.5     90.4          fetch_map = client.predict(feed={"words": word_ids}, fetch=["prediction"])
        31      2084       2712.0      1.3      0.0          if fetch_map['ecode'] != 0:
        32                                                       print(fetch_map['error_info'])
        33         1        772.0    772.0      0.0      print(fetch_map)

C++ core, single BOW model

    Total time: 3.3367 s

    Line #      Hits         Time  Per Hit   % Time  Line Contents
    ==============================================================
        20                                           def func():
        21         1          3.0      3.0      0.0      words = 'i am very sad | 0'
        22         1          5.0      5.0      0.0      imdb_dataset = IMDBDataset()
        23         1     101483.0 101483.0      3.0      imdb_dataset.load_resource('imdb.vocab')
        24
        25         1      21312.0  21312.0      0.6      client = Client()
        26         1       2796.0   2796.0      0.1      client.load_client_config('imdb_bow_client_conf/serving_client_conf.prototxt')
        27         1       4403.0   4403.0      0.1      client.connect(["127.0.0.1:8888"])
        28
        29                                               #lp = LineProfiler()
        30                                               #lp_wrapper = lp(client.predict)
        31
        32      2085       7266.0      3.5      0.2      for line in sys.stdin:
        33      2084     840609.0    403.4     25.2          word_ids, label = imdb_dataset.get_words_and_label(line)
        34                                                   #fetch_map = lp_wrapper(
        35      2084       1757.0      0.8      0.1          fetch_map = client.predict(
        36      2084    2357063.0   1131.0     70.6              feed={"words": word_ids}, fetch=["prediction"])

C++ core, BOW + CNN dual model

    Total time: 3.7254 s

    Line #      Hits         Time  Per Hit   % Time  Line Contents
    ==============================================================
        21                                           def func():
        22         1      18822.0  18822.0      0.5      client = Client()
        23                                               # If you have more than one model, make sure that the input
        24                                               # and output of more than one model are the same.
        25         1       2541.0   2541.0      0.1      client.load_client_config('imdb_bow_client_conf/serving_client_conf.prototxt')
        26         1       4270.0   4270.0      0.1      client.connect(["127.0.0.1:8889"])
        27
        28                                               # you can define any english sentence or dataset here
        29                                               # This example reuses imdb reader in training, you
        30                                               # can define your own data preprocessing easily.
        31         1         11.0     11.0      0.0      imdb_dataset = IMDBDataset()
        32         1     107277.0 107277.0      2.9      imdb_dataset.load_resource('imdb.vocab')
        33
        34      2085       7840.0      3.8      0.2      for line in sys.stdin:
        35      2084     858674.0    412.0     23.0          word_ids, label = imdb_dataset.get_words_and_label(line)
        36      2084       3835.0      1.8      0.1          feed = {"words": word_ids}
        37      2084       1556.0      0.7      0.0          fetch = ["prediction"]
        38      2084    2717955.0   1304.2     73.0          fetch_maps = client.predict(feed=feed, fetch=fetch)

C++ core, LSTM + LSTM dual model

    Total time: 16.3102 s

    Line #      Hits         Time  Per Hit   % Time  Line Contents
    ==============================================================
        21                                           def func():
        22         1     453148.0 453148.0      2.8      client = Client()
        23                                               # If you have more than one model, make sure that the input
        24                                               # and output of more than one model are the same.
        25         1       5346.0   5346.0      0.0      client.load_client_config('imdb_bow_client_conf/serving_client_conf.prototxt')
        26         1       4825.0   4825.0      0.0      client.connect(["127.0.0.1:8889"])
        27
        28                                               # you can define any english sentence or dataset here
        29                                               # This example reuses imdb reader in training, you
        30                                               # can define your own data preprocessing easily.
        31         1         13.0     13.0      0.0      imdb_dataset = IMDBDataset()
        32         1     162498.0 162498.0      1.0      imdb_dataset.load_resource('imdb.vocab')
        33
        34      2085      12260.0      5.9      0.1      for line in sys.stdin:
        35      2084     942797.0    452.4      5.8          word_ids, label = imdb_dataset.get_words_and_label(line)
        36      2084       6451.0      3.1      0.0          feed = {"words": word_ids}
        37      2084       1705.0      0.8      0.0          fetch = ["prediction"]
        38      2084   14718960.0   7062.8     90.2          fetch_maps = client.predict(feed=feed, fetch=fetch)
        39         1       2219.0   2219.0      0.0      print(fetch_maps)
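The headline percentages can be re-derived from the `Total time` lines of the profiles above. The sketch below does so; the dictionary keys are hypothetical labels, and the 10.3327 s run (titled "thread version, C++ core") is taken to be the bRPC run because it reproduces the 63.3% figure. The LSTM ratios come out at about 134% and about 63%, matching the conclusions. The BOW and BOW + CNN ratios come out at about 237% and about 332%, close to but not identical with the quoted 230% and 336%, which may have been taken from a different run.

```python
# Total time in seconds, copied from the line_profiler outputs.
TOTAL_TIME = {
    ("pyserving-grpc-thread", "bow"): 7.91556,
    ("pyserving-grpc-thread", "bow+cnn"): 12.3527,
    ("pyserving-grpc-thread", "lstm+lstm"): 21.9295,
    ("pyserving-grpc-process", "lstm+lstm"): 24.3444,
    ("pyserving-brpc-thread", "lstm+lstm"): 10.3327,  # assumed to be the bRPC run
    ("cpp-core", "bow"): 3.3367,
    ("cpp-core", "bow+cnn"): 3.7254,
    ("cpp-core", "lstm+lstm"): 16.3102,
}


def ratio_to_cpp_core(variant, model):
    """Total time of a run as a percentage of the C++ core run on the same model."""
    return 100.0 * TOTAL_TIME[(variant, model)] / TOTAL_TIME[("cpp-core", model)]


if __name__ == "__main__":
    for variant, model in TOTAL_TIME:
        if variant != "cpp-core":
            pct = ratio_to_cpp_core(variant, model)
            print(f"{variant:24s} {model:10s} {pct:6.1f}% of C++ core")
```

These ratios use whole-script time, which includes vocabulary loading and input parsing; comparing only the `client.predict` lines would give larger gaps for the simple models.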