rebase cube_gpu_ci with develop test=serving

Showing

core/predictor/tools/quant.cpp

0 → 100644

core/predictor/tools/quant.h

0 → 100644

23.7 KB

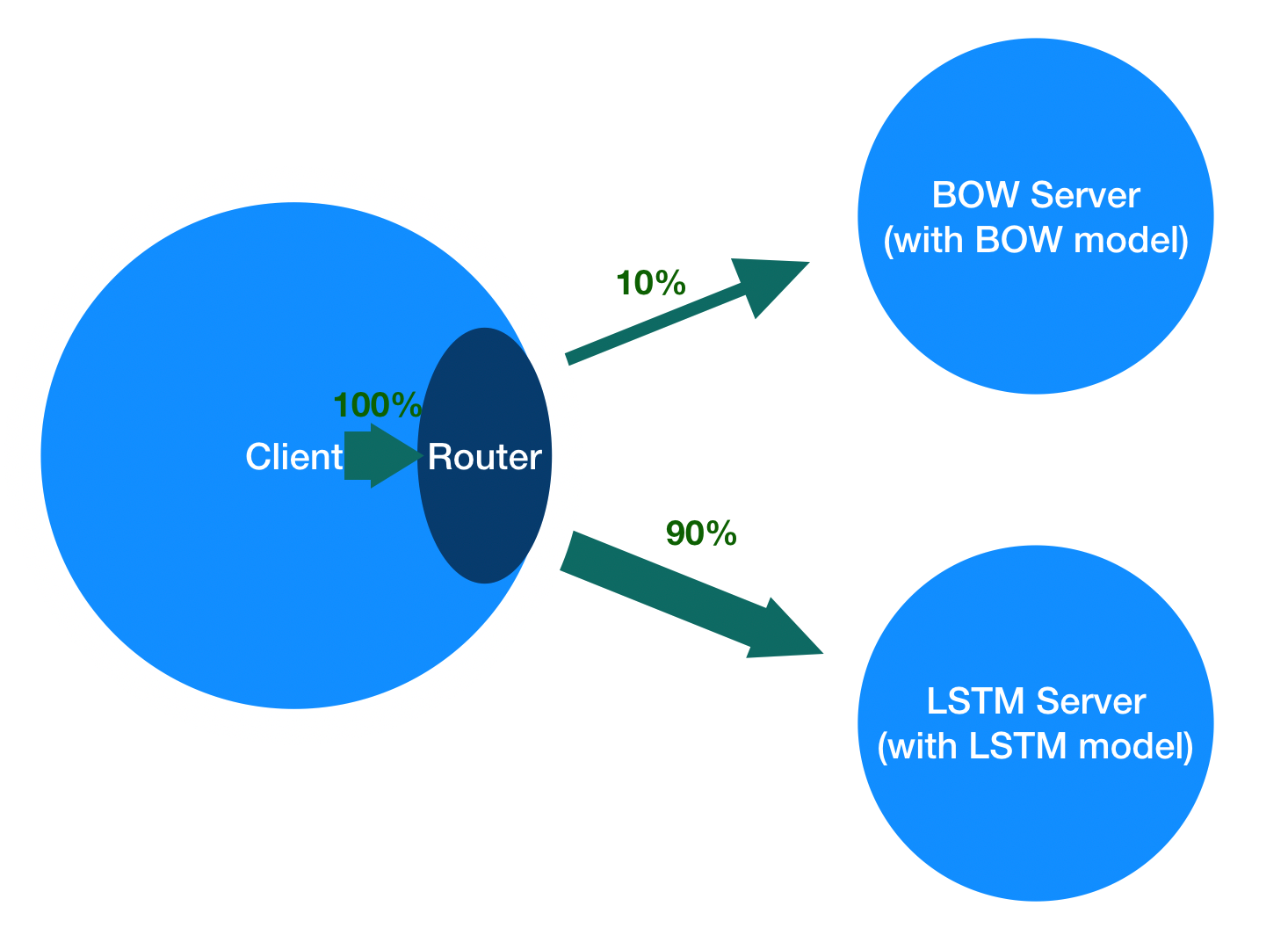

doc/ABTEST_IN_PADDLE_SERVING.md

0 → 100644

doc/BERT_10_MINS.md

0 → 100644

doc/CUBE_QUANT.md

0 → 100644

doc/CUBE_QUANT_CN.md

0 → 100644

doc/abtest.png

0 → 100644

291.5 KB

| W: | H:

| W: | H:

doc/timeline-example.png

0 → 100644

300.6 KB

python/setup.py.app.in

0 → 100644