Merge pull request #319 from wangjiawei04/develop

Criteo CTR with Cube Doc

Showing

doc/CUBE_LOCAL.md

0 → 100644

doc/CUBE_LOCAL_CN.md

0 → 100644

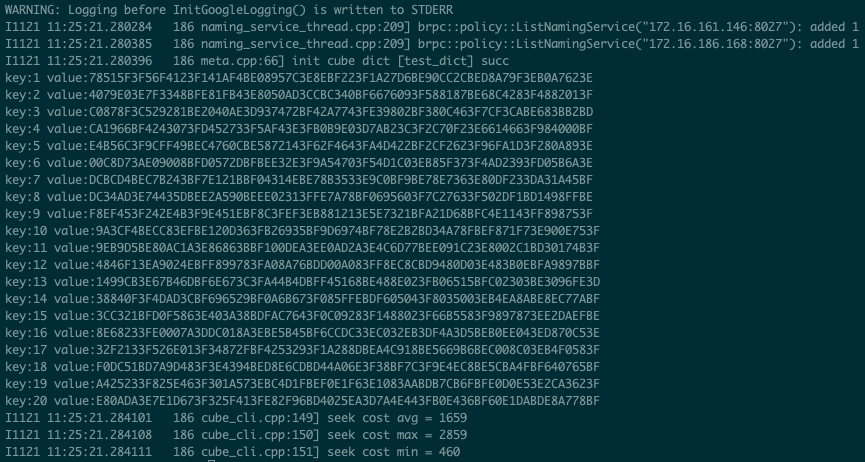

doc/cube-cli.png

0 → 100644

151.1 KB

Criteo CTR with Cube Doc

151.1 KB