Merge pull request #769 from barrierye/pipeline-auto-batch

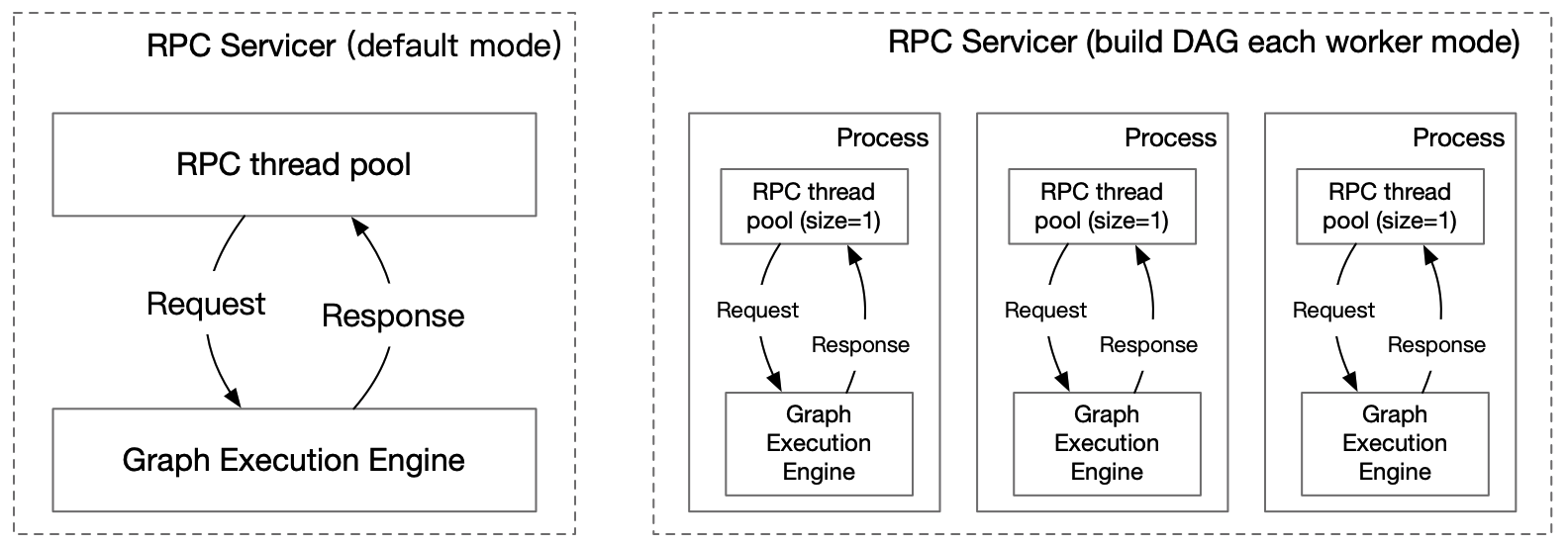

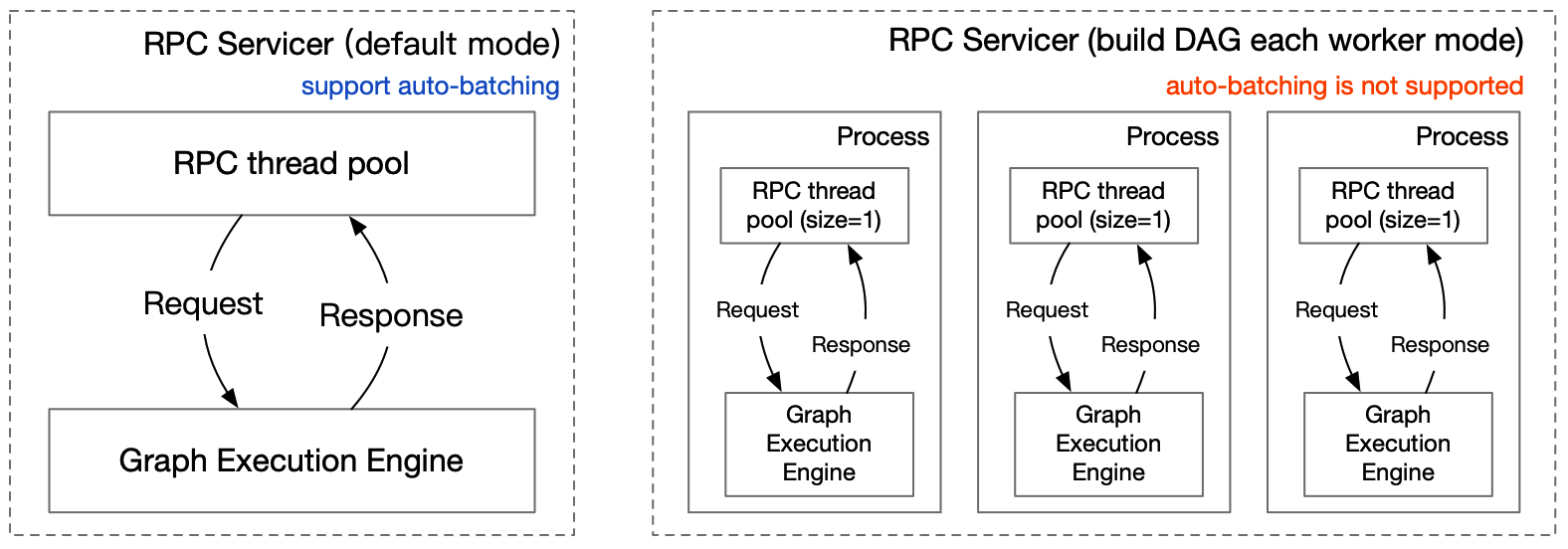

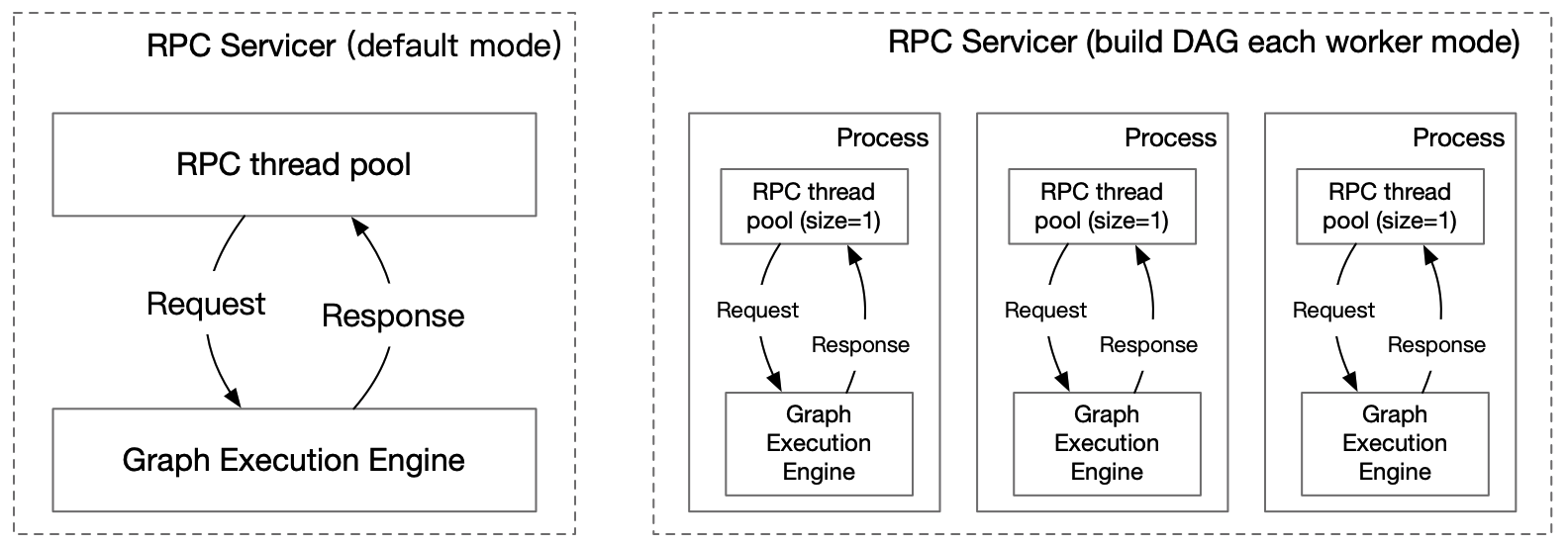

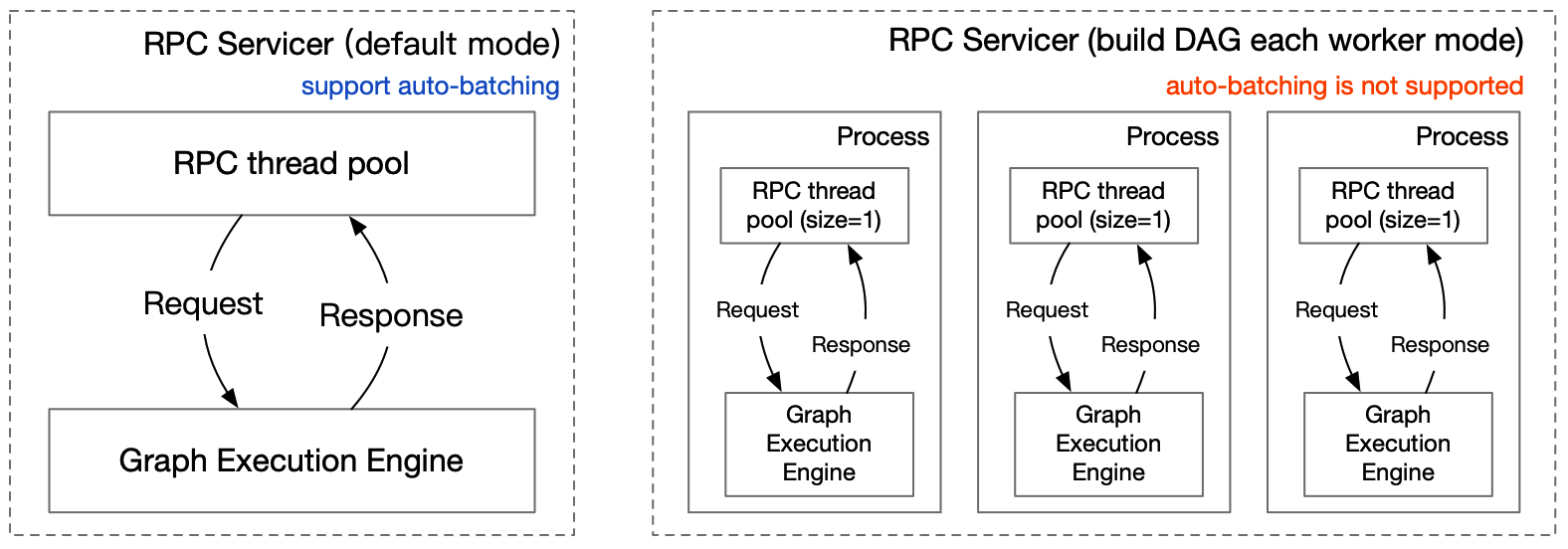

Pipeline Auto-batching feature & Update Log

File deleted

python/pipeline/logger.py

New file mode: 0 → 100644

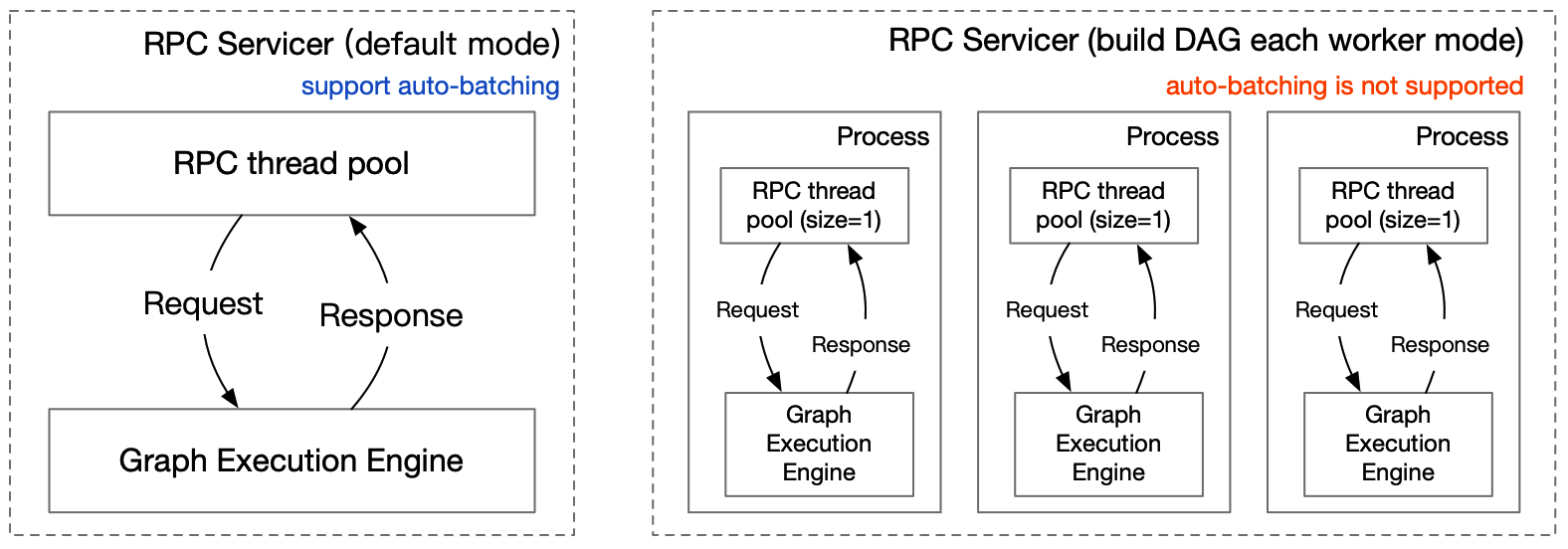

96.0 KB

107.7 KB