merge paddle_serving_client/__init__.py file

Showing

23.7 KB

doc/BERT_10_MINS.md

0 → 100644

doc/CUBE_LOCAL.md

0 → 100644

doc/CUBE_LOCAL_CN.md

0 → 100644

doc/INSTALL.md

已删除

100644 → 0

doc/TRAIN_TO_SERVICE.md

0 → 100644

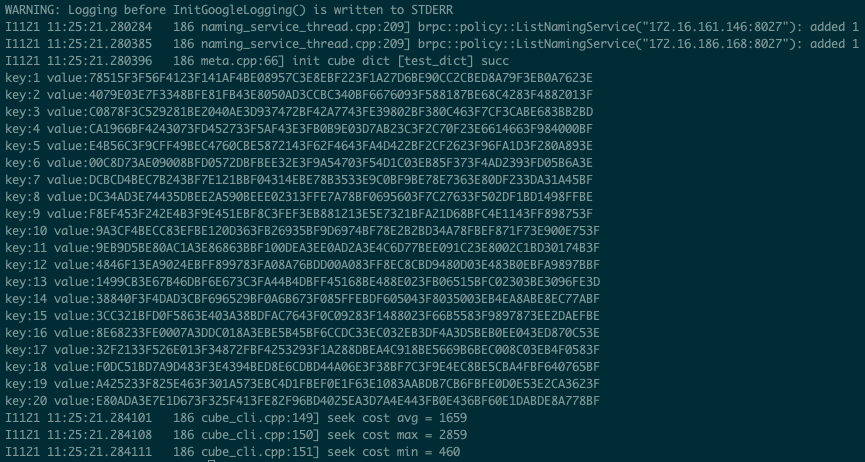

doc/cube-cli.png

0 → 100644

151.1 KB

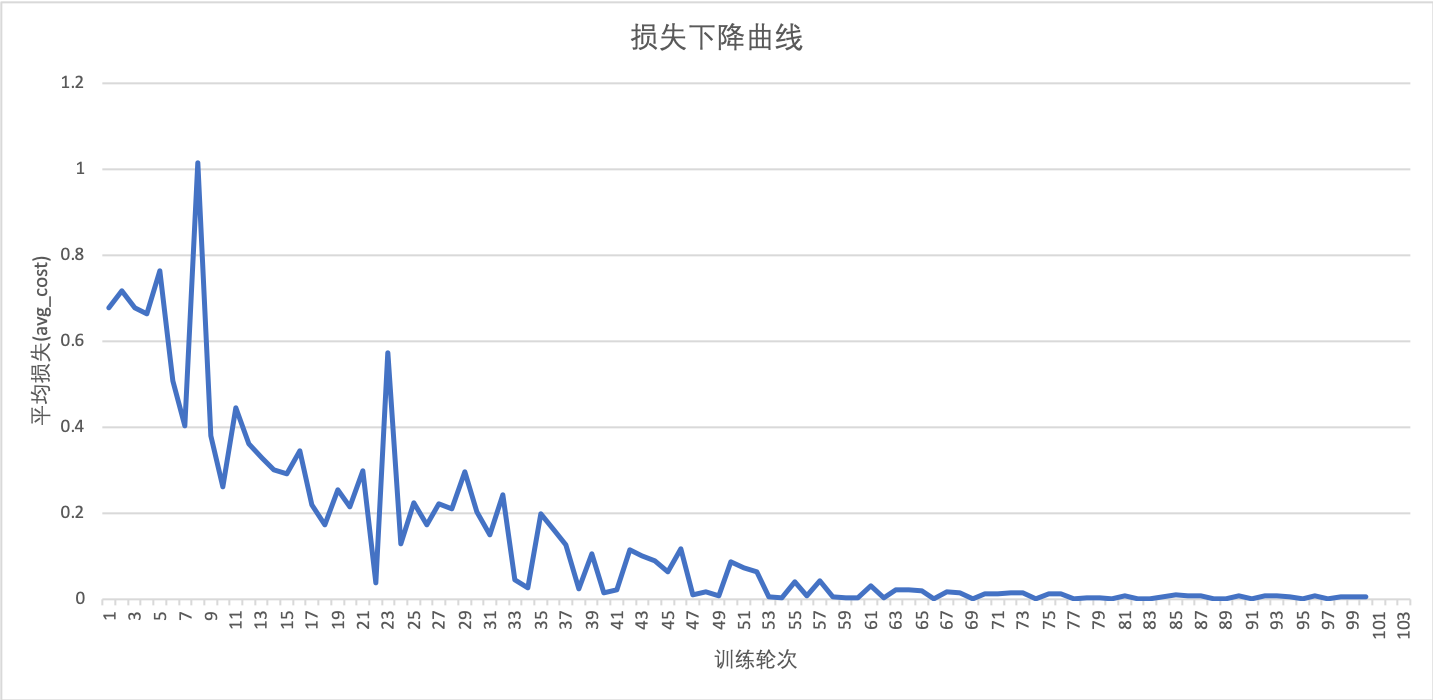

doc/imdb_loss.png

0 → 100644

81.2 KB

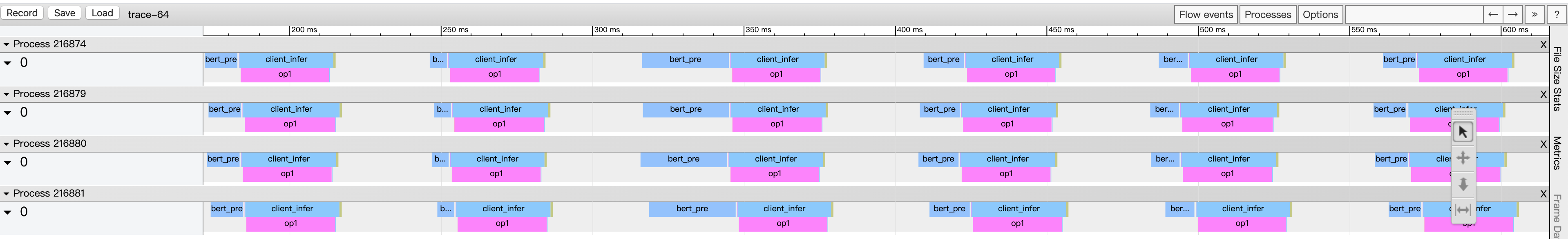

doc/timeline-example.png

0 → 100644

300.6 KB

python/setup.py.app.in

0 → 100644