merge with master 0624

Change-Id: I09b028bf244e63654da0cab154766856f94742d1

Changed files:

doc/GPU_BENCHMARKING.md (new file, mode 100755)

此差异已折叠。

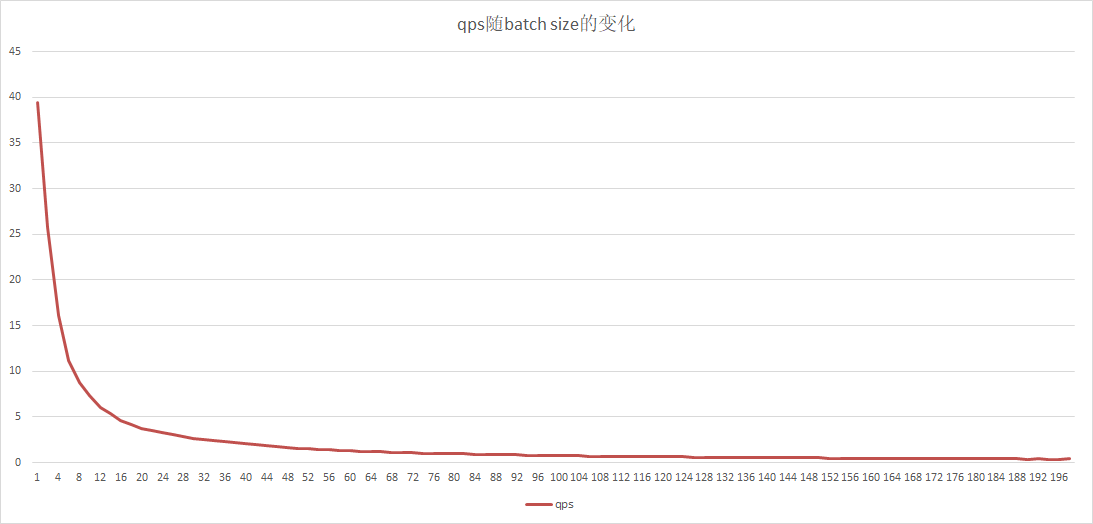

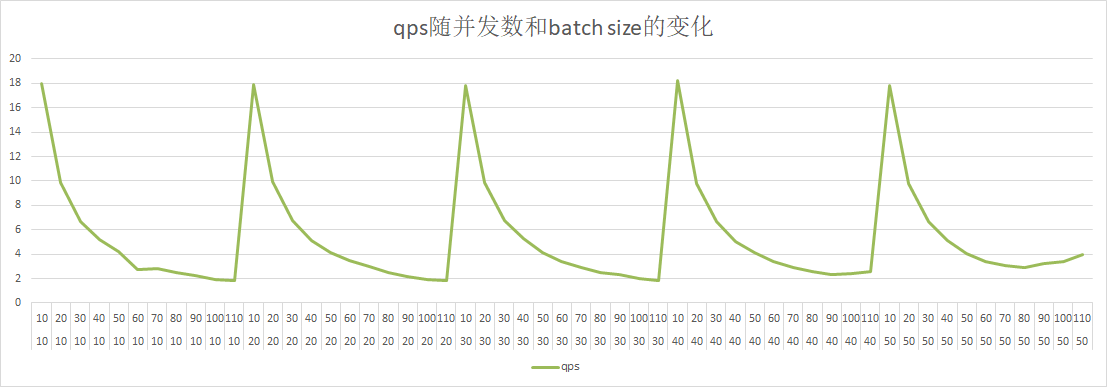

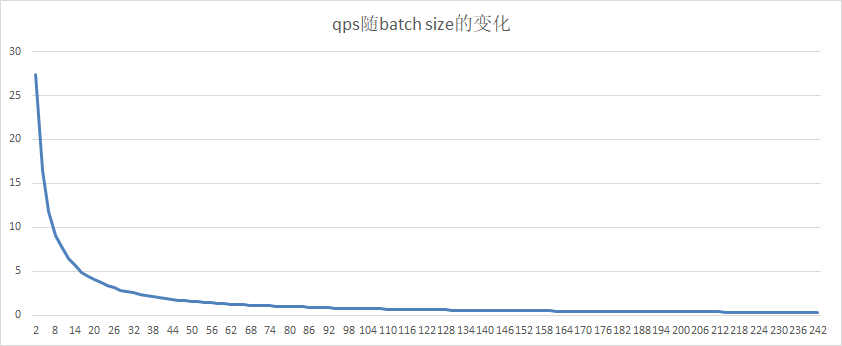

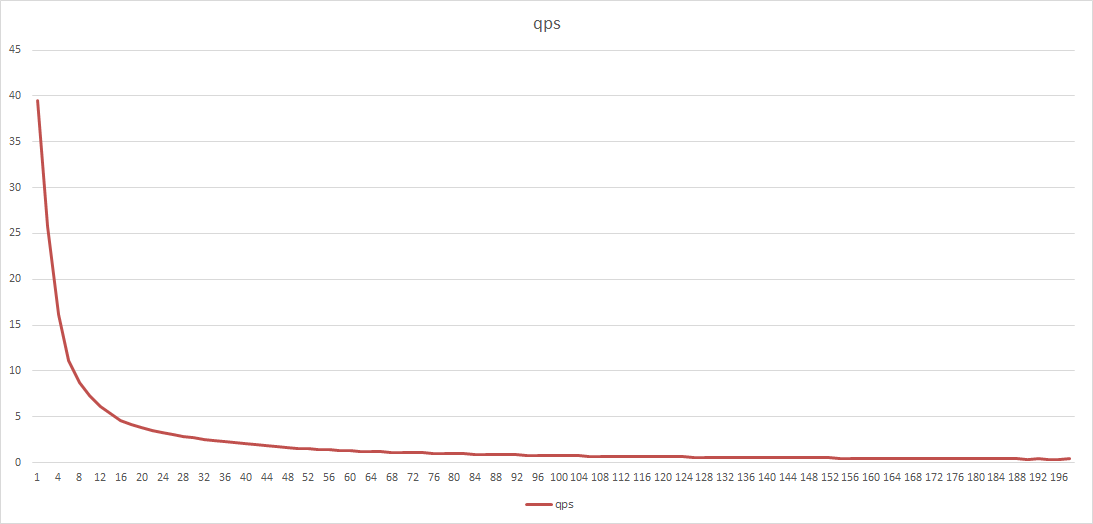

doc/gpu-local-qps-batchsize.png (new file, mode 100755, 25.6 KB)

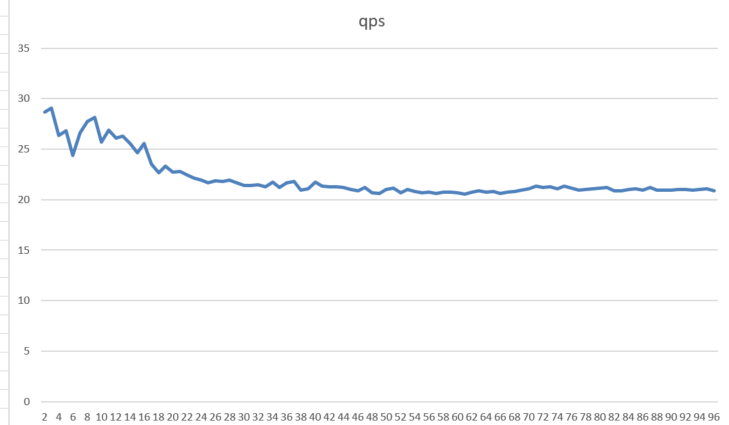

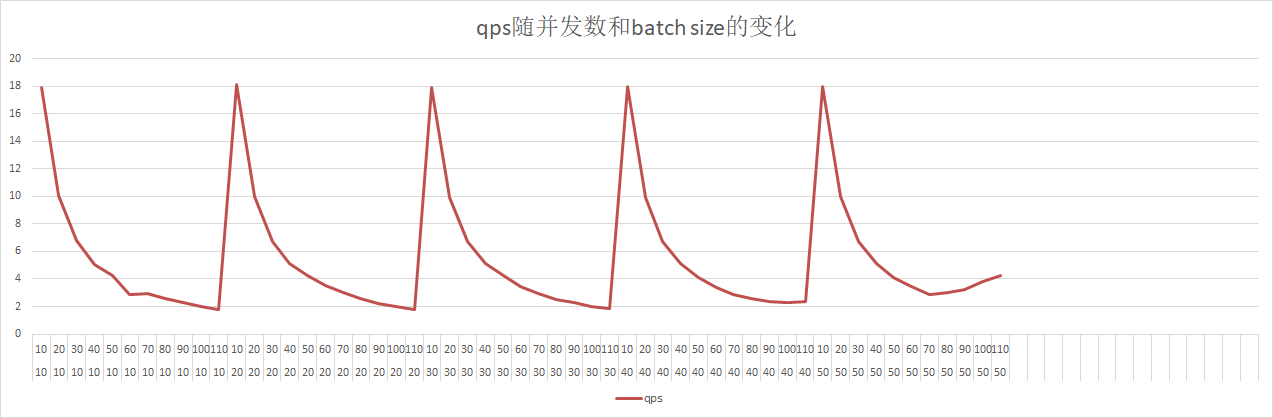

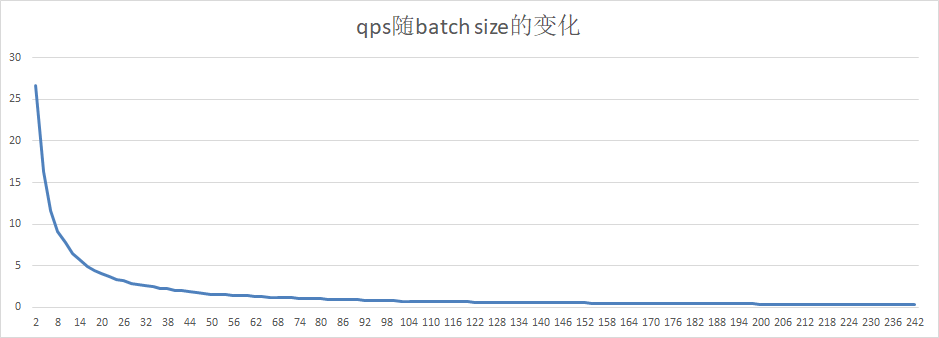

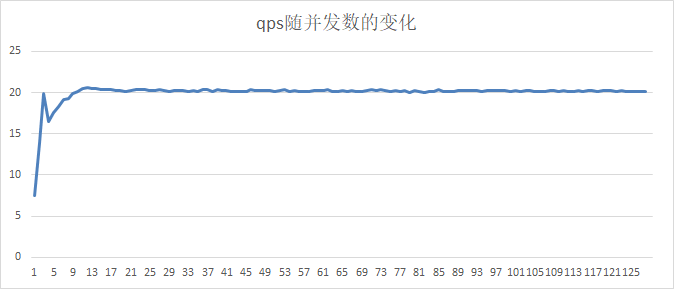

doc/gpu-local-qps-concurrency.png (new file, mode 100755, 30.4 KB)

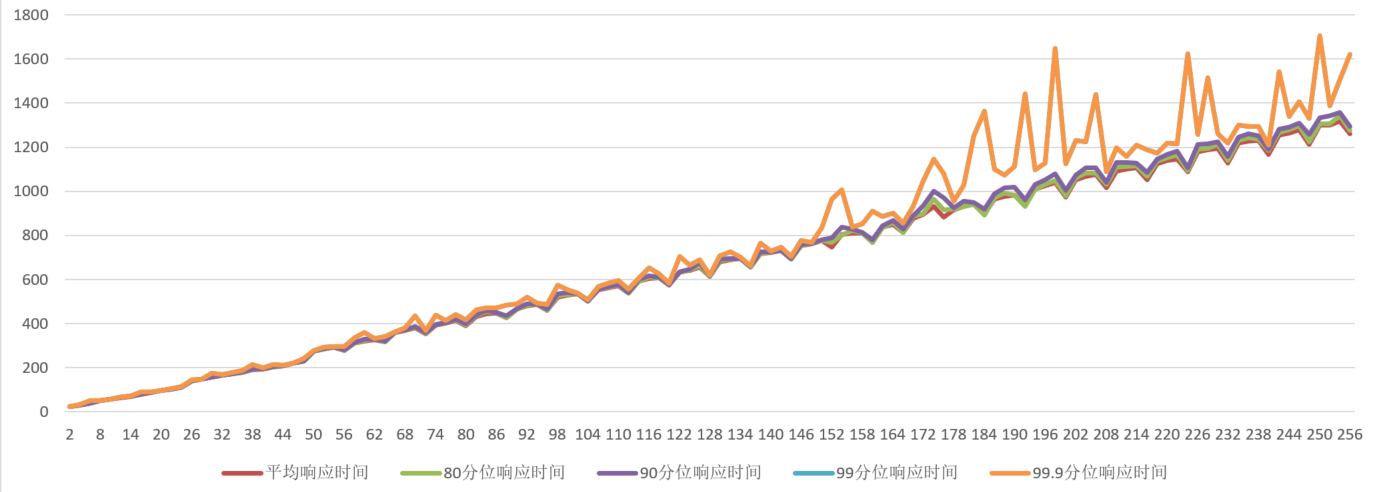

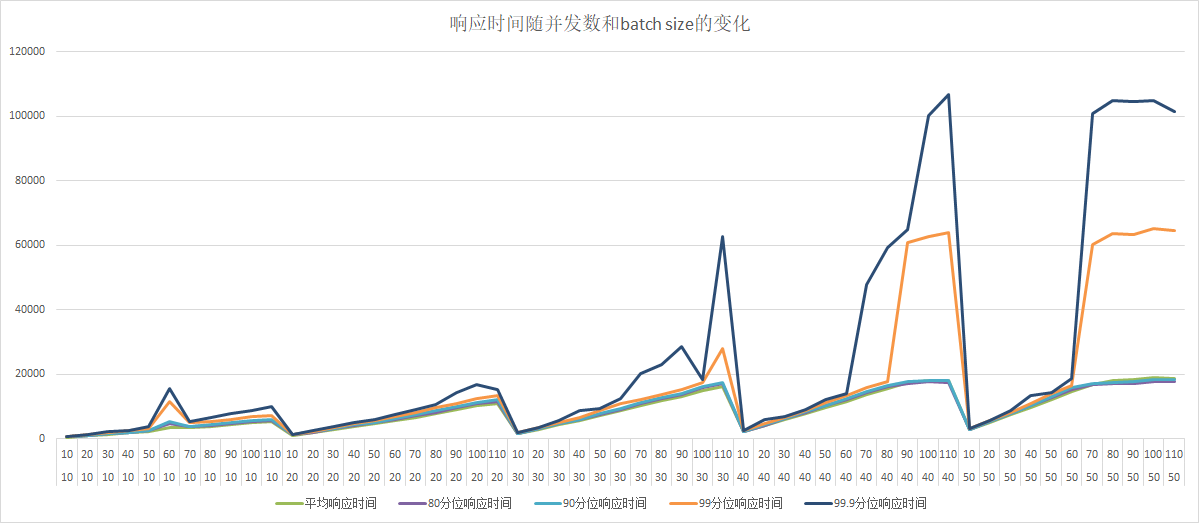

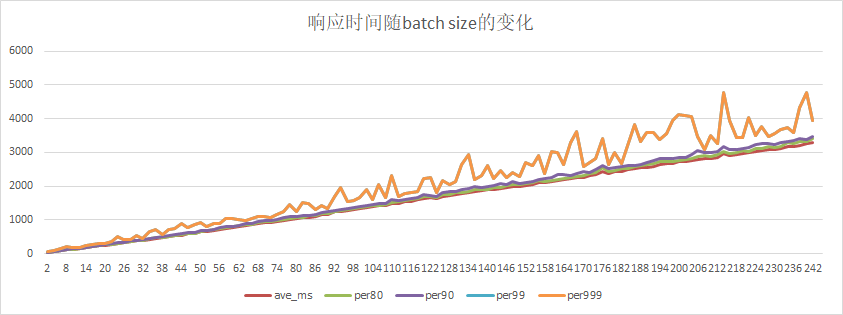

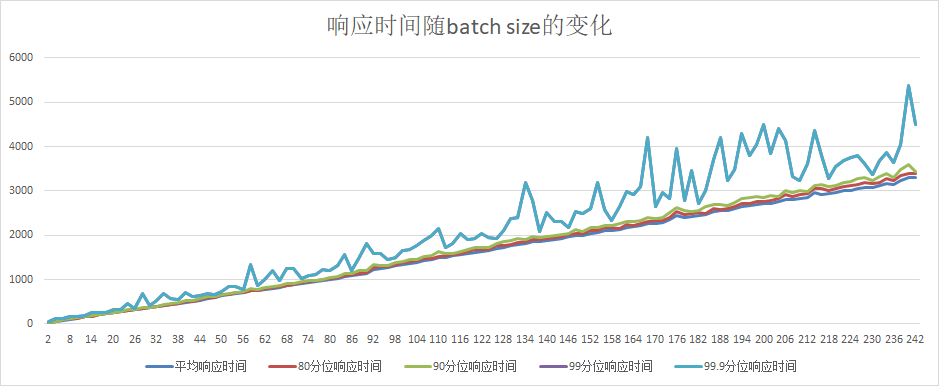

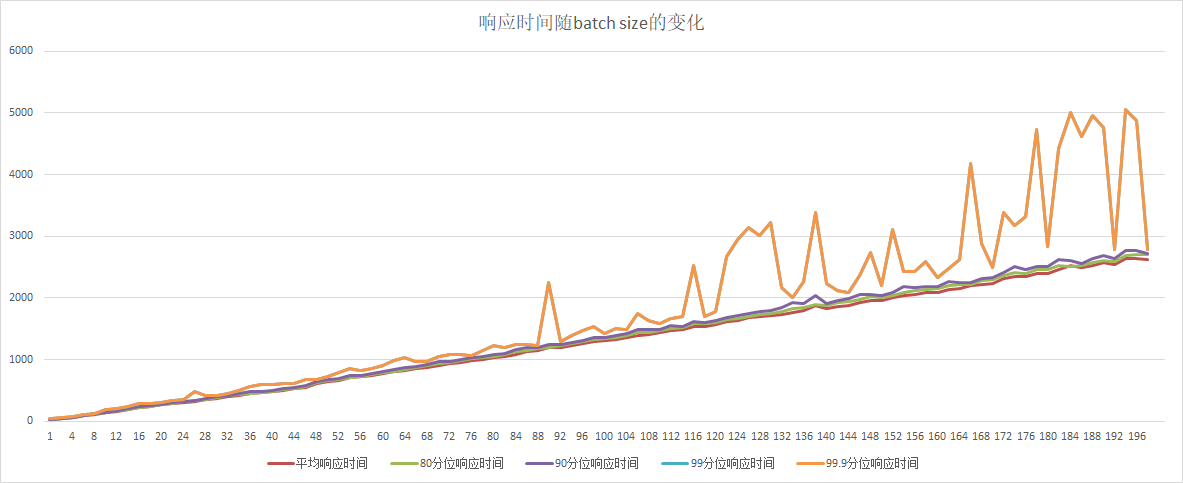

doc/gpu-local-time-batchsize.png (new file, mode 100755, 129.0 KB)

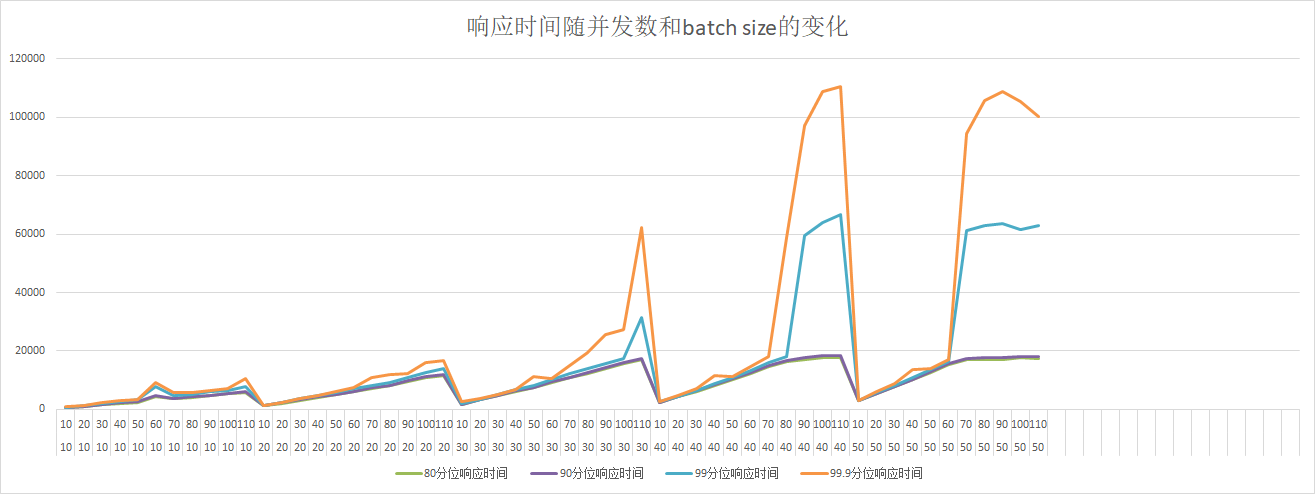

126.2 KB

39.0 KB

44.2 KB

68.1 KB

60.4 KB

17.0 KB

18.4 KB

36.6 KB

51.6 KB

23.2 KB

14.3 KB

62.3 KB

51.4 KB

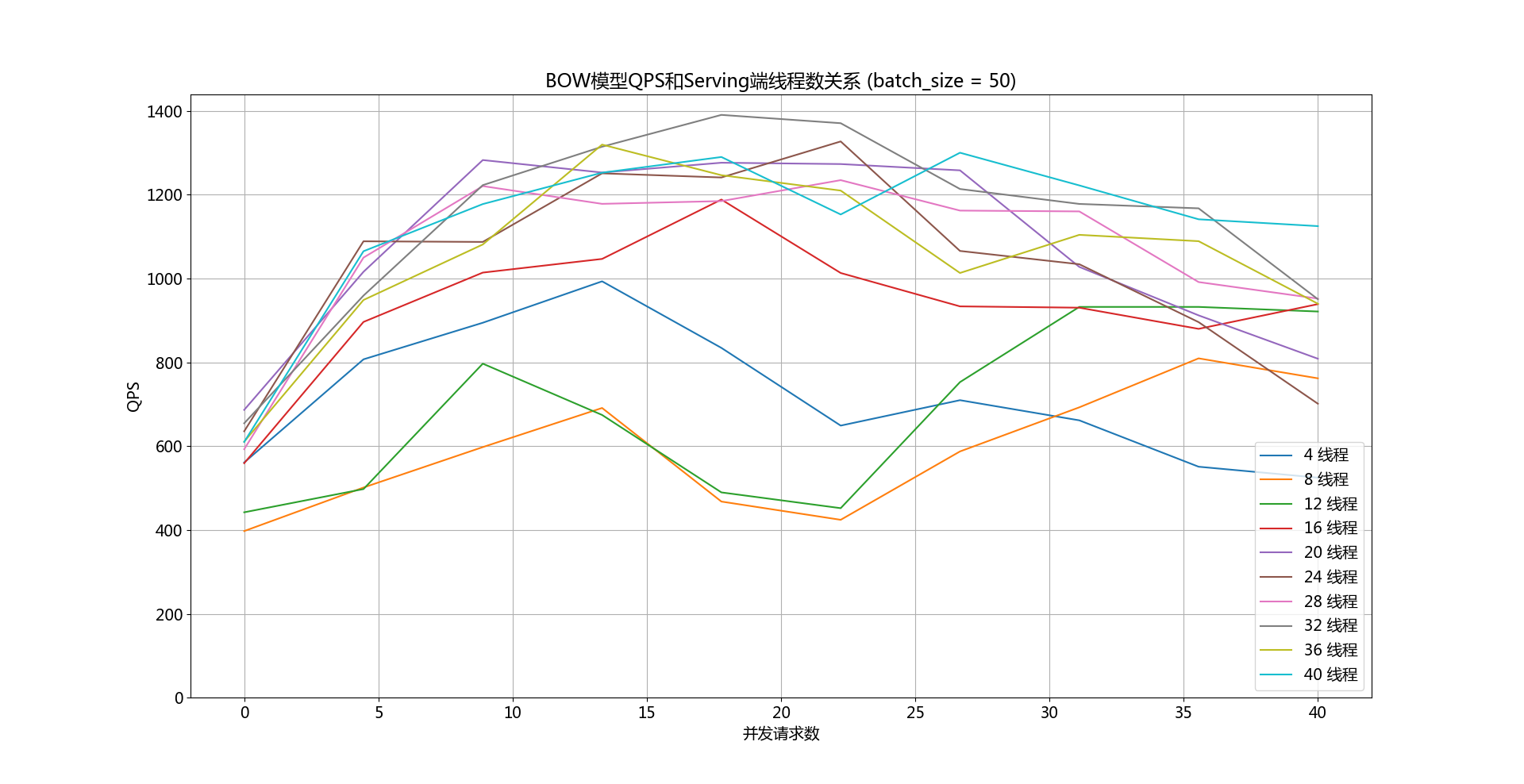

doc/qps-threads-bow.png (new file, mode 100755, 237.2 KB)

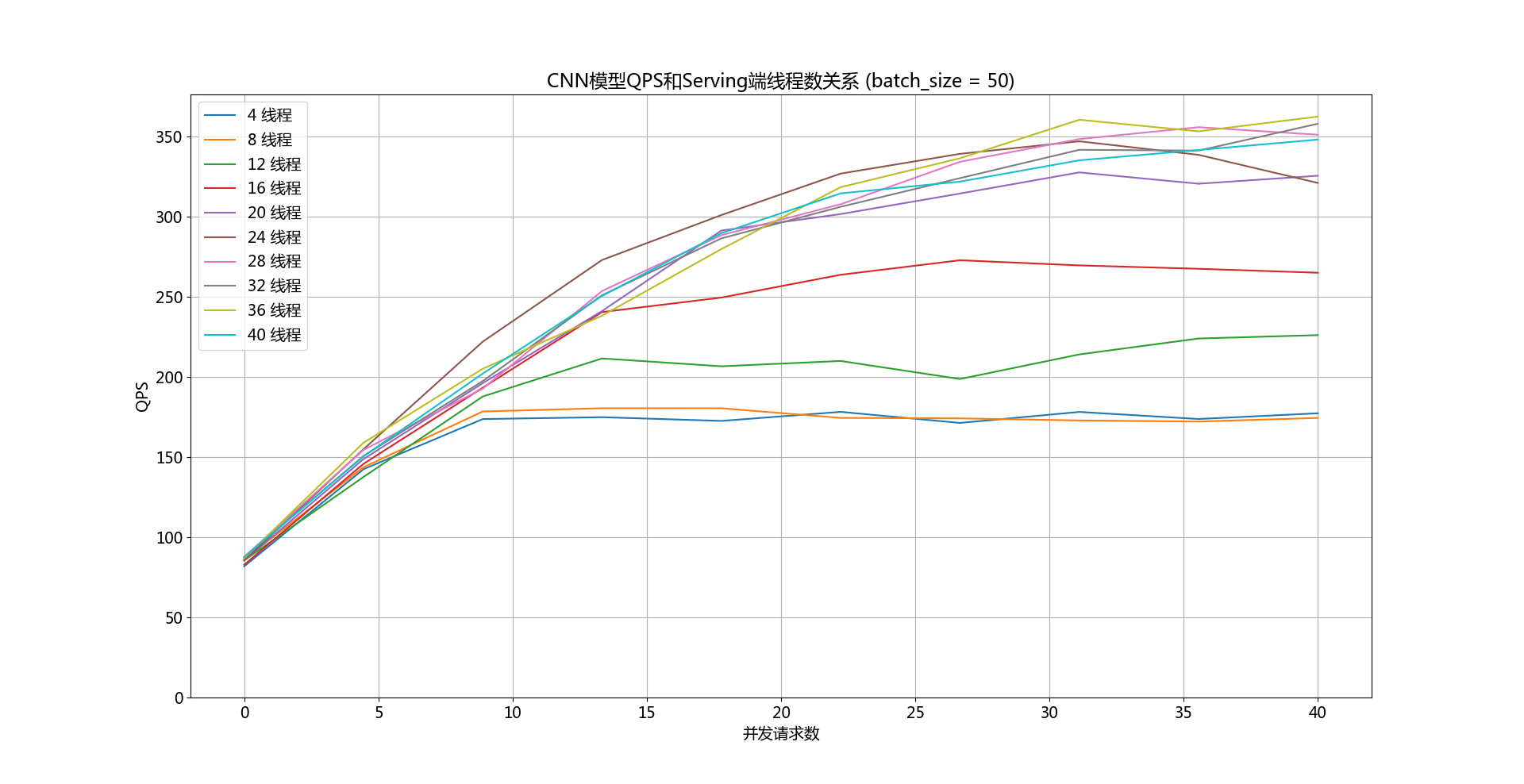

doc/qps-threads-cnn.png (new file, mode 100755, 174.9 KB)

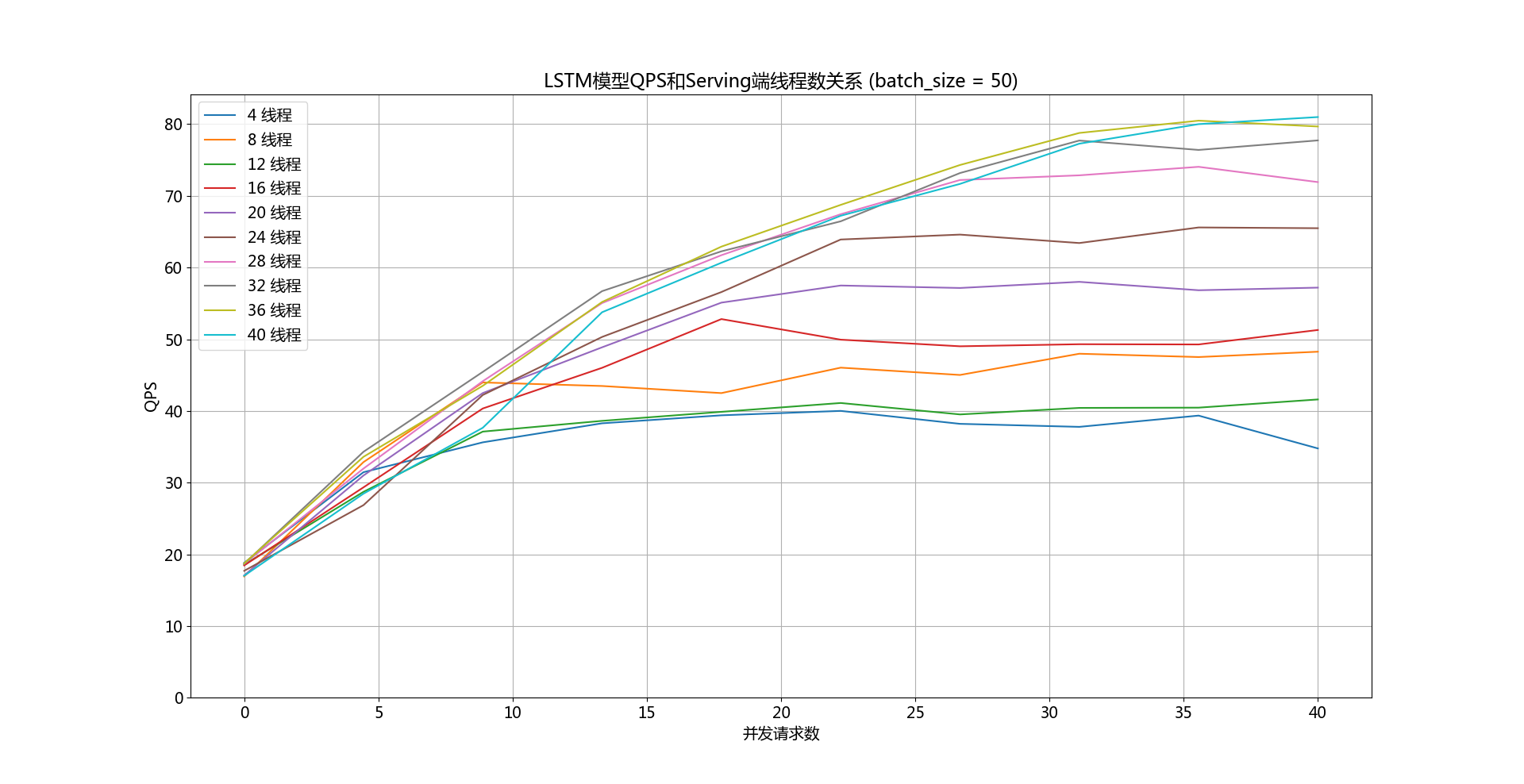

doc/qps-threads-lstm.png (new file, mode 100755, 176.9 KB)

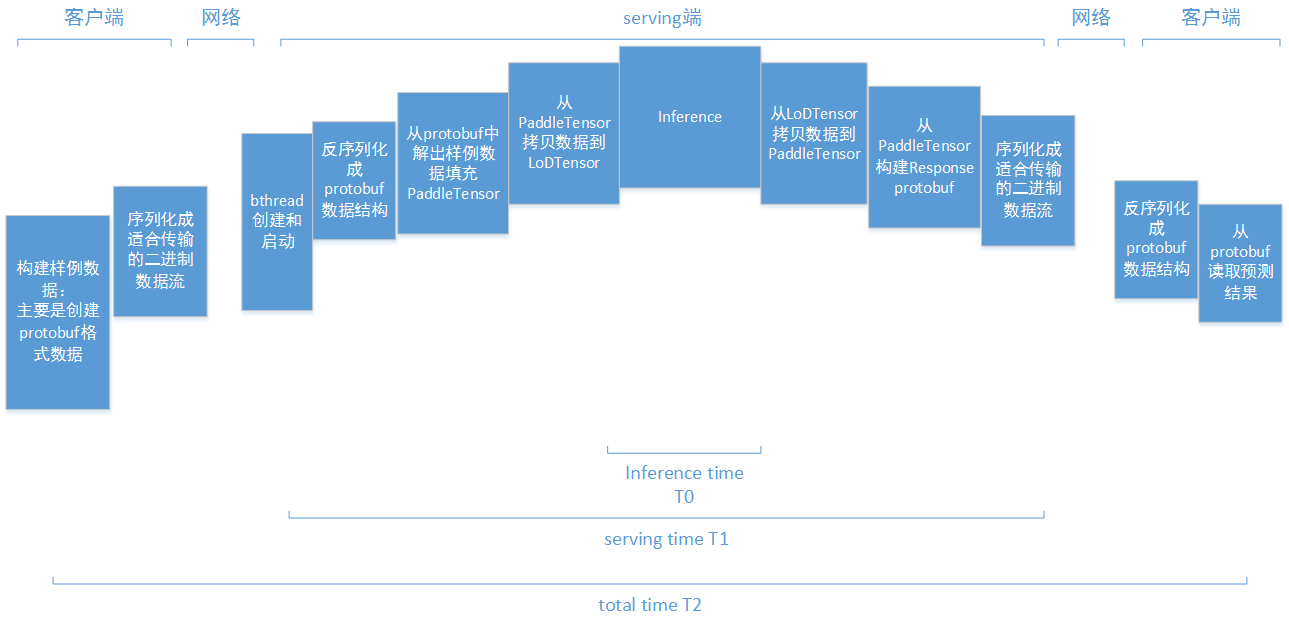

doc/serving-timings.png (new file, mode 100755, 36.9 KB)