Merge pull request #714 from barrierye/pipeline-update

update pipeline2

doc/PIPELINE_SERVING.md

0 → 100644

doc/PIPELINE_SERVING_CN.md

0 → 100644

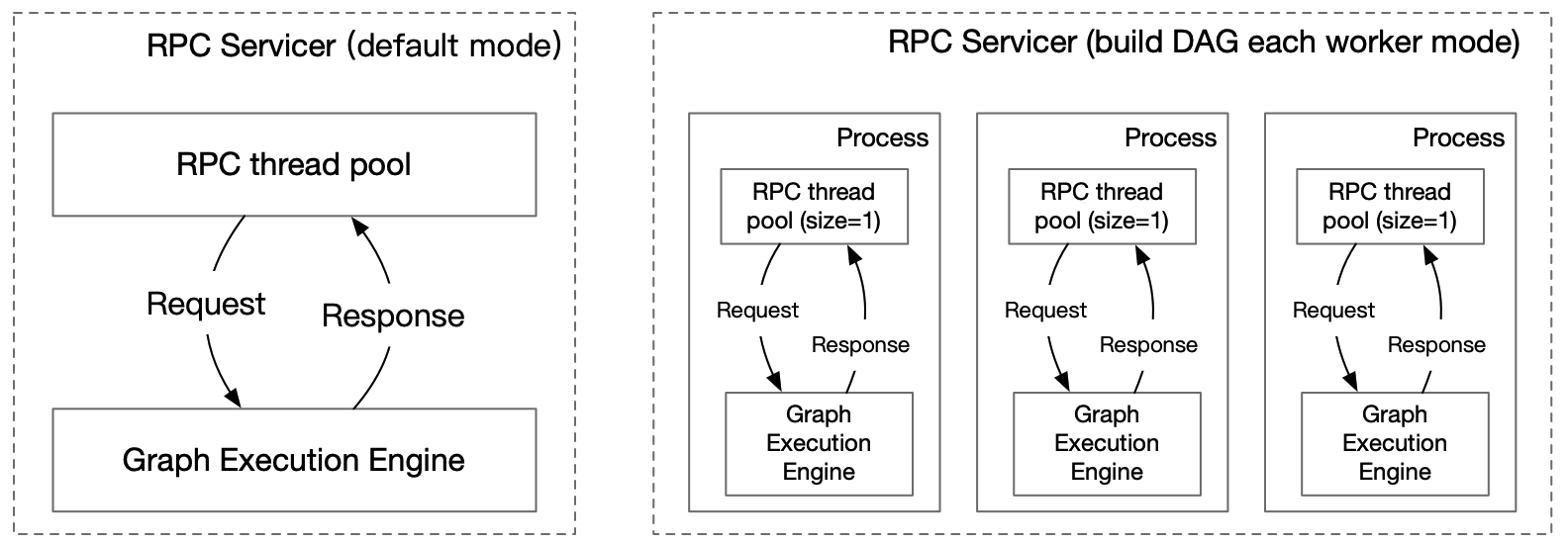

doc/pipeline_serving-image1.png

0 → 100644

96.0 KB

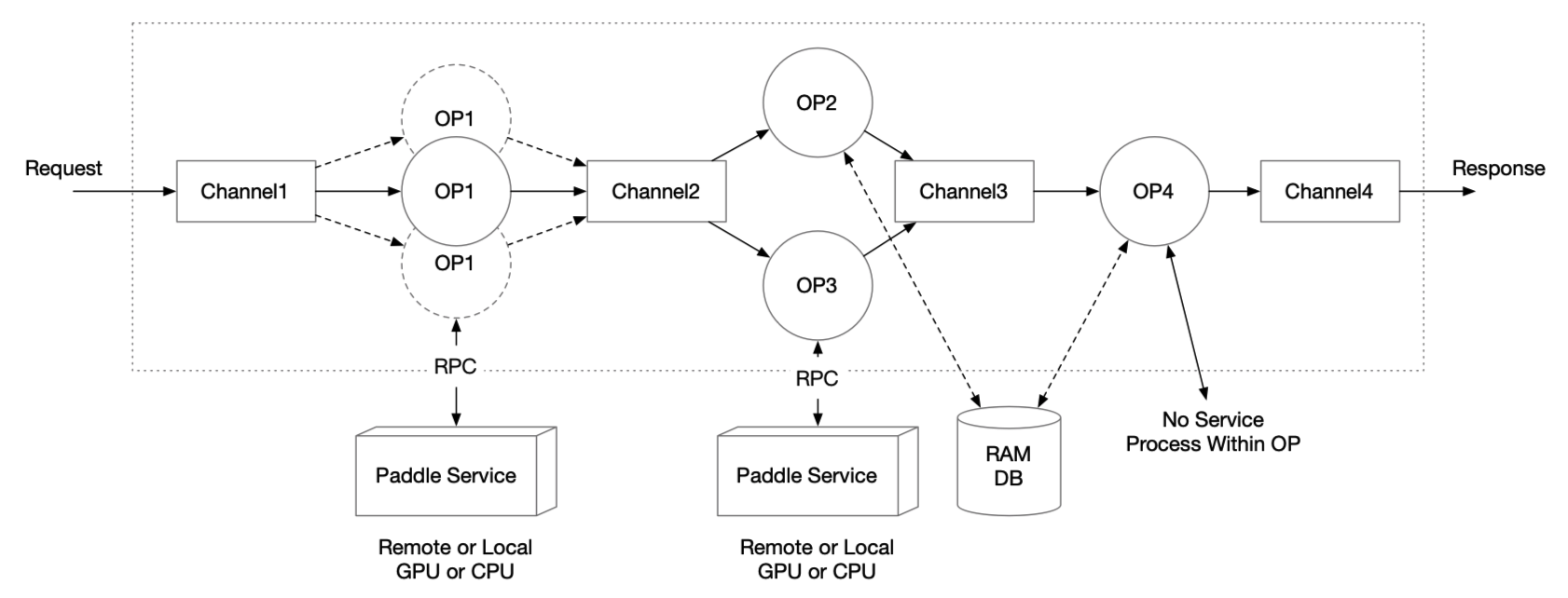

doc/pipeline_serving-image2.png

0 → 100644

129.5 KB

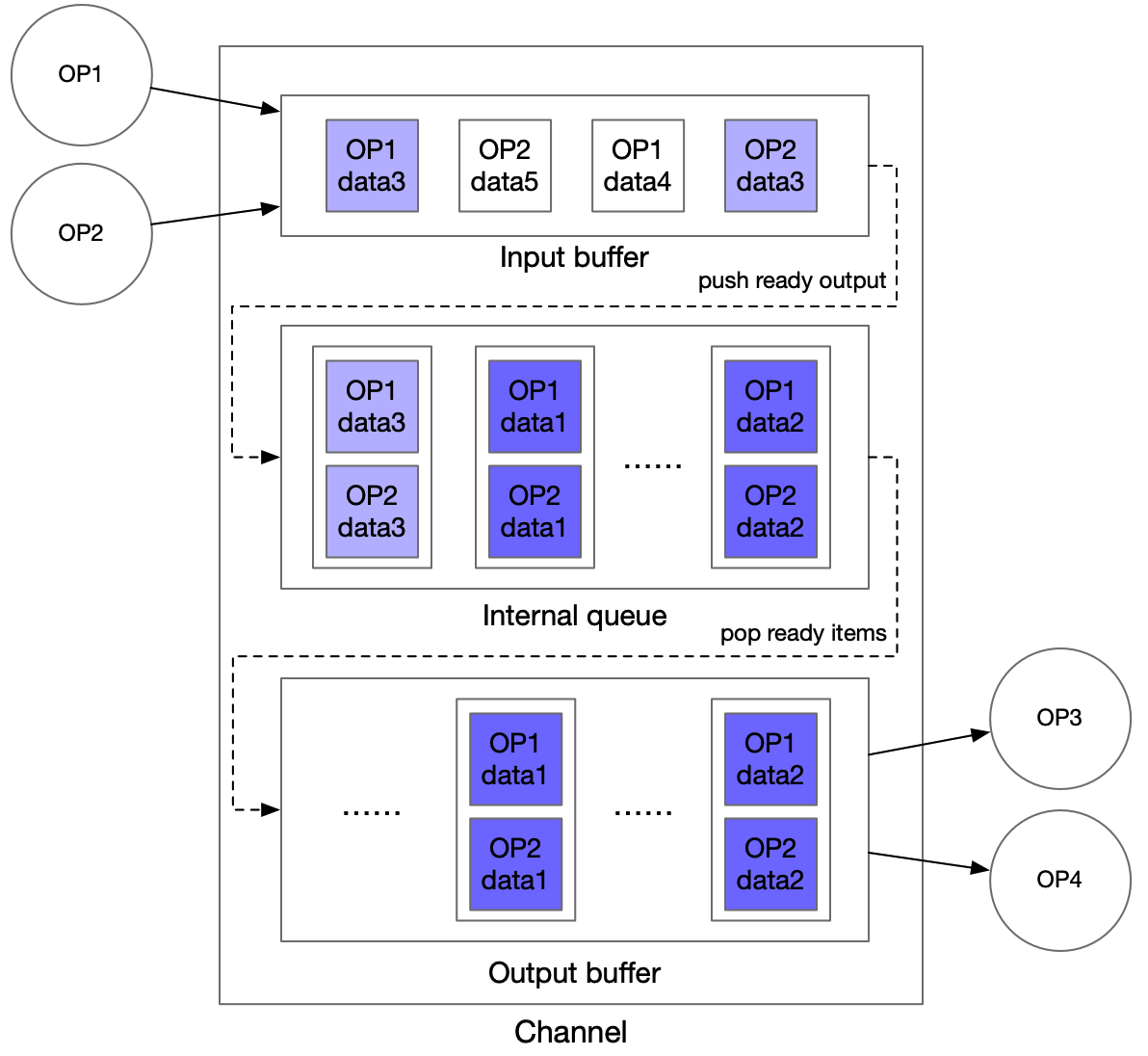

doc/pipeline_serving-image3.png

0 → 100644

126.3 KB

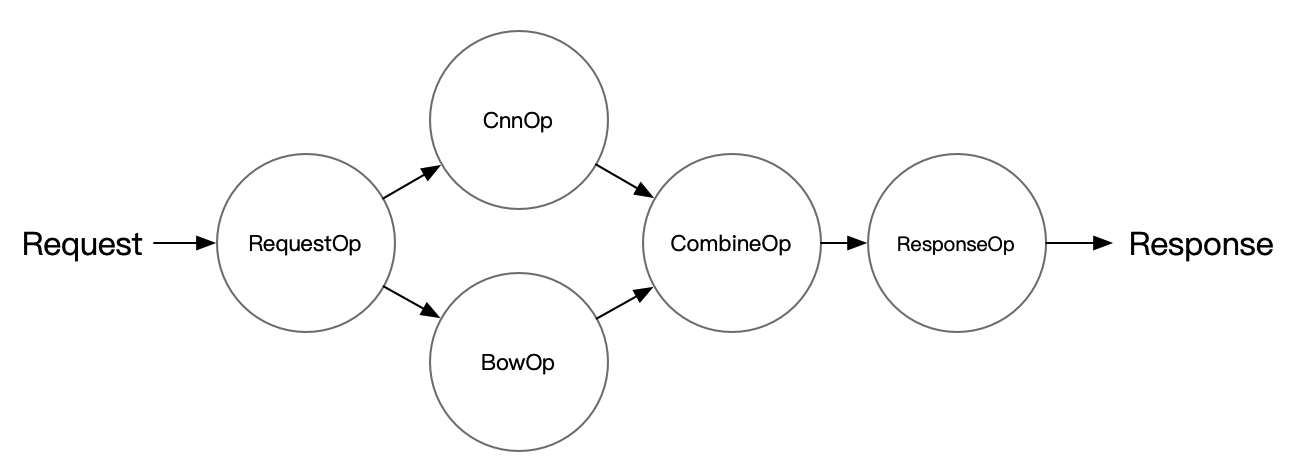

doc/pipeline_serving-image4.png

0 → 100644

62.1 KB

python/pipeline/analyse.py

0 → 100644

python/pipeline/dag.py

0 → 100644

This diff is collapsed.