Merge branch 'develop' of https://github.com/PaddlePaddle/Serving into ysl_add_function

doc/Latest_Packages_EN.md

0 → 100644

doc/Offical_Docs/1-2_Benchmark.md

0 → 100644

doc/Offical_Docs/Index_CN.md

0 → 100644

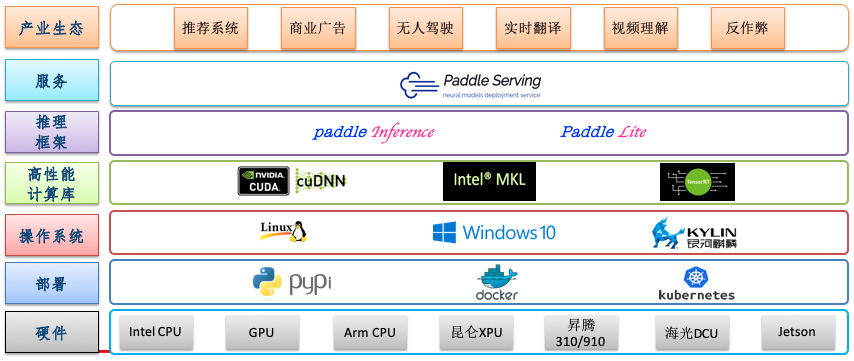

doc/images/tech_stack.png

0 → 100644

111.2 KB

38.8 KB