Merge branch 'develop' into develop

Showing

doc/BERT_10_MINS.md

Deleted

100644 → 0

doc/BERT_10_MINS_CN.md

Deleted

100644 → 0

doc/GRPC_IMPL_CN.md

Deleted

100644 → 0

doc/HTTP_SERVICE_CN.md

100755 → 100644

File mode changed from 100755 to 100644

doc/Makefile

Deleted

100644 → 0

doc/PERFORMANCE_OPTIM.md

Deleted

100644 → 0

doc/PERFORMANCE_OPTIM_CN.md

Deleted

100644 → 0

doc/UWSGI_DEPLOY.md

Deleted

100644 → 0

doc/UWSGI_DEPLOY_CN.md

Deleted

100644 → 0

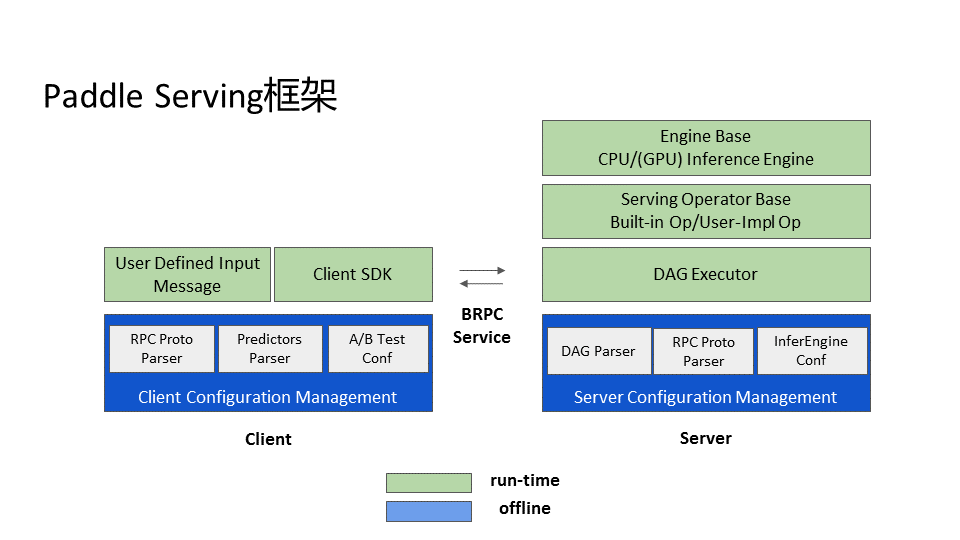

doc/architecture.png

Deleted

100644 → 0

21.9 KB

24.2 KB

doc/blank.png

Deleted

100644 → 0

18.2 KB

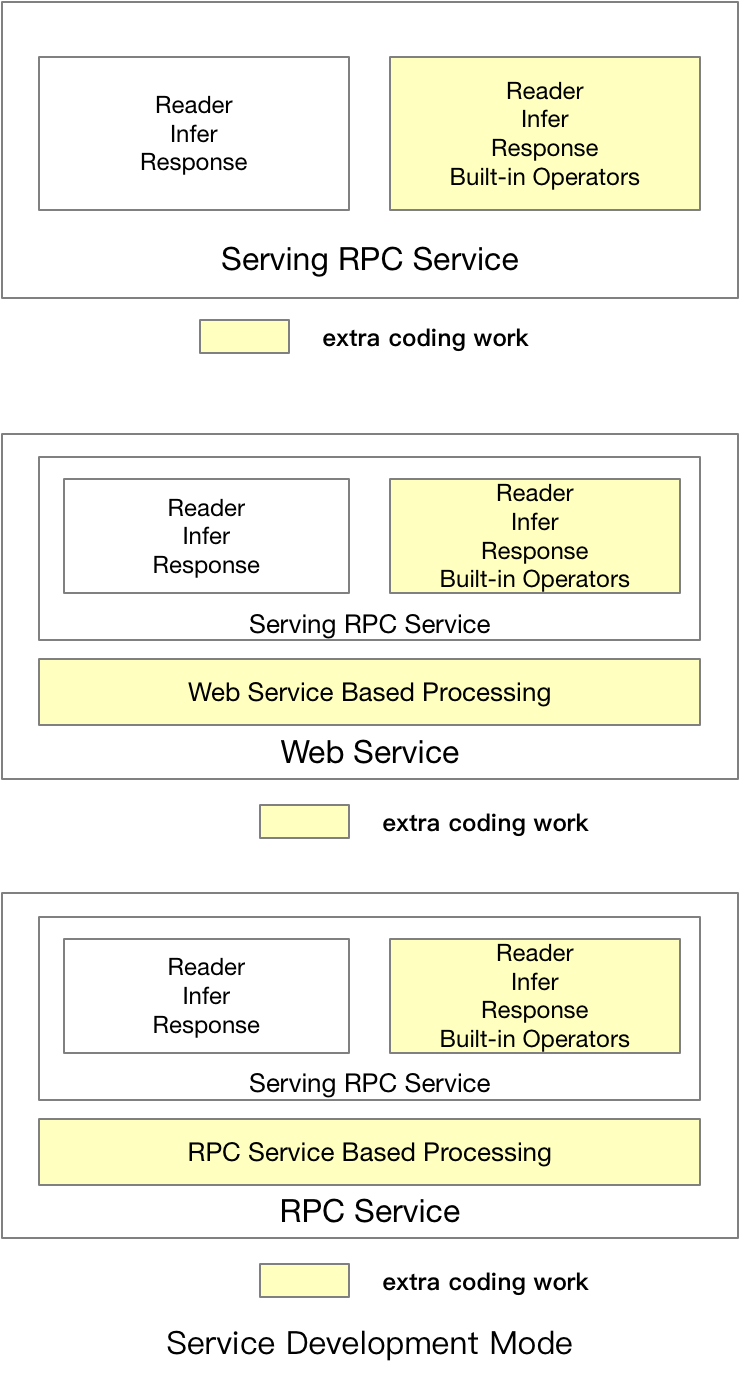

doc/coding_mode.png

Deleted

100644 → 0

126.6 KB

File moved

This diff is collapsed.

doc/doc_test_list

Deleted

100644 → 0

25.6 KB

30.4 KB

129.0 KB

126.2 KB

39.0 KB

44.2 KB

68.1 KB

60.4 KB

17.0 KB

18.4 KB

36.6 KB

51.6 KB

23.2 KB

14.3 KB

62.3 KB

51.4 KB

File moved

File moved

24.3 KB

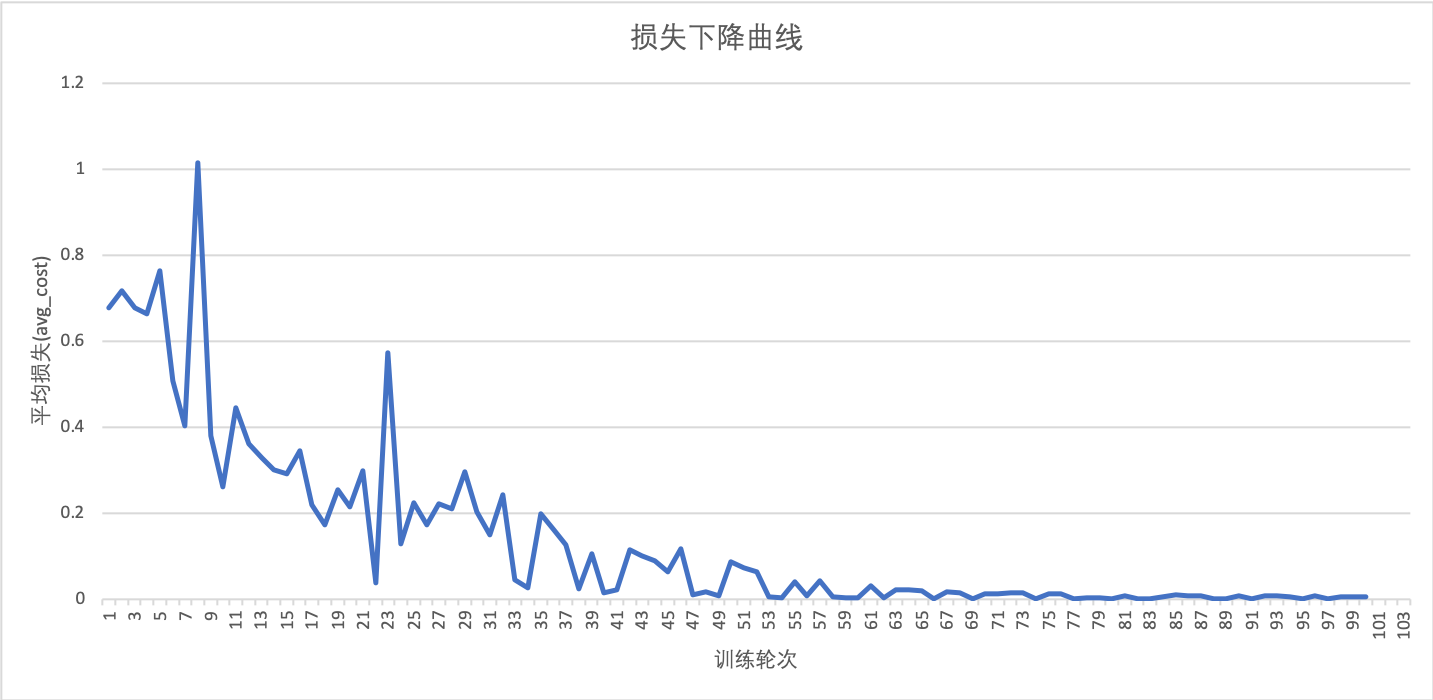

doc/imdb_loss.png

Deleted

100644 → 0

81.2 KB

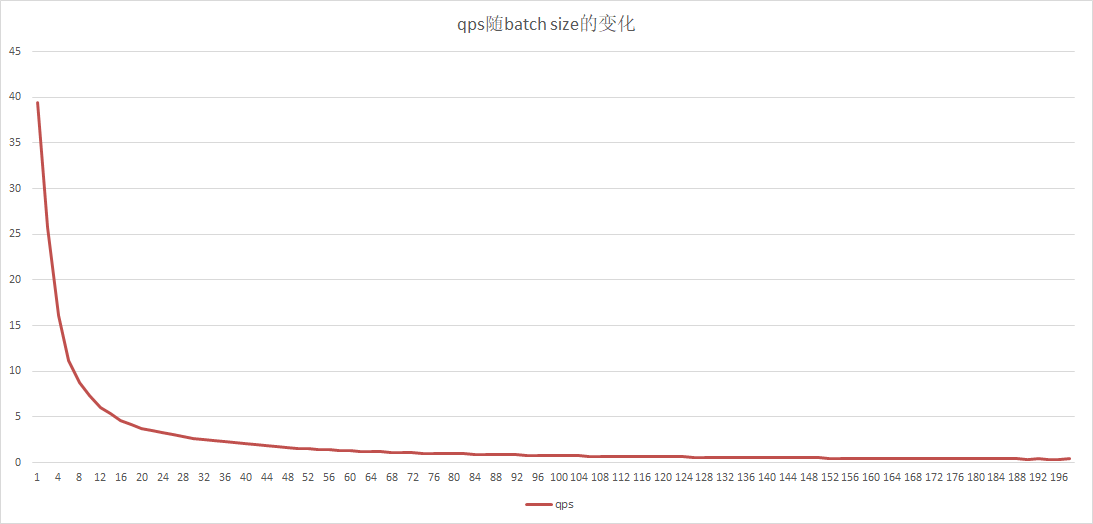

doc/qps-threads-bow.png

Deleted

100644 → 0

This diff is collapsed.

doc/qps-threads-cnn.png

Deleted

100644 → 0

This diff is collapsed.

doc/qps-threads-lstm.png

Deleted

100644 → 0

This diff is collapsed.

doc/qq.jpeg

Deleted

100644 → 0

This diff is collapsed.

doc/serving-timings.png

Deleted

100644 → 0

This diff is collapsed.

doc/wechat.jpeg

Deleted

100644 → 0

This diff is collapsed.