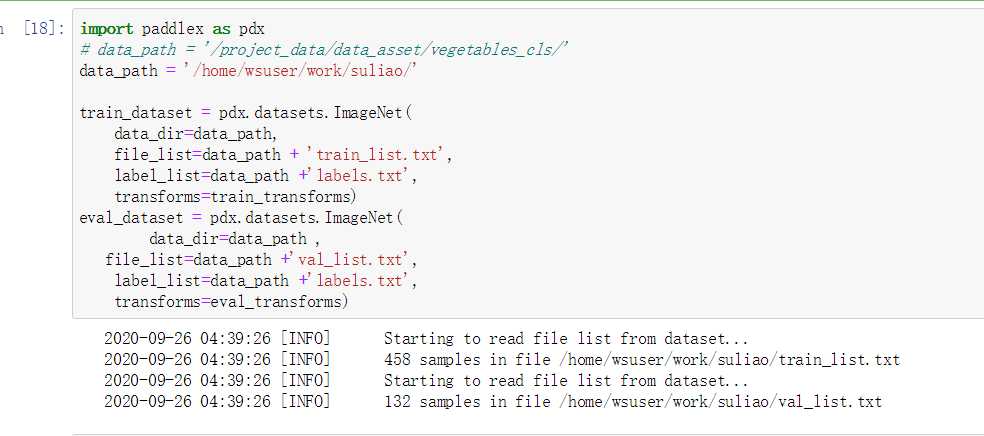

Error when running the PaddleX notebook version of MobileNetV3_ssld image classification; hoping the official maintainers can help

Created by: tspp520

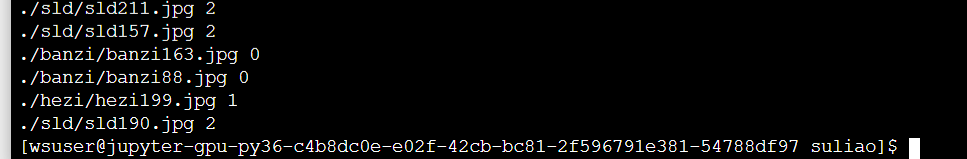

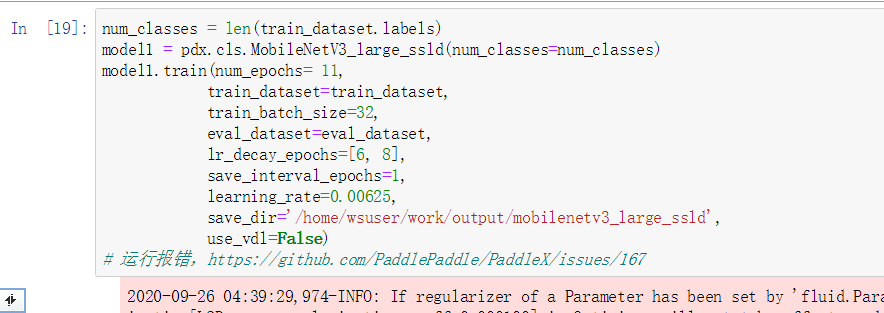

However, an error is raised as soon as loading finishes. The error output is as follows:

2020-09-26 04:39:29,974-INFO: If regularizer of a Parameter has been set by 'fluid.ParamAttr' or 'fluid.WeightNormParamAttr' already. The Regularization[L2Decay, regularization_coeff=0.000100] in Optimizer will not take effect, and it will only be applied to other Parameters!
2020-09-26 04:39:30 [INFO] Connecting PaddleHub server to get pretrain weights...
2020-09-26 04:39:32 [INFO] Load pretrain weights from /home/wsuser/work/output/mobilenetv3_large_ssld/pretrain/MobileNetV3_large_x1_0_ssld.
2020-09-26 04:39:32 [WARNING] [SKIP] Shape of pretrained weight /home/wsuser/work/output/mobilenetv3_large_ssld/pretrain/MobileNetV3_large_x1_0_ssld/fc_weights doesn't match.(Pretrained: (1280, 1000), Actual: (1280, 3))
2020-09-26 04:39:32 [WARNING] [SKIP] Shape of pretrained weight /home/wsuser/work/output/mobilenetv3_large_ssld/pretrain/MobileNetV3_large_x1_0_ssld/fc_offset doesn't match.(Pretrained: (1000,), Actual: (3,))
2020-09-26 04:39:32,903-WARNING: /home/wsuser/work/output/mobilenetv3_large_ssld/pretrain/MobileNetV3_large_x1_0_ssld.pdparams not found, try to load model file saved with [ save_params, save_persistables, save_vars ]
2020-09-26 04:39:33 [INFO] There are 268 varaibles in /home/wsuser/work/output/mobilenetv3_large_ssld/pretrain/MobileNetV3_large_x1_0_ssld are loaded.
2020-09-26 04:39:35,680-WARNING: Your reader has raised an exception!
Exception in thread Thread-15:
Traceback (most recent call last):
  File "/opt/conda/envs/Python-3.6-CUDA/lib/python3.6/threading.py", line 916, in _bootstrap_inner
    self.run()
  File "/opt/conda/envs/Python-3.6-CUDA/lib/python3.6/threading.py", line 864, in run
    self._target(*self._args, **self._kwargs)
  File "/opt/conda/envs/Python-3.6-CUDA/lib/python3.6/site-packages/paddle/fluid/reader.py", line 1157, in thread_main
    six.reraise(*sys.exc_info())
  File "/opt/conda/envs/Python-3.6-CUDA/lib/python3.6/site-packages/six.py", line 703, in reraise
    raise value
  File "/opt/conda/envs/Python-3.6-CUDA/lib/python3.6/site-packages/paddle/fluid/reader.py", line 1137, in thread_main
    for tensors in self._tensor_reader():
  File "/opt/conda/envs/Python-3.6-CUDA/lib/python3.6/site-packages/paddle/fluid/reader.py", line 1207, in tensor_reader_impl
    for slots in paddle_reader():
  File "/opt/conda/envs/Python-3.6-CUDA/lib/python3.6/site-packages/paddle/fluid/data_feeder.py", line 506, in reader_creator
    for item in reader():
  File "/opt/conda/envs/Python-3.6-CUDA/lib/python3.6/site-packages/paddlex/cv/datasets/dataset.py", line 191, in queue_reader
    batch_data = generate_minibatch(batch_data, mapper=mapper)
  File "/opt/conda/envs/Python-3.6-CUDA/lib/python3.6/site-packages/paddlex/cv/datasets/dataset.py", line 242, in generate_minibatch
    elif len(data[1]) == 0 or isinstance(data[1][0], tuple) and data[
TypeError: object of type 'int' has no len()
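To make the last line of the traceback above easier to read, here is a minimal sketch that reproduces only the failing expression `len(data[1]) == 0` in isolation. It assumes the second element of a sample is a plain integer class id. The sample layout and all names below are hypothetical; this is not PaddleX's actual code and not a confirmed diagnosis.

```python
def second_field_is_empty(sample):
    """Mimic only the expression `len(data[1]) == 0` seen in the traceback."""
    return len(sample[1]) == 0


def try_check(sample):
    """Return the check result, or the TypeError message if the check fails."""
    try:
        return second_field_is_empty(sample)
    except TypeError as exc:
        return str(exc)


# Hypothetical samples: (image placeholder, label).
sample_with_int_label = ([[0.0]], 2)     # label is a bare int
sample_with_list_label = ([[0.0]], [2])  # label is wrapped in a list

int_result = try_check(sample_with_int_label)
list_result = try_check(sample_with_list_label)

print(int_result)   # the same TypeError message as in the traceback
print(list_result)  # False: a non-empty sequence passes the check
```

If this assumption holds, the check in `generate_minibatch` expects a sequence in that position while the sample carries an integer. Whether that comes from the dataset, the transforms, or a version mismatch cannot be told from the log alone.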

EnforceNotMet Traceback (most recent call last) in
9 learning_rate=0.00625,
10 save_dir='/home/wsuser/work/output/mobilenetv3_large_ssld',
---> 11 use_vdl=False)
12 # Run fails with an error, https://github.com/PaddlePaddle/PaddleX/issues/167

/opt/conda/envs/Python-3.6-CUDA/lib/python3.6/site-packages/paddlex/cv/models/classifier.py in train(self, num_epochs, train_dataset, train_batch_size, eval_dataset, save_interval_epochs, log_interval_steps, save_dir, pretrain_weights, optimizer, learning_rate, warmup_steps, warmup_start_lr, lr_decay_epochs, lr_decay_gamma, use_vdl, sensitivities_file, eval_metric_loss, early_stop, early_stop_patience, resume_checkpoint)
205 use_vdl=use_vdl,
206 early_stop=early_stop,
--> 207 early_stop_patience=early_stop_patience)
208
209 def evaluate(self,

/opt/conda/envs/Python-3.6-CUDA/lib/python3.6/site-packages/paddlex/cv/models/base.py in train_loop(self, num_epochs, train_dataset, train_batch_size, eval_dataset, save_interval_epochs, log_interval_steps, save_dir, use_vdl, early_stop, early_stop_patience)
483 step_start_time = time.time()
484 epoch_start_time = time.time()
--> 485 for step, data in enumerate(self.train_data_loader()):
486 outputs = self.exe.run(
487 self.parallel_train_prog,

/opt/conda/envs/Python-3.6-CUDA/lib/python3.6/site-packages/paddle/fluid/reader.py in next(self)
1101 return self._reader.read_next_list()
1102 else:
-> 1103 return self._reader.read_next()
1104 except StopIteration:
1105 self._queue.close()

EnforceNotMet:

C++ Call Stacks (More useful to developers):

0 std::string paddle::platform::GetTraceBackString<std::string const&>(std::string const&, char const*, int)
1 paddle::platform::EnforceNotMet::EnforceNotMet(std::string const&, char const*, int)
2 paddle::operators::reader::BlockingQueue<std::vector<paddle::framework::LoDTensor, std::allocator<paddle::framework::LoDTensor> > >::Receive(std::vector<paddle::framework::LoDTensor, std::allocator<paddle::framework::LoDTensor> >)
3 paddle::operators::reader::PyReader::ReadNext(std::vector<paddle::framework::LoDTensor, std::allocator<paddle::framework::LoDTensor> >)
4 std::_Function_handler<std::unique_ptr<std::__future_base::_Result_base, std::__future_base::_Result_base::_Deleter> (), std::__future_base::_Task_setter<std::unique_ptr<std::__future_base::_Result, std::__future_base::_Result_base::_Deleter>, unsigned long> >::_M_invoke(std::_Any_data const&)
5 std::__future_base::_State_base::_M_do_set(std::function<std::unique_ptr<std::__future_base::_Result_base, std::__future_base::_Result_base::_Deleter> ()>&, bool&)
6 ThreadPool::ThreadPool(unsigned long)::{lambda()#1}::operator()() const

Error Message Summary:

Error: Blocking queue is killed because the data reader raises an exception [Hint: Expected killed_ != true, but received killed_:1 == true:1.] at (/paddle/paddle/fluid/operators/reader/blocking_queue.h:141)
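The summary above says the queue was killed because the data reader raised an exception, so the `EnforceNotMet` in the main thread appears to be a follow-on of the reader-thread `TypeError` rather than a separate fault. A toy sketch of that pattern, using only the Python standard library, is shown here. `ToyBlockingQueue`, `QueueKilled`, and `reader_thread` are hypothetical names for illustration; this is not how Paddle implements its queue.

```python
import queue
import threading

_DONE = object()  # sentinel marking a normal end of data


class QueueKilled(Exception):
    """Raised to the consumer after the producer has killed the queue."""


class ToyBlockingQueue:
    def __init__(self):
        self._q = queue.Queue()
        self._killed = threading.Event()

    def put(self, item):
        self._q.put(item)

    def kill(self):
        self._killed.set()

    def get(self):
        # Drain any remaining items first; raise once empty and killed.
        while True:
            try:
                return self._q.get(timeout=0.05)
            except queue.Empty:
                if self._killed.is_set():
                    raise QueueKilled("queue killed: the data reader raised an exception")


def reader_thread(q, samples, errors):
    try:
        for sample in samples:
            q.put(len(sample))  # raises TypeError when sample is an int
        q.put(_DONE)
    except Exception as exc:
        errors.append(exc)  # the original error stays in the reader thread
        q.kill()            # the consumer only learns that the queue died


def run(samples):
    q, errors, received, consumer_error = ToyBlockingQueue(), [], [], None
    worker = threading.Thread(target=reader_thread, args=(q, samples, errors))
    worker.start()
    try:
        while True:
            item = q.get()
            if item is _DONE:
                break
            received.append(item)
    except QueueKilled as exc:
        consumer_error = exc
    worker.join()
    return received, errors, consumer_error


received, errors, consumer_error = run([[1, 2], [3], 7])
print(received, errors, consumer_error)
```

In this sketch the consumer sees only `QueueKilled`, while the `TypeError` that explains the failure lives in the reader thread. That mirrors the two tracebacks in this report.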

====================

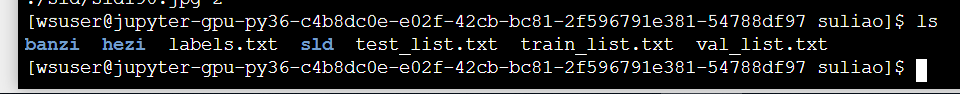

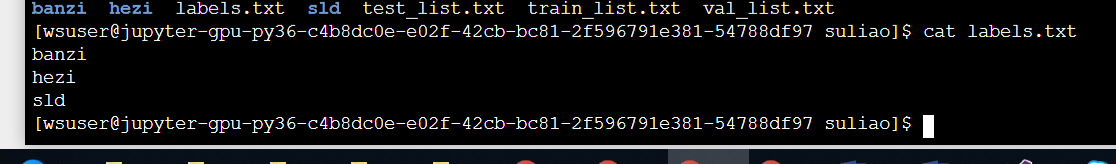

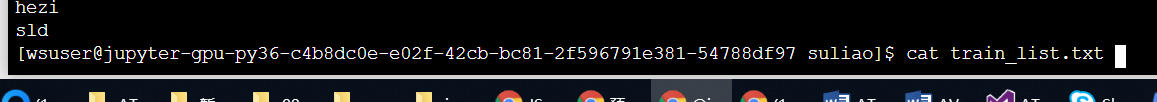
