[cherry pick] ofa docs and bug fix (#612)

* cherry pick * fix bug when paddle upgrade (#606) * add jpg

Showing

demo/ofa/bert/run_glue_ofa.py

0 → 100644

此差异已折叠。

demo/ofa/ernie/ofa_ernie.py

0 → 100644

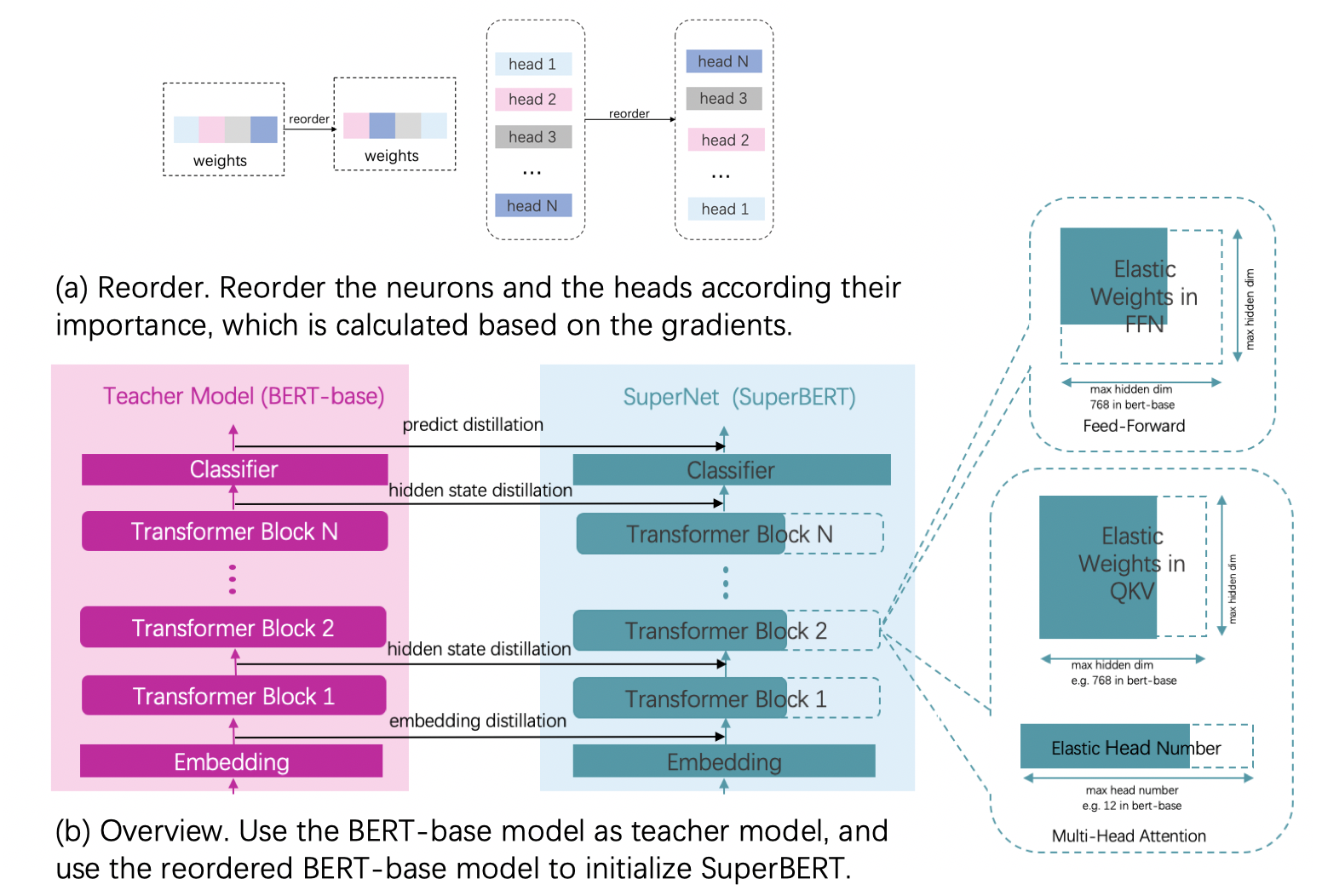

docs/images/algo/ofa_bert.jpg

0 → 100644

990.1 KB

docs/zh_cn/api_cn/ofa_api.rst

0 → 100644

此差异已折叠。

tests/test_ofa_layers.py

0 → 100644

tests/test_ofa_layers_old.py

0 → 100644