add implementation of BiBERT (#1102)

demo/quant/BiBERT/README.md

0 → 100644

demo/quant/BiBERT/basic.py

0 → 100644

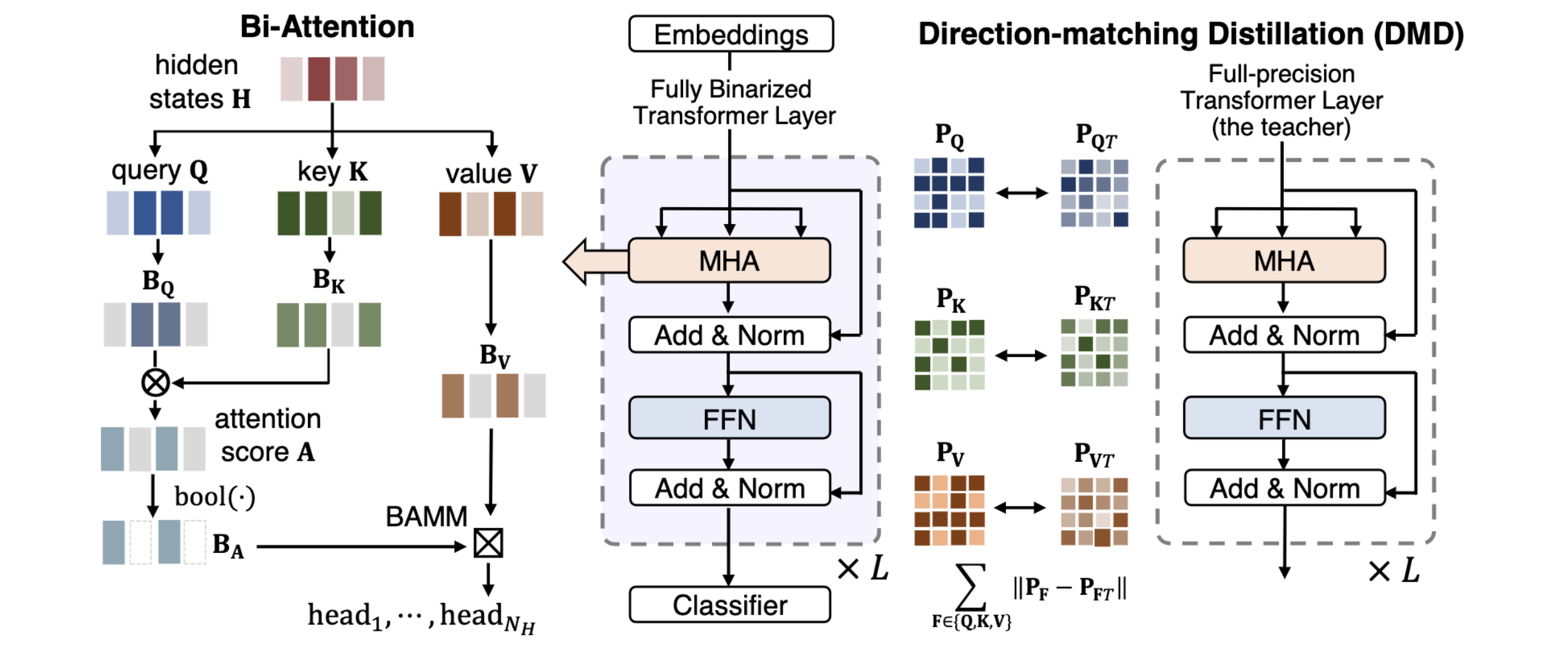

demo/quant/BiBERT/overview.png

0 → 100644

439.3 KB

demo/quant/BiBERT/run_glue.py

0 → 100644

demo/quant/BiBERT/task_distill.py

0 → 100644