Release pantheon (#56)

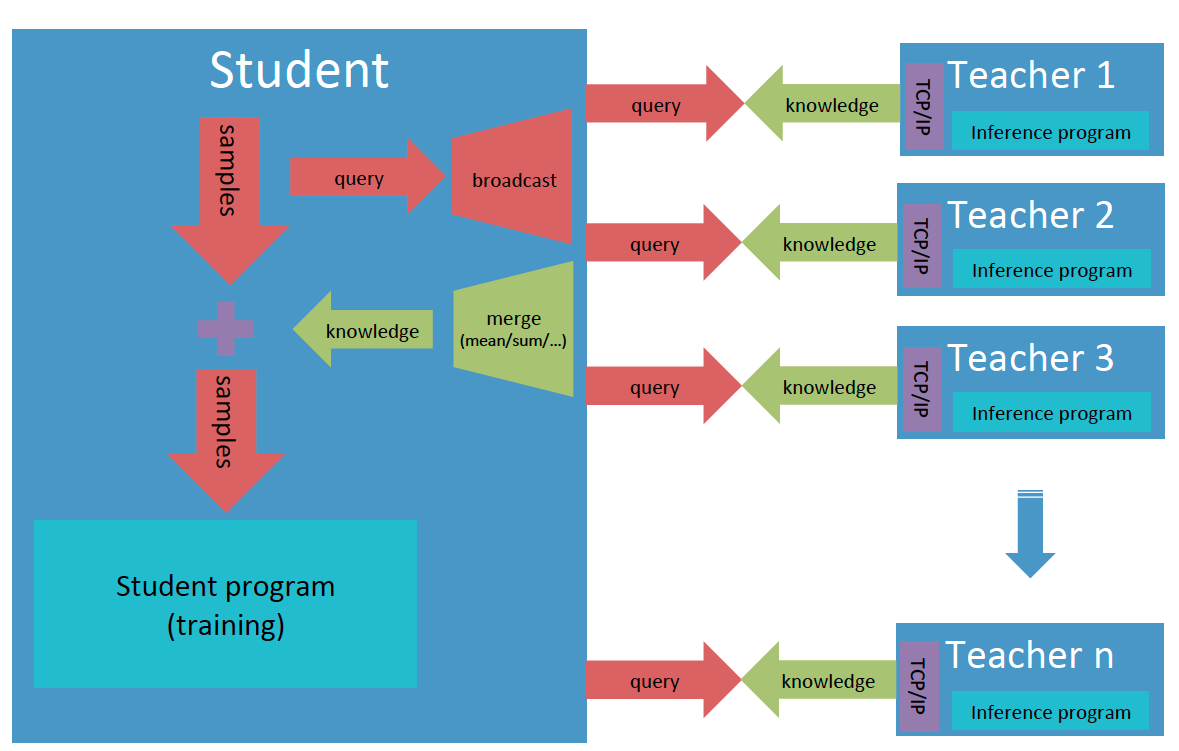

* Init pantheon
* Update README
* Fix pantheon import
* Update README
* Fix the possible bug when del student
* Format docs of public methods
* Add api guide & docs for pantheon
* Use str2bool instead of bool
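The "Use str2bool instead of bool" item in the commit message most likely refers to a well-known `argparse` pitfall: `type=bool` converts any non-empty string, including `"False"`, to `True`. The sketch below illustrates that general pattern only. It is not the helper from this commit (its source is not shown here), and the flag names `--use_cuda` and `--naive_flag` are hypothetical.

```python
import argparse


def str2bool(value):
    """Parse common true/false spellings (illustrative sketch, not the repo's code)."""
    if isinstance(value, bool):
        return value
    text = value.strip().lower()
    if text in ("true", "t", "yes", "y", "1"):
        return True
    if text in ("false", "f", "no", "n", "0"):
        return False
    raise argparse.ArgumentTypeError(f"expected a boolean, got {value!r}")


def build_parser():
    parser = argparse.ArgumentParser()
    # Hypothetical flag: parsed by spelling, so "false" really means False.
    parser.add_argument("--use_cuda", type=str2bool, default=False)
    # Hypothetical flag kept for contrast: bool("false") is True.
    parser.add_argument("--naive_flag", type=bool, default=False)
    return parser


if __name__ == "__main__":
    args = build_parser().parse_args(["--use_cuda", "false", "--naive_flag", "false"])
    print(args.use_cuda, args.naive_flag)
```

With `type=str2bool`, a command line such as `--use_cuda false` yields `False`, whereas the same value passed to a `type=bool` flag is silently treated as `True`.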


demo/pantheon/README.md

0 → 100644

demo/pantheon/run_student.py

0 → 100644

demo/pantheon/run_teacher1.py

0 → 100644

demo/pantheon/run_teacher2.py

0 → 100644

demo/pantheon/utils.py

0 → 100644

docs/docs/api/pantheon_api.md

0 → 100644

paddleslim/pantheon/README.md

0 → 100644

paddleslim/pantheon/__init__.py

0 → 100644


paddleslim/pantheon/student.py

0 → 100644

paddleslim/pantheon/teacher.py

0 → 100644

paddleslim/pantheon/utils.py

0 → 100644