add bipointnet. (#1727)

example/BiPointNet/README.md

0 → 100644

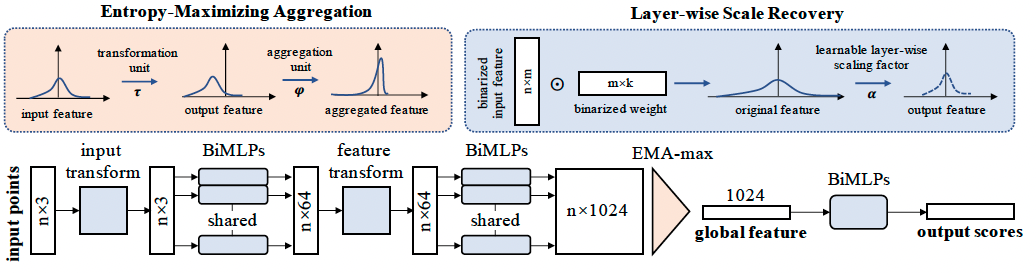

example/BiPointNet/arch.png

0 → 100644

165.8 KB

example/BiPointNet/basic.py

0 → 100644

example/BiPointNet/data.py

0 → 100644

example/BiPointNet/model.py

0 → 100644

example/BiPointNet/test.py

0 → 100644

example/BiPointNet/train.py

0 → 100644