Merge branch 'develop' of https://github.com/PaddlePaddle/PaddleSeg into develop

configs/cityscape_fast_scnn.yaml

0 → 100644

configs/fast_scnn_pet.yaml

0 → 100644

slim/distillation/README.md

0 → 100644

slim/distillation/cityscape.yaml

0 → 100644

slim/nas/README.md

0 → 100644

slim/nas/deeplab.py

0 → 100644

slim/nas/eval_nas.py

0 → 100644

slim/nas/model_builder.py

0 → 100644

slim/nas/train_nas.py

0 → 100644

slim/prune/README.md

0 → 100644

slim/prune/eval_prune.py

0 → 100644

slim/prune/train_prune.py

0 → 100644

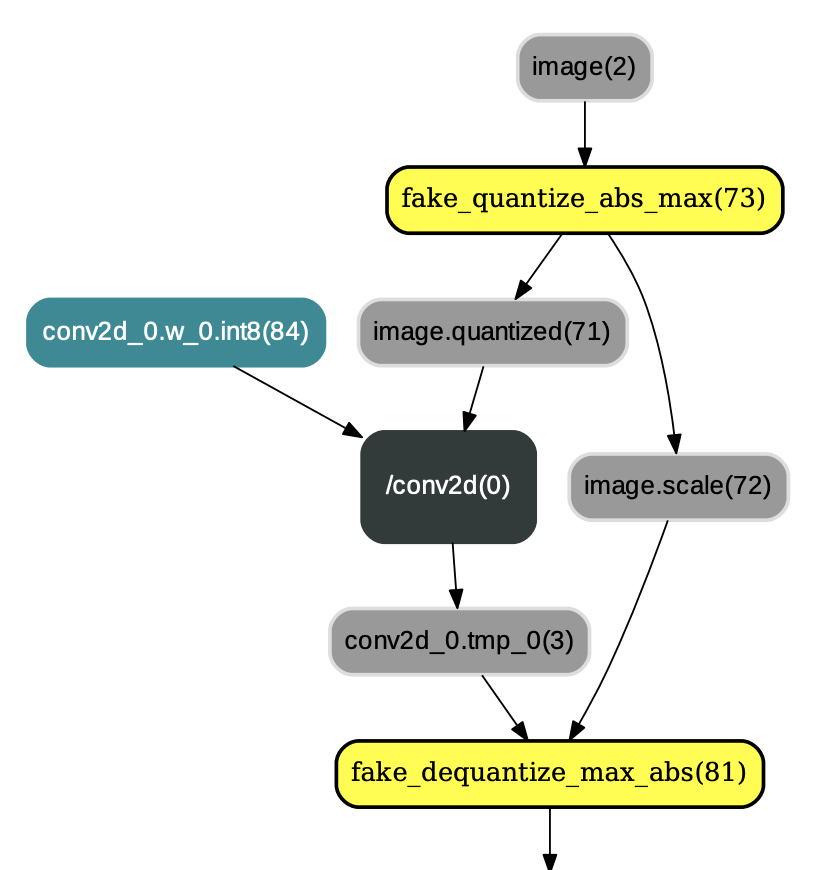

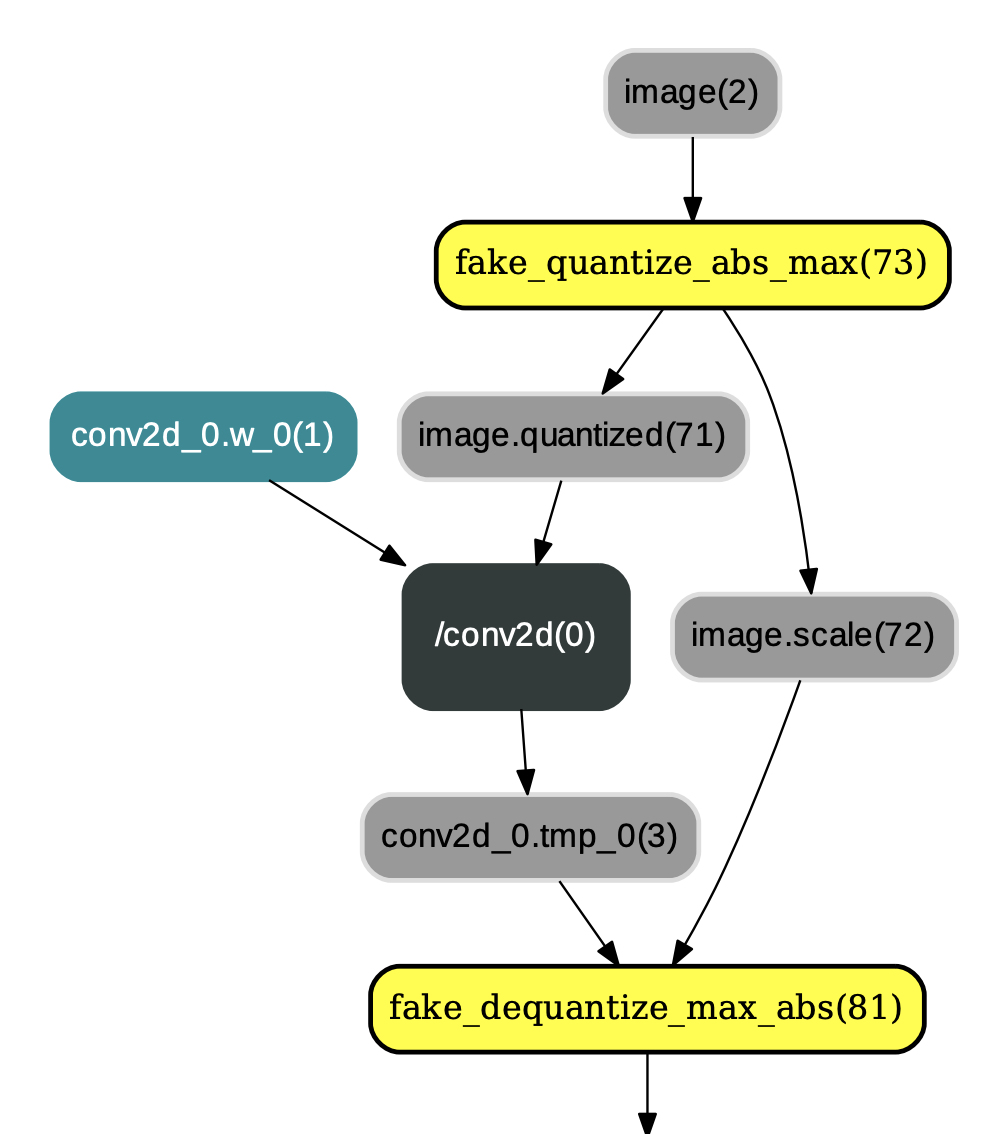

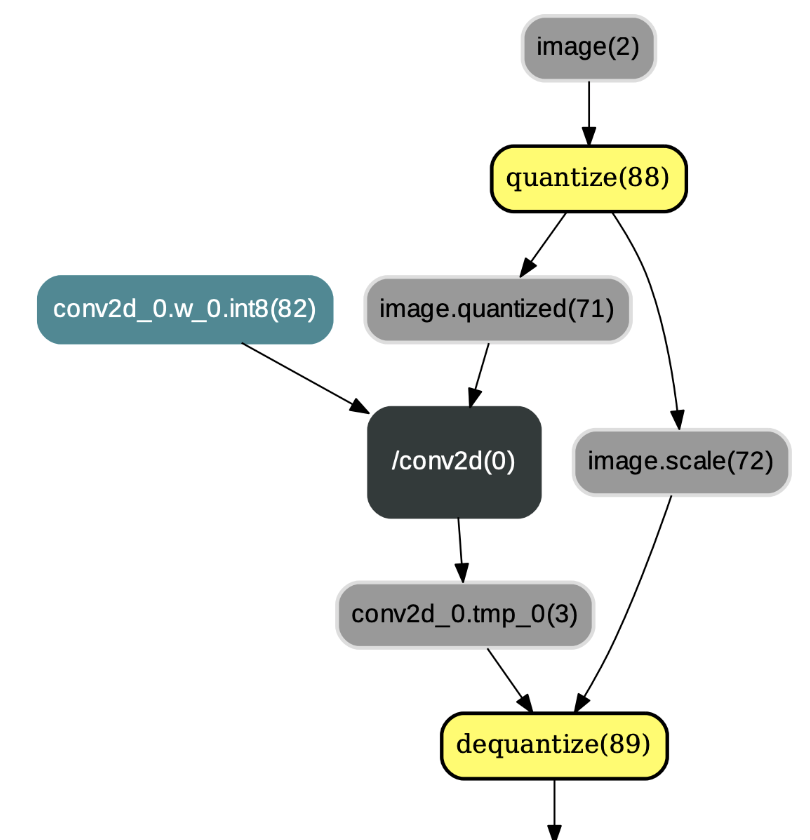

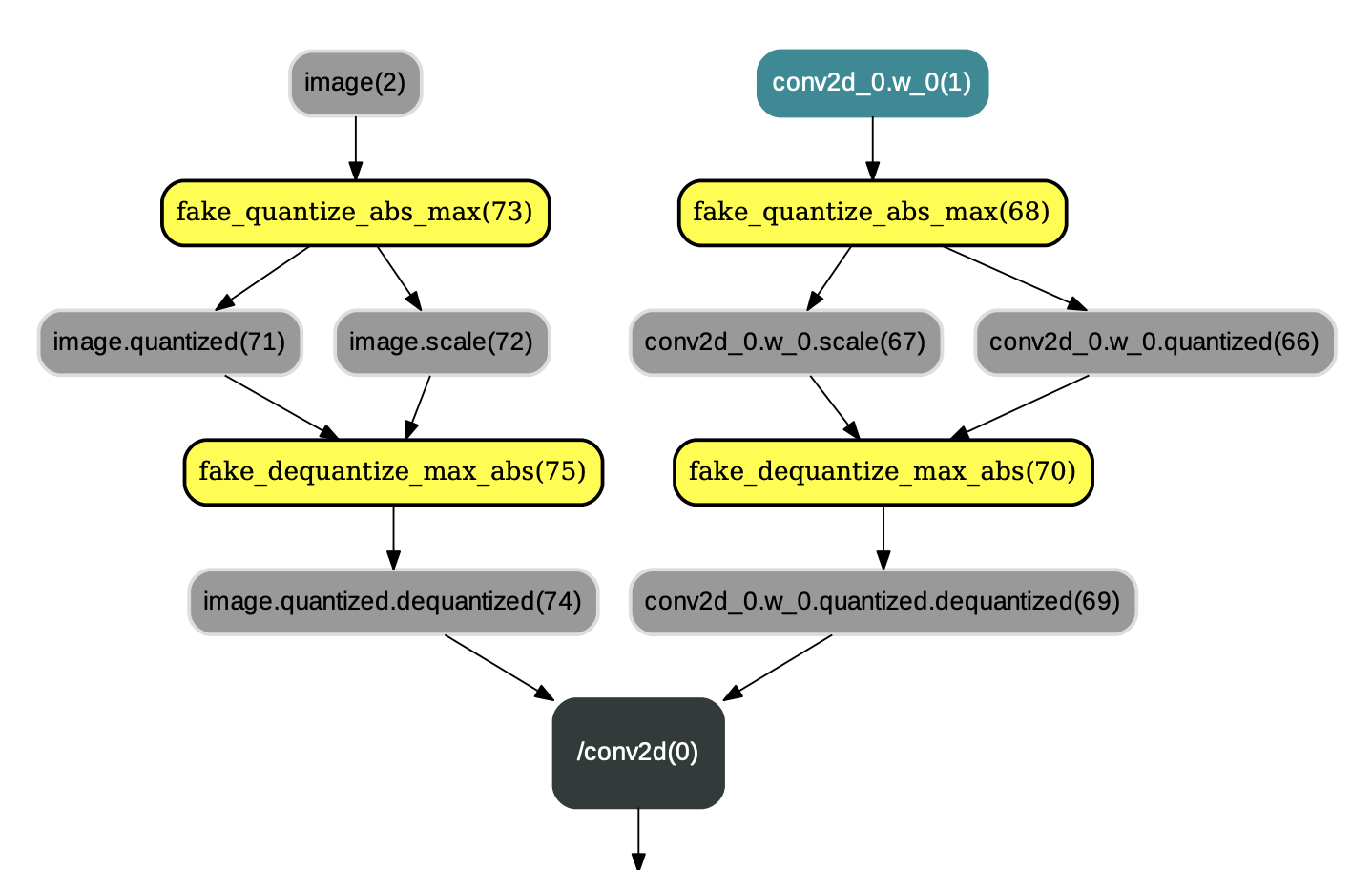

slim/quantization/README.md

0 → 100644

slim/quantization/eval_quant.py

0 → 100644

slim/quantization/train_quant.py

0 → 100644

turtorial/finetune_fast_scnn.md

0 → 100644