Cannot change the Adam learning rate when resuming training from checkpoints. How can I change it?

Created by: lx-rookie

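I am continuing training on new data, starting from Paddle's DB model loaded through checkpoints, and I find that I cannot change the learning rate. When I load the R50 pretrained model through pretrain_weights, this problem does not occur. What causes this, and can the learning rate be changed?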

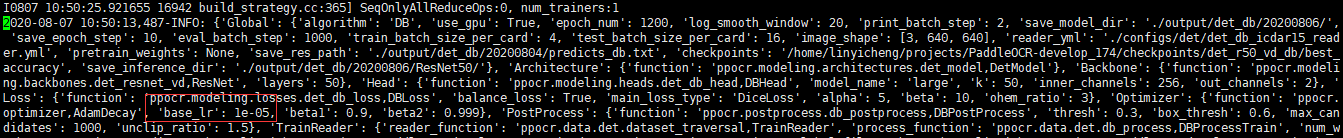

1. When loading the Paddle model through checkpoints, the learning rate I set and the learning rate actually used in training are as follows:

2020-08-07 10:50:13,487-INFO: {'Global': {'algorithm': 'DB', 'use_gpu': True, 'epoch_num': 1200, 'log_smooth_window': 20, 'print_batch_step': 2, 'save_model_dir': './output/det_db/20200806/', 'save_epoch_step': 10, 'eval_batch_step': 1000, 'train_batch_size_per_card': 4, 'test_batch_size_per_card': 16, 'image_shape': [3, 640, 640], 'reader_yml': './configs/det/det_db_icdar15_reader.yml', 'pretrain_weights': None, 'save_res_path': './output/det_db/20200804/predicts_db.txt', 'checkpoints': '/home/linyicheng/projects/PaddleOCR-develop_174/checkpoints/det_r50_vd_db/best_accuracy', 'save_inference_dir': './output/det_db/20200806/ResNet50/'}, 'Architecture': {'function': 'ppocr.modeling.architectures.det_model,DetModel'}, 'Backbone': {'function': 'ppocr.modeling.backbones.det_resnet_vd,ResNet', 'layers': 50}, 'Head': {'function': 'ppocr.modeling.heads.det_db_head,DBHead', 'model_name': 'large', 'k': 50, 'inner_channels': 256, 'out_channels': 2}, 'Loss': {'function': 'ppocr.modeling.losses.det_db_loss,DBLoss', 'balance_loss': True, 'main_loss_type': 'DiceLoss', 'alpha': 5, 'beta': 10, 'ohem_ratio': 3}, 'Optimizer': {'function': 'ppocr.optimizer,AdamDecay', 'base_lr': 1e-05, 'beta1': 0.9, 'beta2': 0.999},

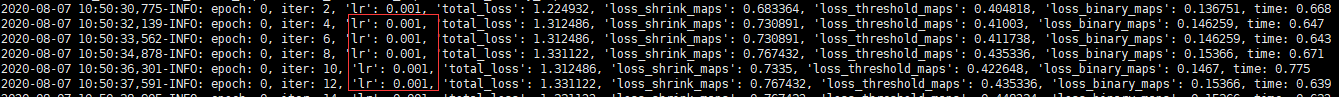

2020-08-07 10:50:41,677-INFO: epoch: 0, iter: 18, 'lr': 0.001, 'total_loss': 1.401952, 'loss_shrink_maps': 0.775264, 'loss_threshold_maps': 0.471727, 'loss_binary_maps': 0.154961, time: 0.649
2020-08-07 10:50:43,021-INFO: epoch: 0, iter: 20, 'lr': 0.001, 'total_loss': 1.44982, 'loss_shrink_maps': 0.804695, 'loss_threshold_maps': 0.474939, 'loss_binary_maps': 0.160919, time: 0.686
2020-08-07 10:50:44,446-INFO: epoch: 0, iter: 22, 'lr': 0.001, 'total_loss': 1.52065, 'loss_shrink_maps': 0.861021, 'loss_threshold_maps': 0.479762, 'loss_binary_maps': 0.17221, time: 0.782
2020-08-07 10:50:45,771-INFO: epoch: 0, iter: 24, 'lr': 0.001, 'total_loss': 1.472782, 'loss_shrink_maps': 0.83159, 'loss_threshold_maps': 0.474939, 'loss_binary_maps': 0.166253, time: 0.694
2020-08-07 10:50:47,053-INFO: epoch: 0, iter: 26, 'lr': 0.001, 'total_loss': 1.472782, 'loss_shrink_maps': 0.83159, 'loss_threshold_maps': 0.474939, 'loss_binary_maps': 0.166253, time: 0.636
2020-08-07 10:50:48,335-INFO: epoch: 0, iter: 28, 'lr': 0.001, 'total_loss': 1.371394, 'loss_shrink_maps': 0.761884, 'loss_threshold_maps': 0.468806, 'loss_binary_maps': 0.152407, time: 0.64

I set the learning rate to 1e-5, but training reports a learning rate of 0.001. Since I am doing incremental training, such a large learning rate will seriously hurt the results.

2. When loading the R50 model parameters through pretrain_weights, I set the learning rate to 0.0001, and the learning rate actually used is also 0.0001, as shown below.

Is there any way to change this learning rate?

2020-08-05 12:44:51,173-INFO: {'Global': {'algorithm': 'DB', 'use_gpu': True, 'epoch_num': 1200, 'log_smooth_window': 20, 'print_batch_step': 2, 'save_model_dir': './output/det_db/20200804/', 'save_epoch_step': 10, 'eval_batch_step': 1000, 'train_batch_size_per_card': 4, 'test_batch_size_per_card': 16, 'image_shape': [3, 640, 640], 'reader_yml': './configs/det/det_db_icdar15_reader.yml', 'pretrain_weights': './pretrain_models/ResNet50_vd_ssld_pretrained/', 'save_res_path': './output/det_db/20200804/predicts_db.txt', 'checkpoints': '/home/linyicheng/projects/PaddleOCR-develop_174/checkpoints/det_r50_vd_db/', 'save_inference_dir': './output/det_db/20200804/ResNet50/'}, 'Architecture': {'function': 'ppocr.modeling.architectures.det_model,DetModel'}, 'Backbone': {'function': 'ppocr.modeling.backbones.det_resnet_vd,ResNet', 'layers': 50}, 'Head': {'function': 'ppocr.modeling.heads.det_db_head,DBHead', 'model_name': 'large', 'k': 50, 'inner_channels': 256, 'out_channels': 2}, 'Loss': {'function': 'ppocr.modeling.losses.det_db_loss,DBLoss', 'balance_loss': True, 'main_loss_type': 'DiceLoss', 'alpha': 5, 'beta': 10, 'ohem_ratio': 3}, 'Optimizer': {'function': 'ppocr.optimizer,AdamDecay', 'base_lr': 0.0001, 'beta1': 0.9, 'beta2': 0.999}, 'PostProcess': {'function': 'ppocr.postprocess.db_postprocess,DBPostProcess', 'thresh': 0.3, 'box_thresh': 0.6, 'max_candidates': 1000, 'unclip_ratio': 1.5},

['lr', 'total_loss', 'loss_shrink_maps', 'loss_threshold_maps', 'loss_binary_maps']
2020-08-05 12:45:05,924-INFO: epoch: 0, iter: 2, 'lr': 1e-04, 'total_loss': 8.828784, 'loss_shrink_maps': 4.39508, 'loss_threshold_maps': 3.579176, 'loss_binary_maps': 0.874548, time: 0.692
2020-08-05 12:45:07,398-INFO: epoch: 0, iter: 4, 'lr': 1e-04, 'total_loss': 8.599646, 'loss_shrink_maps': 4.375061, 'loss_threshold_maps': 3.331243, 'loss_binary_maps': 0.873321, time: 0.658
2020-08-05 12:45:08,916-INFO: epoch: 0, iter: 6, 'lr': 1e-04, 'total_loss': 8.135834, 'loss_shrink_maps': 4.375061, 'loss_threshold_maps': 2.927799, 'loss_binary_maps': 0.873321, time: 0.650
2020-08-05 12:45:10,236-INFO: epoch: 0, iter: 8, 'lr': 1e-04, 'total_loss': 7.975481, 'loss_shrink_maps': 4.383638, 'loss_threshold_maps': 2.772709, 'loss_binary_maps': 0.859874, time: 0.650
2020-08-05 12:45:11,780-INFO: epoch: 0, iter: 10, 'lr': 1e-04, 'total_loss': 7.949251, 'loss_shrink_maps': 4.375061, 'loss_threshold_maps': 2.635275, 'loss_binary_maps': 0.854987, time: 0.671
2020-08-05 12:45:13,149-INFO: epoch: 0, iter: 12, 'lr': 1e-04, 'total_loss': 7.717634, 'loss_shrink_maps': 4.34816, 'loss_threshold_maps': 2.622825, 'loss_binary_maps': 0.852897, time: 0.692
2020-08-05 12:45:14,496-INFO: epoch: 0, iter: 14, 'lr': 1e-04, 'total_loss': 7.64561, 'loss_shrink_maps': 4.32402, 'loss_threshold_maps': 2.409074, 'loss_binary_maps': 0.852523, time: 0.669
2020-08-05 12:45:15,856-INFO: epoch: 0, iter: 16, 'lr': 1e-04, 'total_loss': 7.3877, 'loss_shrink_maps': 4.293646, 'loss_threshold_maps': 2.1307, 'loss_binary_maps': 0.835358, time: 0.692
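For anyone trying to reproduce this, the mismatch between the configured and the logged learning rate can be checked mechanically instead of by eye. The following is only a minimal sketch: it assumes the log format shown in this post (`'base_lr': ...` in the dumped config and `'lr': ...` in each training line), and the function names `configured_lr`, `logged_lrs`, and `lr_mismatches` are made up for this example and are not part of PaddleOCR.

```python
import math
import re

# Learning rate printed in each training line, e.g. "'lr': 0.001".
# The leading quote keeps this from matching "'base_lr': ..." in the config dump.
LR_PATTERN = re.compile(r"'lr':\s*([0-9.eE+-]+)")
# Configured base learning rate in the dumped config, e.g. "'base_lr': 1e-05".
BASE_LR_PATTERN = re.compile(r"'base_lr':\s*([0-9.eE+-]+)")


def configured_lr(config_line):
    """Return the base_lr found in a dumped config line, or None if absent."""
    match = BASE_LR_PATTERN.search(config_line)
    return float(match.group(1)) if match else None


def logged_lrs(log_text):
    """Return every learning rate reported in the training log text, in order."""
    return [float(value) for value in LR_PATTERN.findall(log_text)]


def lr_mismatches(config_line, log_text, rel_tol=1e-6):
    """Return the logged learning rates that differ from the configured base_lr."""
    expected = configured_lr(config_line)
    if expected is None:
        raise ValueError("no 'base_lr' entry found in the config line")
    return [
        lr for lr in logged_lrs(log_text)
        if not math.isclose(lr, expected, rel_tol=rel_tol)
    ]


if __name__ == "__main__":
    # Shortened excerpts of the checkpoints run from this post.
    config = "'Optimizer': {'function': 'ppocr.optimizer,AdamDecay', 'base_lr': 1e-05}"
    log = (
        "epoch: 0, iter: 18, 'lr': 0.001, 'total_loss': 1.401952\n"
        "epoch: 0, iter: 20, 'lr': 0.001, 'total_loss': 1.44982\n"
    )
    print("configured:", configured_lr(config))
    print("mismatching logged values:", lr_mismatches(config, log))
```

Run against the checkpoints log, every logged value is reported as a mismatch; run against the pretrain_weights log, the list comes back empty. Note that this only compares against a constant base_lr, so it would also flag a run that uses a learning rate schedule.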