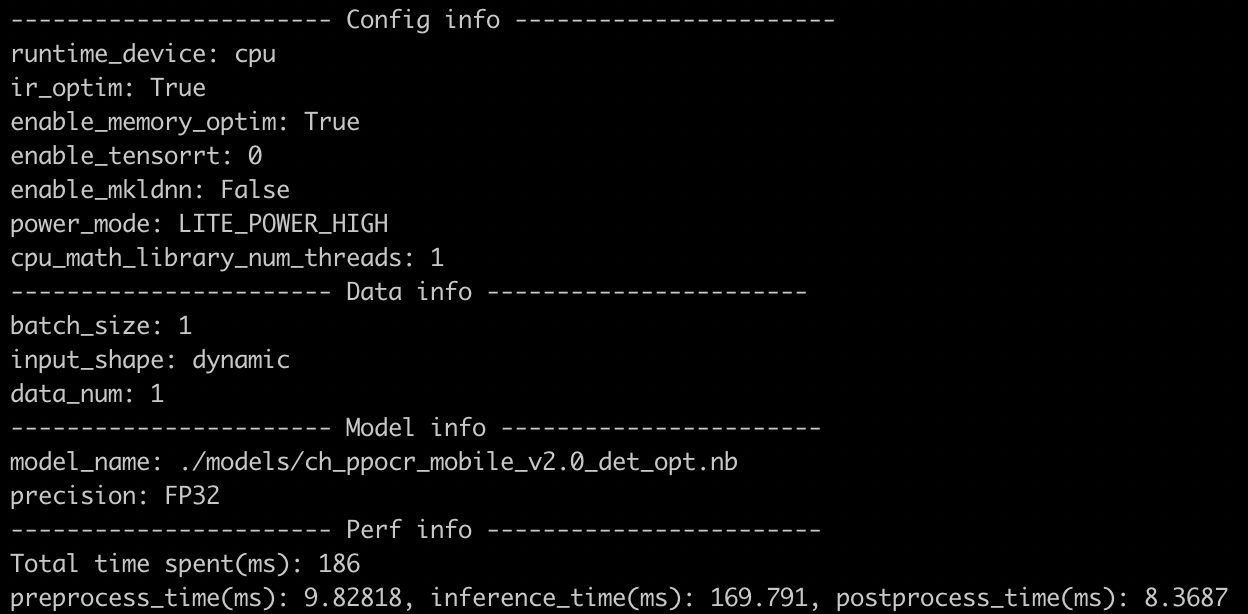

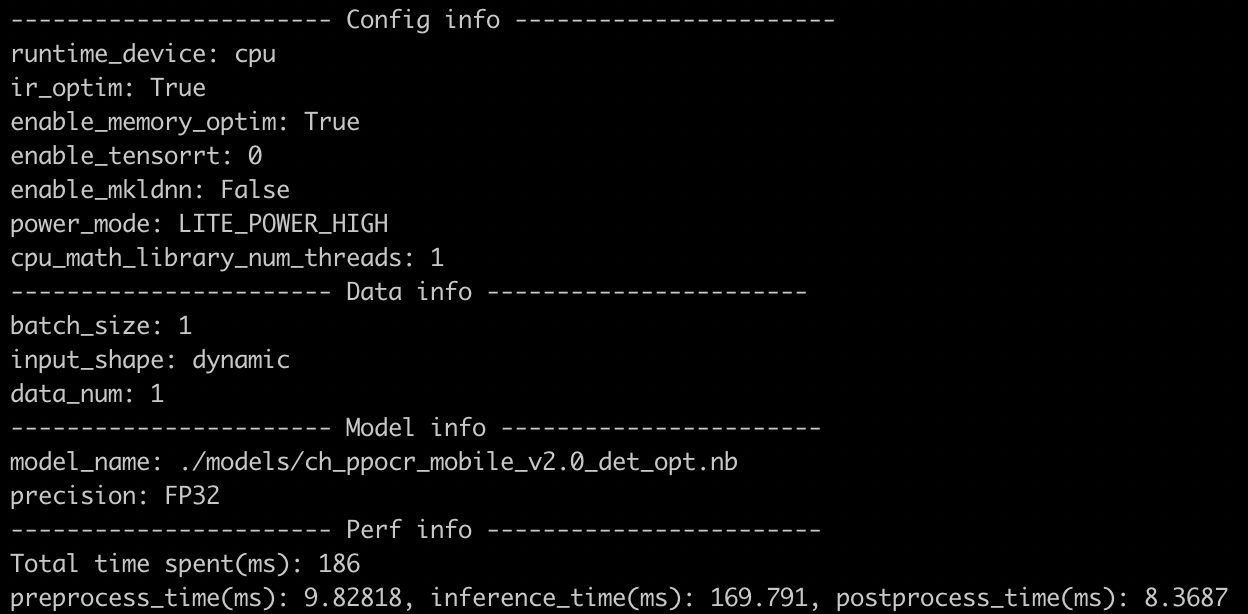

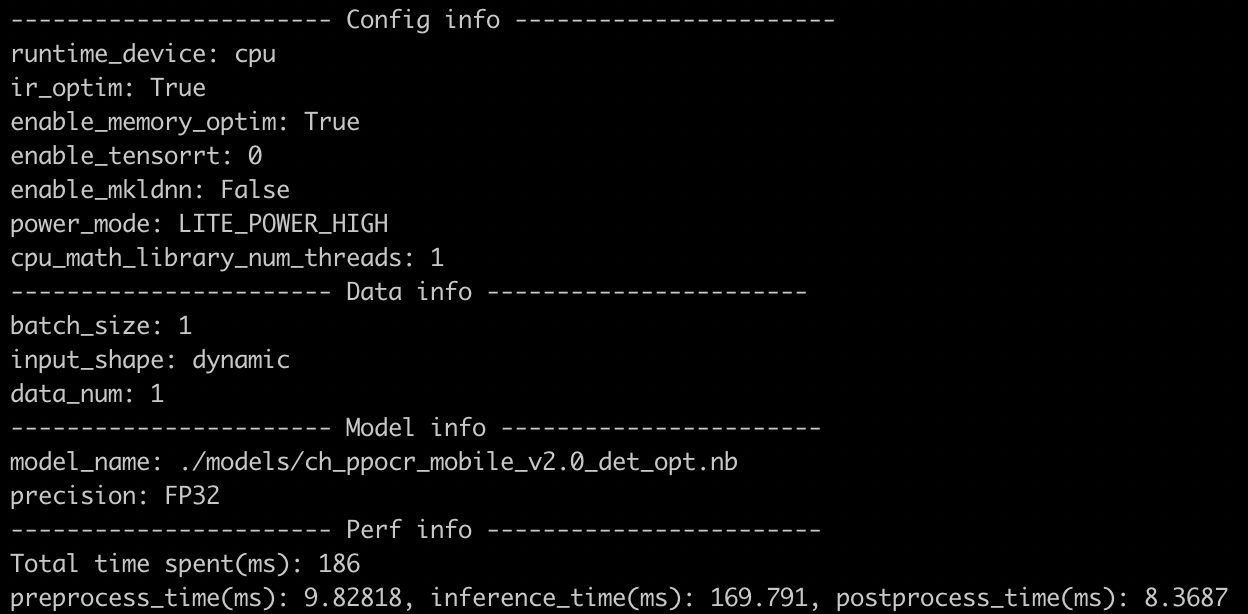

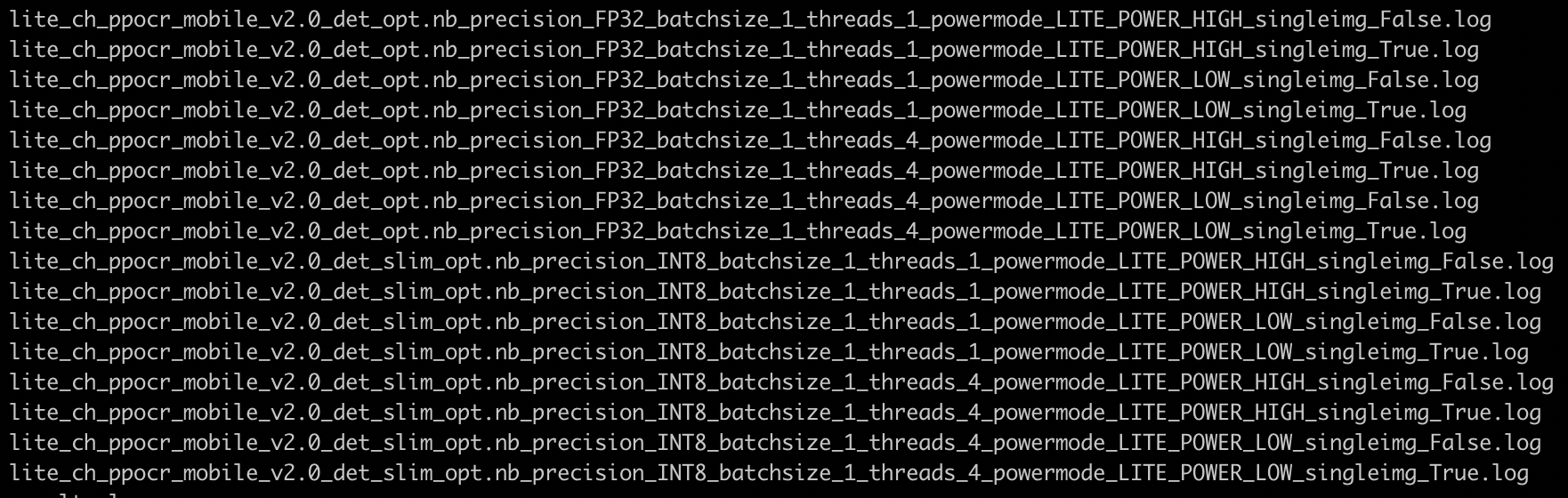

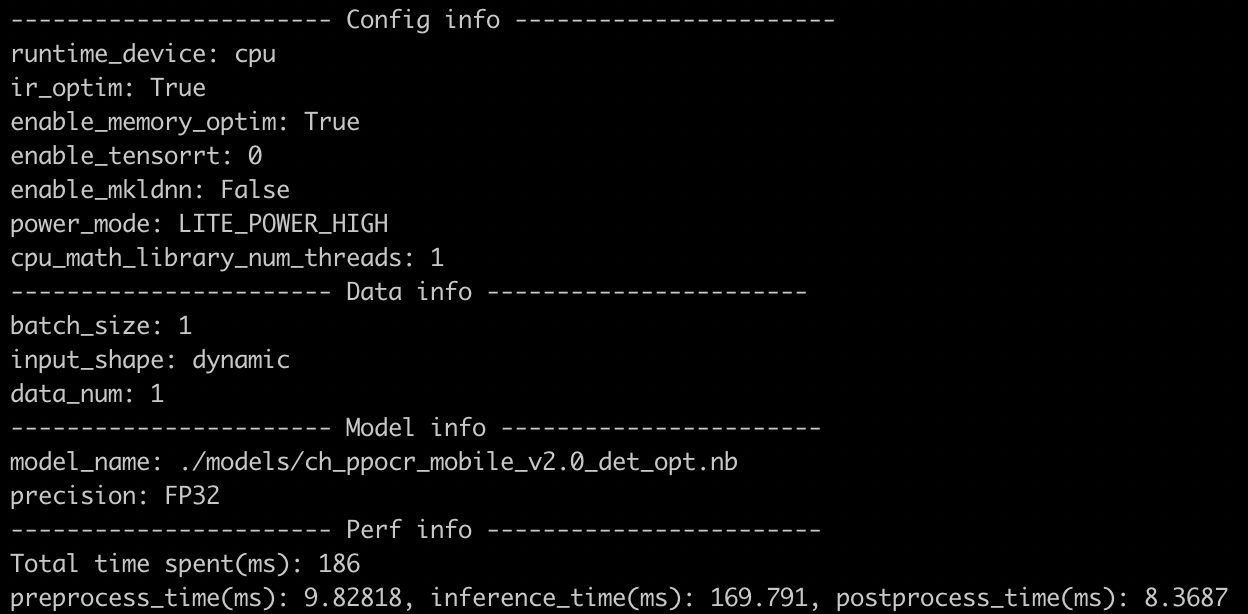

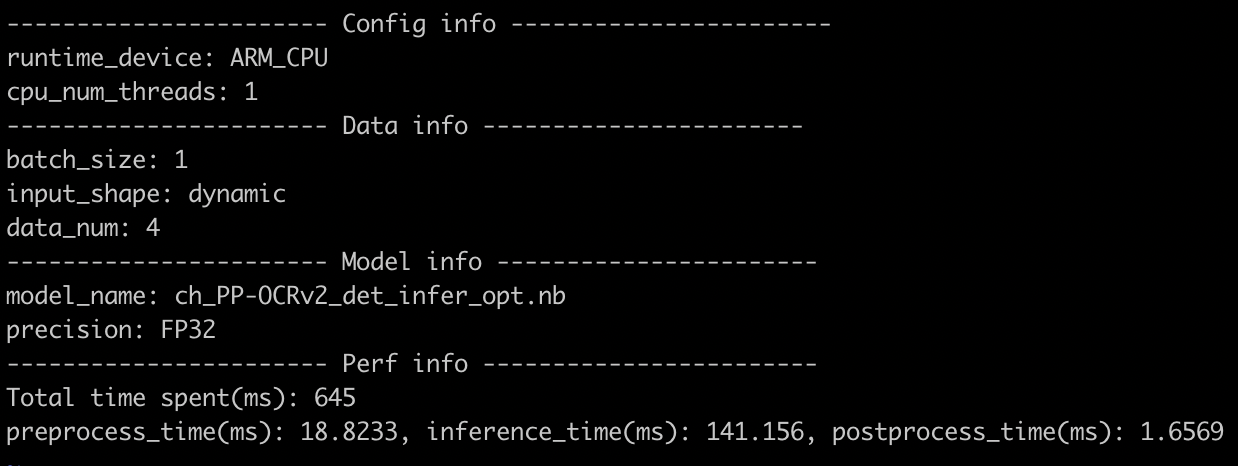

update tipc lite demo

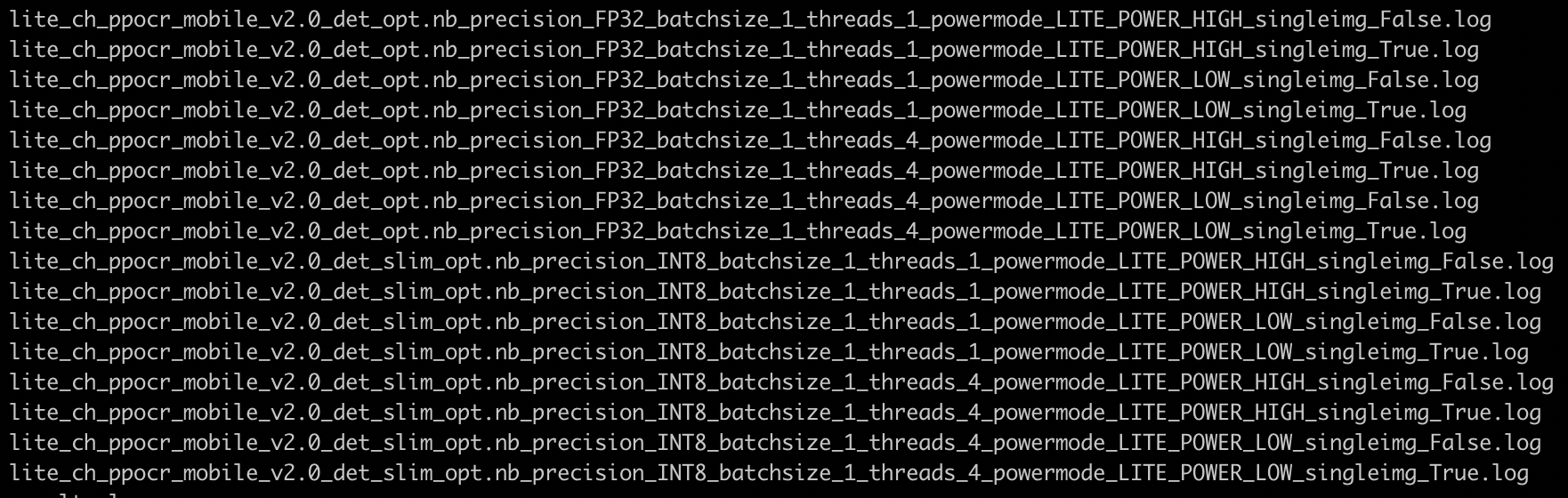

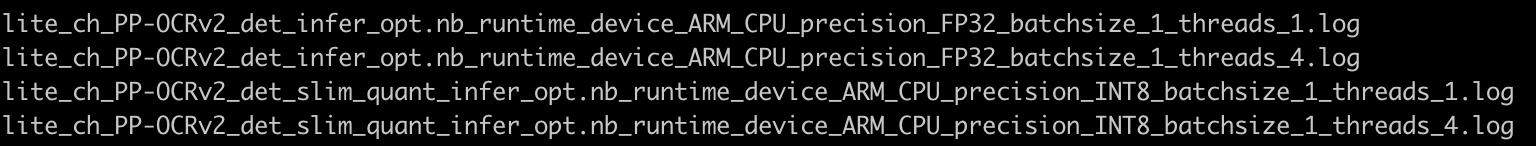

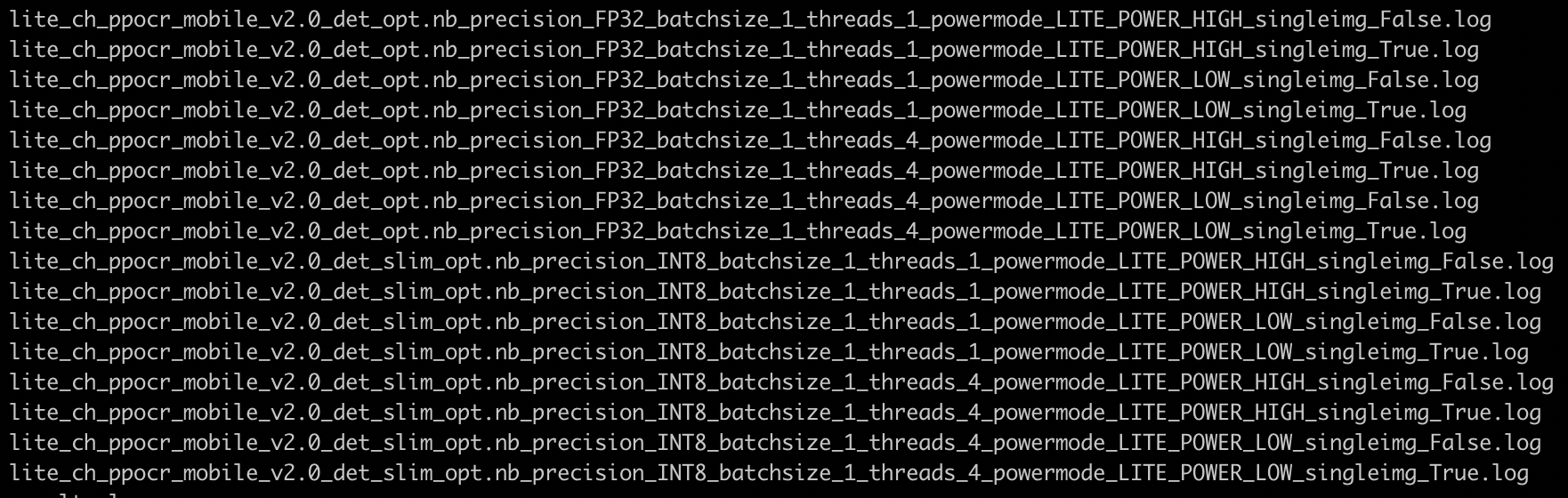

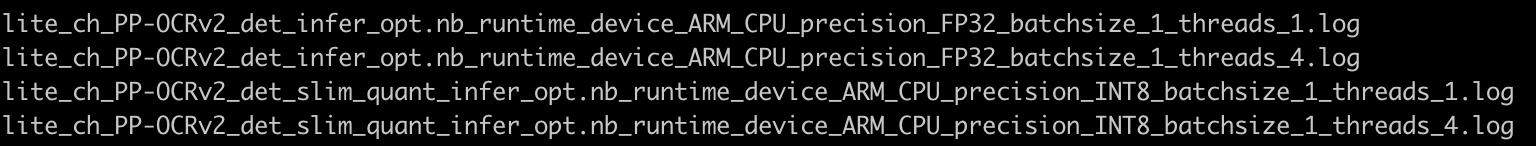

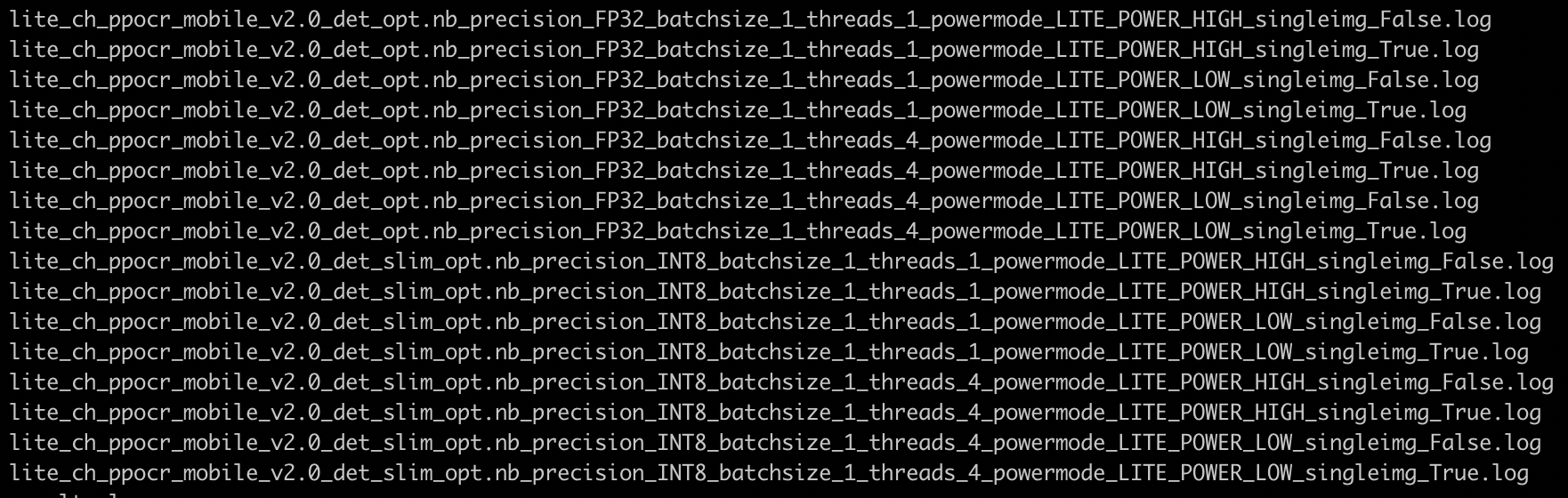

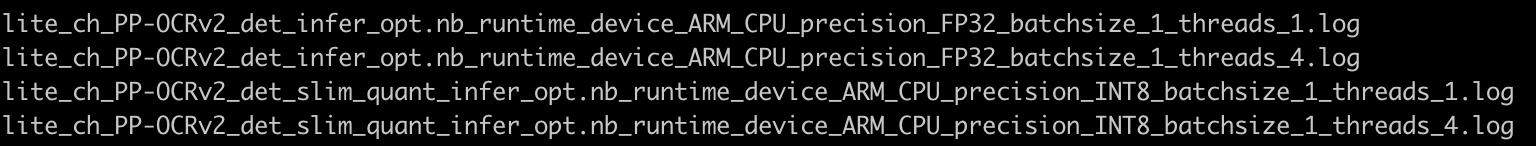

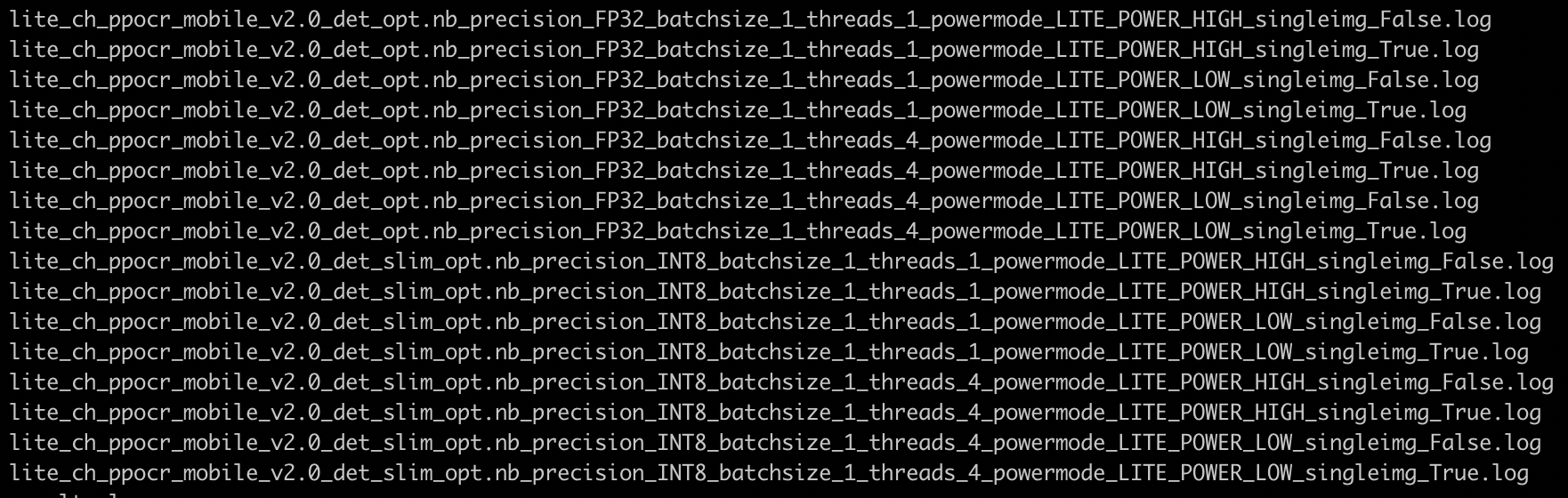

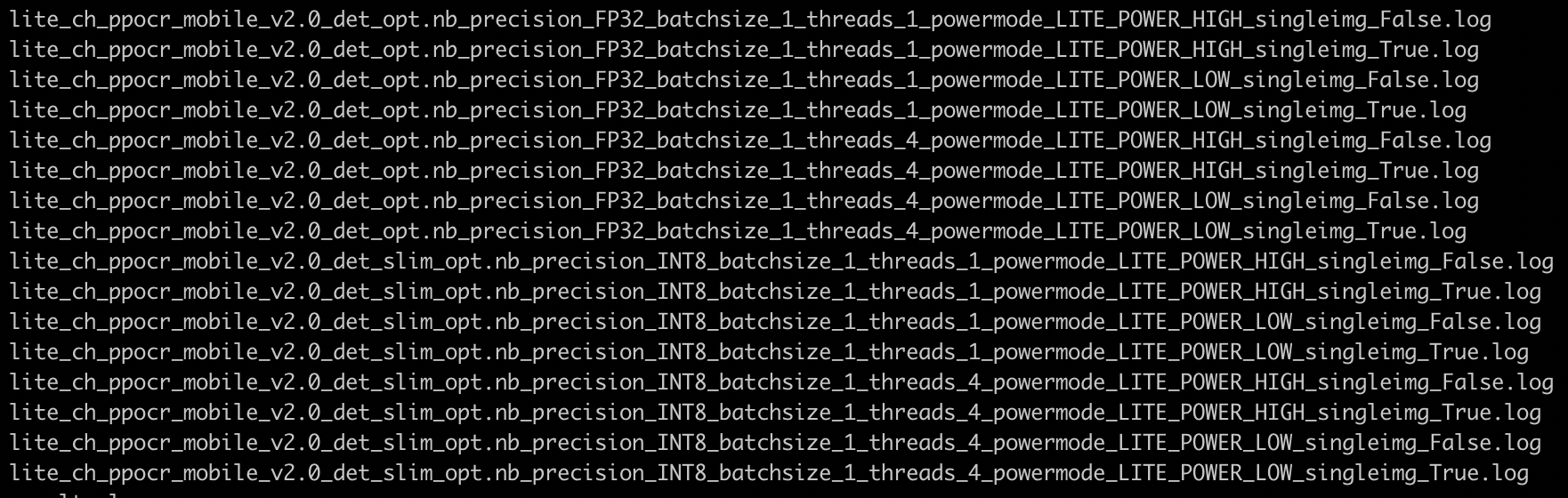

test_tipc/prepare_lite.sh

0 → 100644

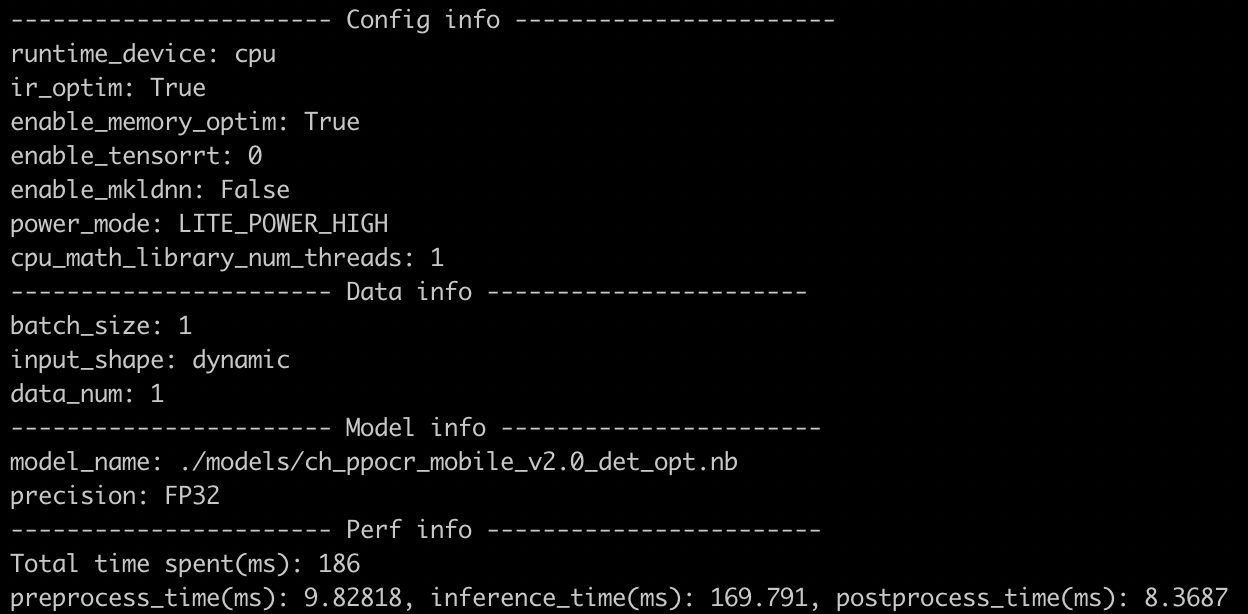

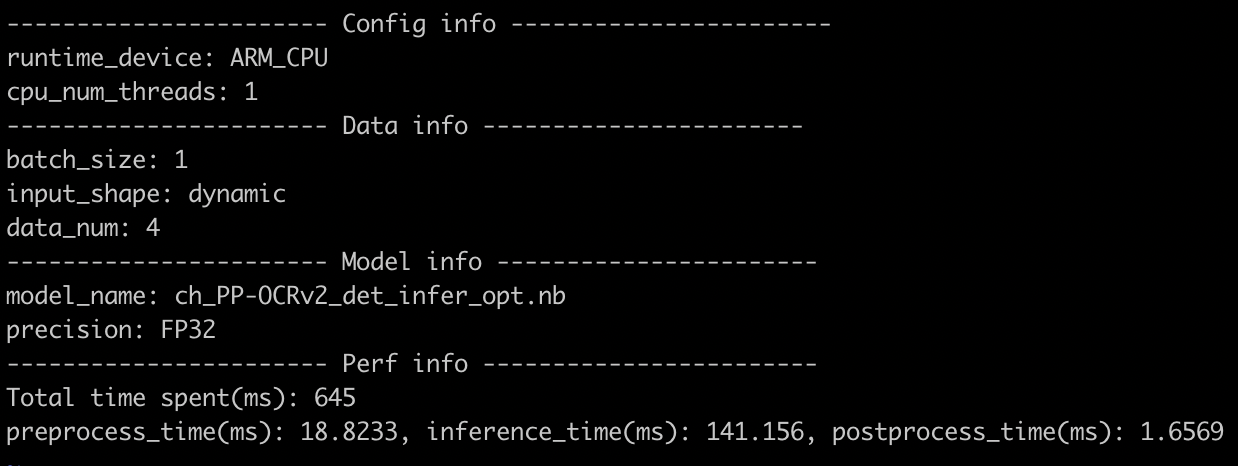

289.9 KB

209.8 KB

775.5 KB

168.7 KB