Merge branch 'dygraph' into fix_prepare

Showing

File moved

File moved

File moved

configs/rec/rec_mtb_nrtr.yml

0 → 100644

The image diff is too large to display. You can view the blob instead.

doc/PaddleOCR_log.png

0 → 100644

75.5 KB

80.1 KB

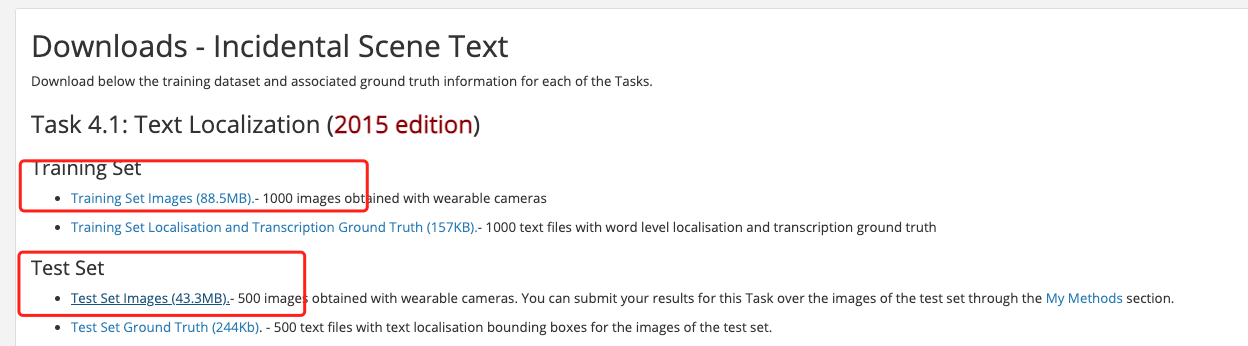

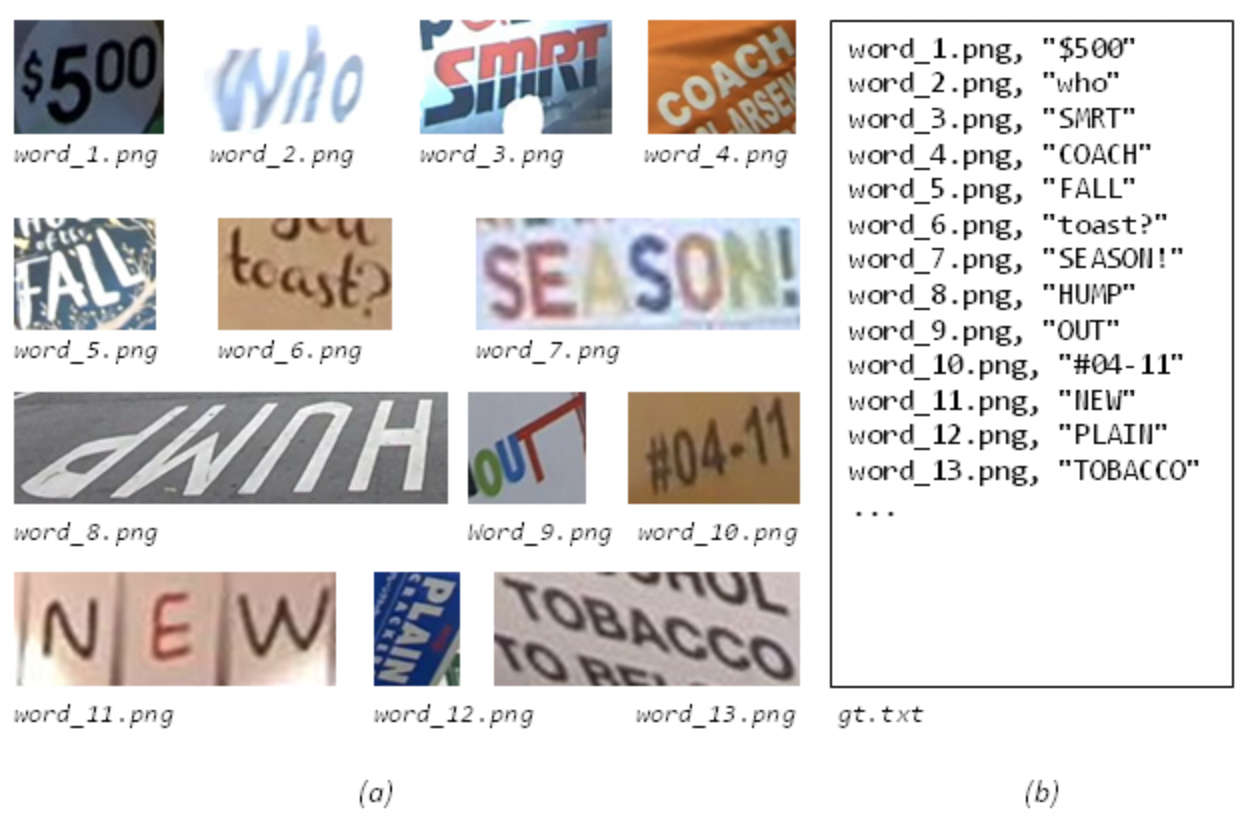

doc/datasets/icdar_rec.png

0 → 100644

921.4 KB

This diff is collapsed.

doc/doc_ch/environment.md

0 → 100644

doc/doc_ch/inference_ppocr.md

0 → 100644

doc/doc_ch/models_and_config.md

0 → 100644

doc/doc_ch/paddleOCR_overview.md

0 → 100644

doc/doc_ch/training.md

0 → 100644

doc/doc_en/environment_en.md

0 → 100644

doc/doc_en/inference_ppocr_en.md

0 → 100755

This diff is collapsed.

doc/doc_en/training_en.md

0 → 100644

This diff is collapsed.

192.4 KB

This diff is collapsed.

This diff is collapsed.

This diff is collapsed.

This diff is collapsed.

This diff is collapsed.

This diff is collapsed.

doc/install/mac/conda_create.png

0 → 100755

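This diff is collapsed.

This diff is collapsed.

This diff is collapsed.

This diff is collapsed.

This diff is collapsed.

This diff is collapsed.

This diff is collapsed.

This diff is collapsed.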

doc/overview.png

0 → 100644

This diff is collapsed.

doc/overview_en.png

0 → 100644

This diff is collapsed.

doc/ppocrv2_framework.jpg

0 → 100644

This diff is collapsed.

This diff is collapsed.

This diff is collapsed.

This diff is collapsed.

ppocr/losses/rec_nrtr_loss.py

0 → 100644

This diff is collapsed.

This diff is collapsed.

This diff is collapsed.

This diff is collapsed.

This diff is collapsed.

This diff is collapsed.

tests/compare_results.py

0 → 100644

This diff is collapsed.

tests/configs/det_mv3_db.yml

0 → 100644

This diff is collapsed.

tests/configs/det_r50_vd_db.yml

0 → 100644

This diff is collapsed.

tests/ocr_det_server_params.txt

0 → 100644

This diff is collapsed.

tests/ocr_ppocr_mobile_params.txt

0 → 100644

This diff is collapsed.

This diff is collapsed.

tests/readme.md

0 → 100644

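This diff is collapsed.

This diff is collapsed.

This diff is collapsed.

This diff is collapsed.

This diff is collapsed.

This diff is collapsed.

This diff is collapsed.

This diff is collapsed.

This diff is collapsed.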