Added functionality to use multiple loggers simultaneously, and added English documentation explaining how to use them.

doc/doc_en/logging_en.md (new file, mode 100644)

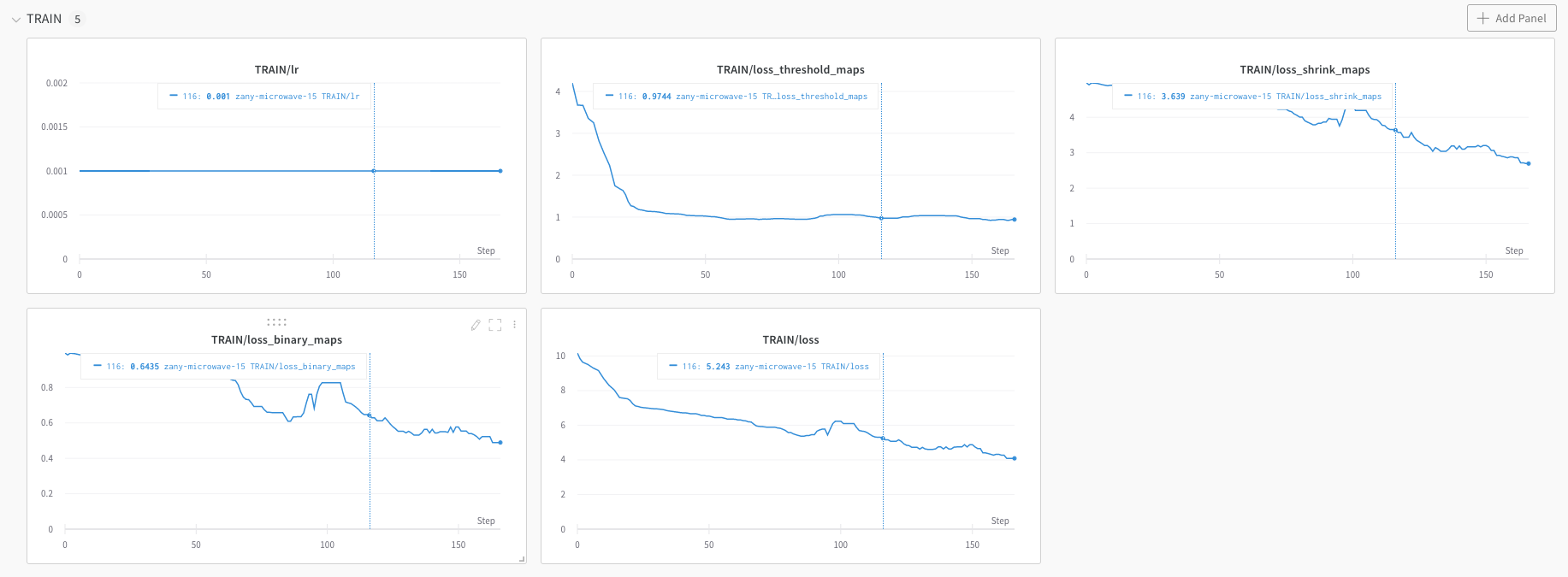

doc/imgs_en/wandb_metrics.png (new file, mode 100644, 89.6 KB)

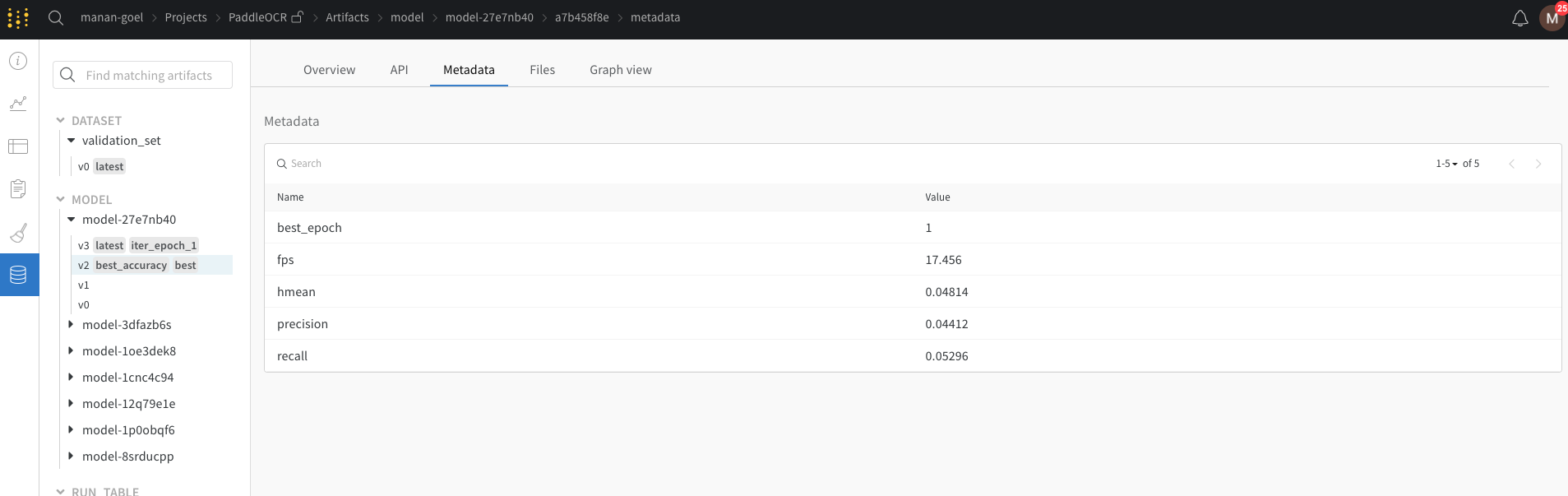

doc/imgs_en/wandb_models.png (new file, mode 100644, 96.0 KB)

ppocr/utils/loggers/loggers.py (new file, mode 100644)
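The contents of ppocr/utils/loggers/loggers.py are not shown in this commit view. As a rough illustration of what "using multiple loggers simultaneously" can mean, here is a minimal composite (fan-out) sketch in which one wrapper forwards every call to each backend in turn. The names `MultiLogger`, `InMemoryLogger` and the `log_metrics`/`close` method signatures are hypothetical assumptions made for this example, not the file's actual API.

```python
from typing import Dict, List, Optional, Protocol


class MetricLogger(Protocol):
    """Hypothetical interface each backend (e.g. a W&B or VisualDL wrapper) is assumed to implement."""

    def log_metrics(self, metrics: Dict[str, float], prefix: Optional[str] = None,
                    step: Optional[int] = None) -> None: ...

    def close(self) -> None: ...


class MultiLogger:
    """Hypothetical composite: forwards every call to each wrapped logger, in order."""

    def __init__(self, loggers: List[MetricLogger]):
        self.loggers = list(loggers)

    def log_metrics(self, metrics, prefix=None, step=None):
        for logger in self.loggers:
            logger.log_metrics(metrics, prefix=prefix, step=step)

    def close(self):
        for logger in self.loggers:
            logger.close()


class InMemoryLogger:
    """Toy backend that only records calls, used here to demonstrate the fan-out."""

    def __init__(self):
        self.records = []
        self.closed = False

    def log_metrics(self, metrics, prefix=None, step=None):
        # Namespace keys as "prefix/key" when a prefix is given, e.g. "eval/acc".
        keyed = {f"{prefix}/{k}" if prefix else k: v for k, v in metrics.items()}
        self.records.append((step, keyed))

    def close(self):
        self.closed = True


# Usage: both backends receive the same metrics from a single call.
a, b = InMemoryLogger(), InMemoryLogger()
log = MultiLogger([a, b])
log.log_metrics({"acc": 0.9}, prefix="eval", step=10)
log.close()
print(a.records)  # [(10, {'eval/acc': 0.9})]
```

The training loop only ever talks to one object, so adding or removing a tracking backend means changing the list passed to the wrapper rather than every logging call site.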