Fixed merge conflict in README.md

deploy/README.md

0 → 100644

deploy/README_ch.md

0 → 100644

26.2 KB

deploy/cpp_infer/include/args.h

0 → 100644

deploy/cpp_infer/readme_ch.md

0 → 100644

deploy/cpp_infer/readme_en.md

Deleted

100644 → 0

deploy/cpp_infer/src/args.cpp

0 → 100644

deploy/lite/readme_ch.md

0 → 100644

deploy/lite/readme_en.md

Deleted

100644 → 0

deploy/paddle2onnx/readme_ch.md

0 → 100644

deploy/paddlejs/README.md

0 → 100644

deploy/paddlejs/README_ch.md

0 → 100644

deploy/paddlejs/paddlejs_demo.gif

0 → 100644

553.7 KB

doc/PPOCR.pdf

Deleted

100644 → 0

文件已删除

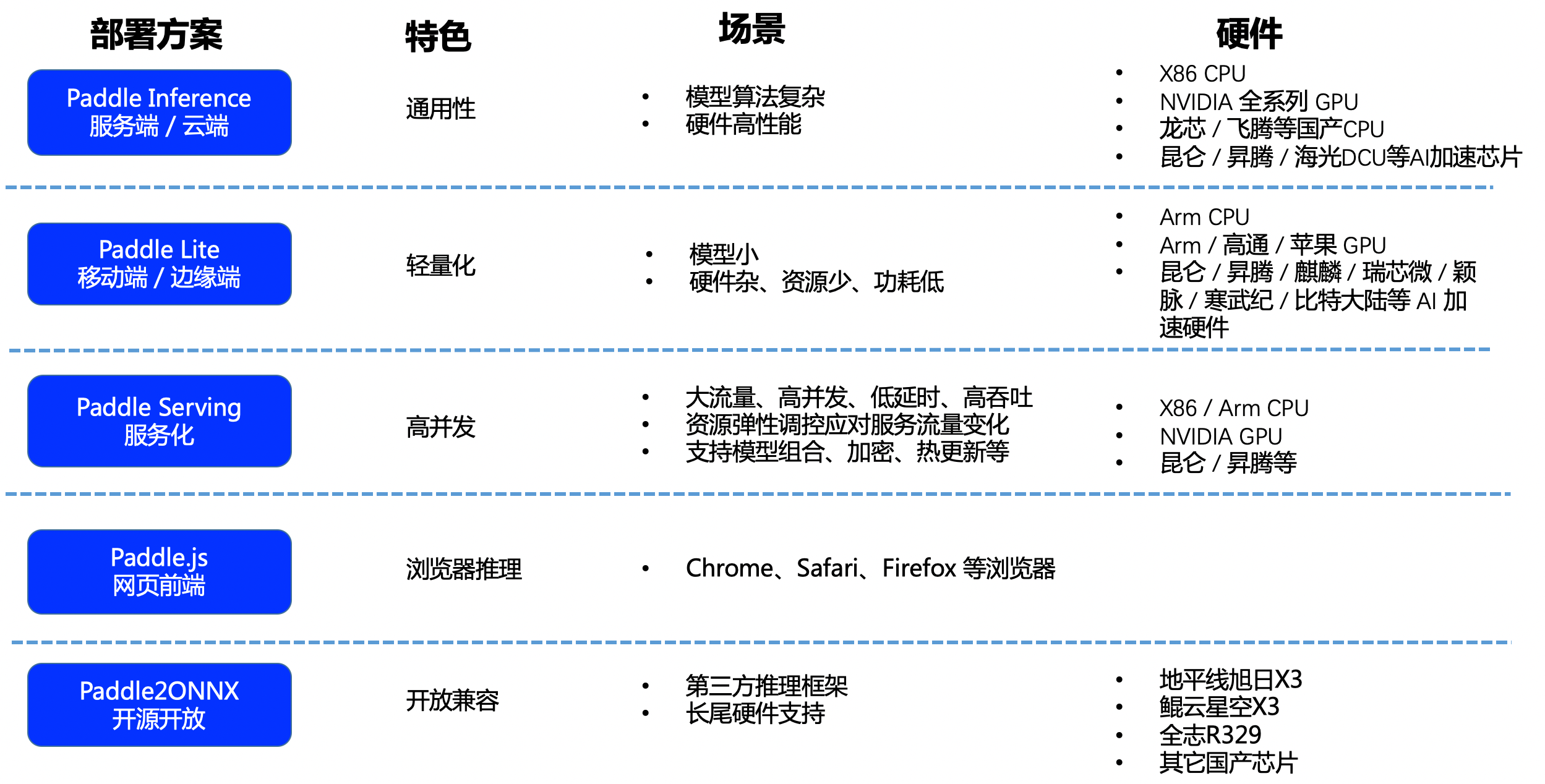

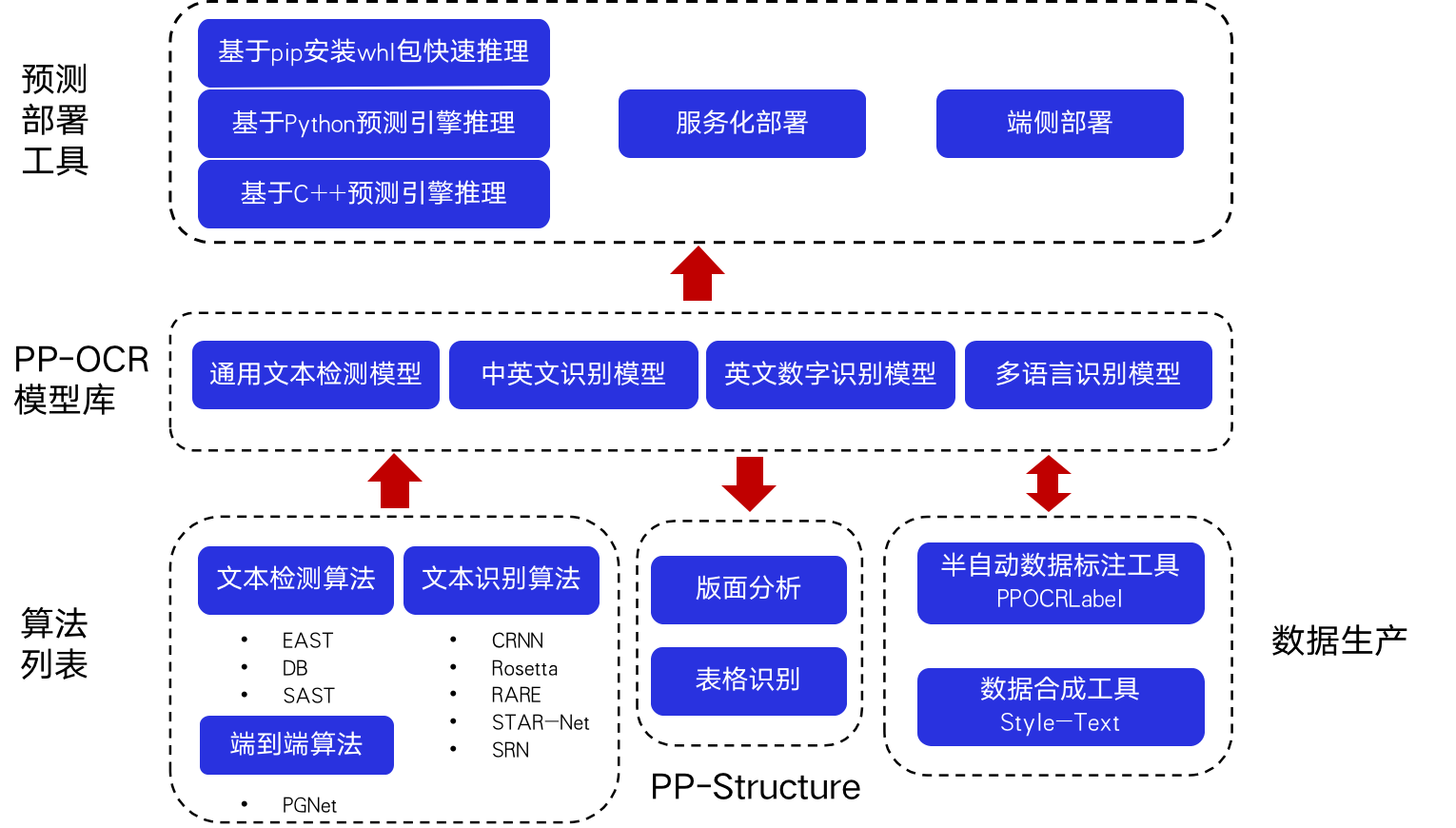

doc/deployment.png

0 → 100644

992.0 KB

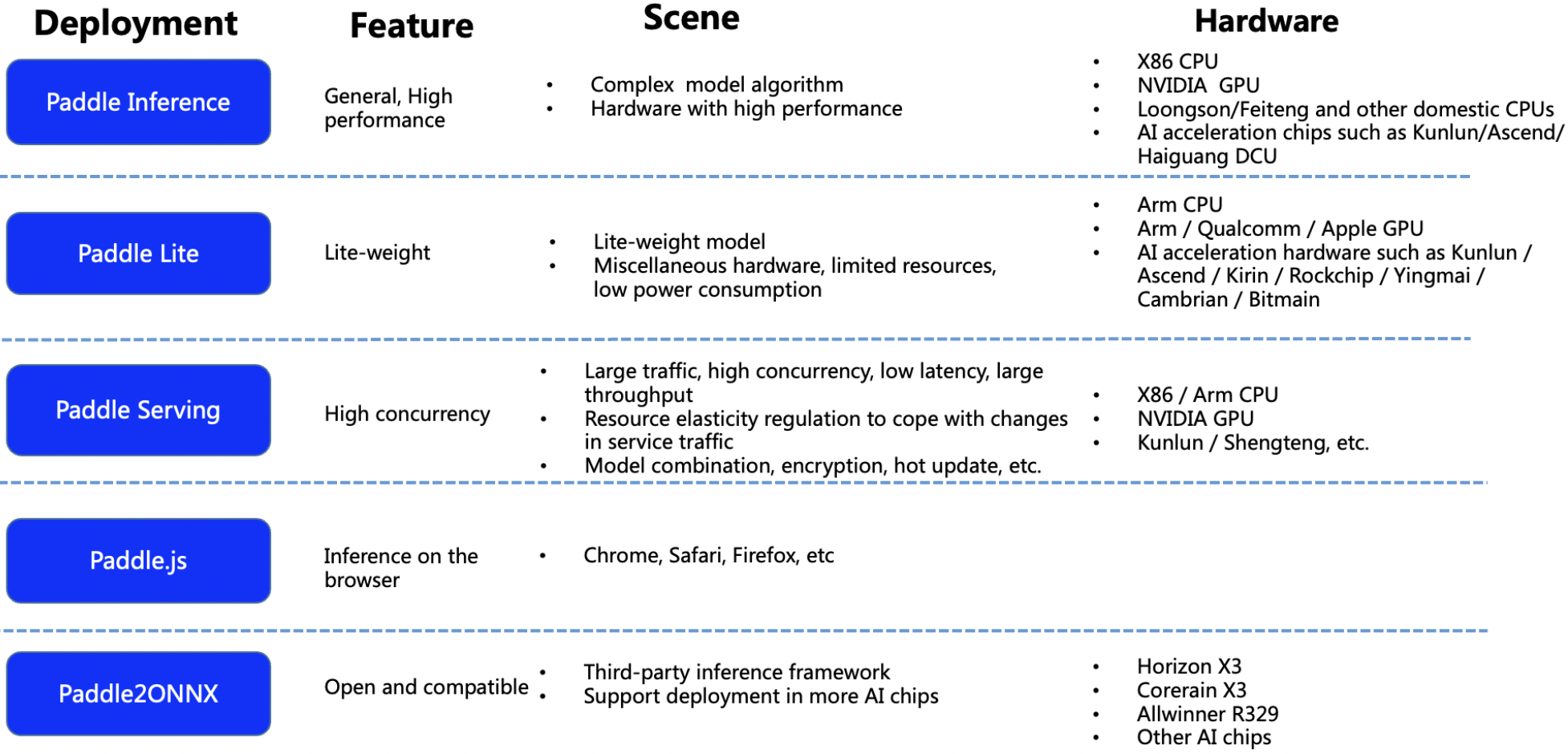

doc/deployment_en.png

0 → 100644

650.2 KB

doc/doc_ch/algorithm.md

0 → 100644

doc/doc_ch/algorithm_det_db.md

0 → 100644

doc/doc_ch/android_demo.md

Deleted

100644 → 0

doc/doc_ch/application.md

0 → 100644

doc/doc_ch/docvqa_datasets.md

0 → 100644

doc/doc_ch/layout_datasets.md

0 → 100644

doc/doc_ch/ocr_book.md

0 → 100644

doc/doc_ch/ppocr_introduction.md

0 → 100644

doc/doc_ch/table_datasets.md

0 → 100644

doc/doc_en/algorithm_det_db_en.md

0 → 100644

doc/doc_en/algorithm_en.md

0 → 100644

doc/doc_en/android_demo_en.md

Deleted

100644 → 0

doc/doc_en/ocr_book_en.md

0 → 100644

此差异已折叠。

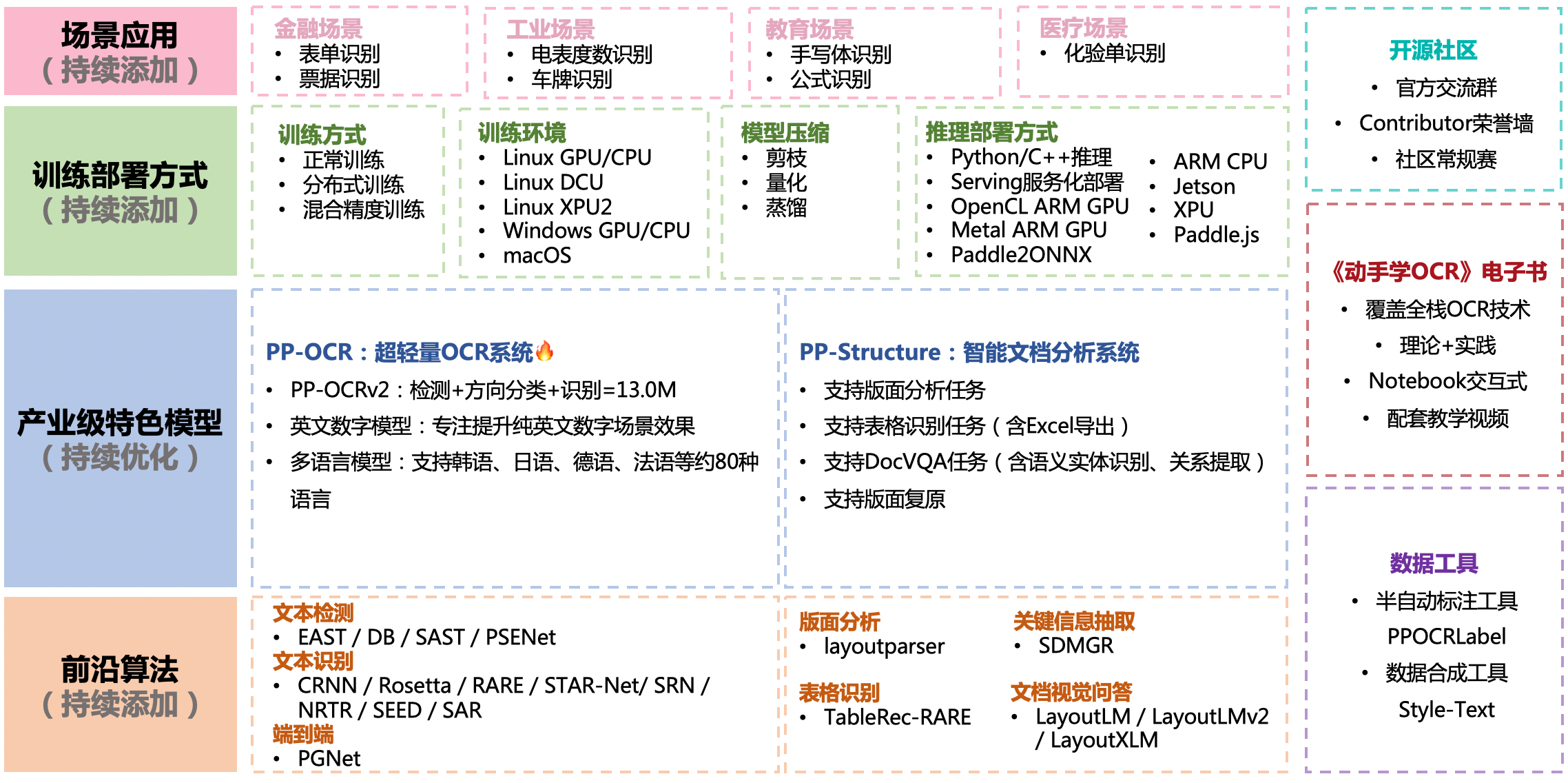

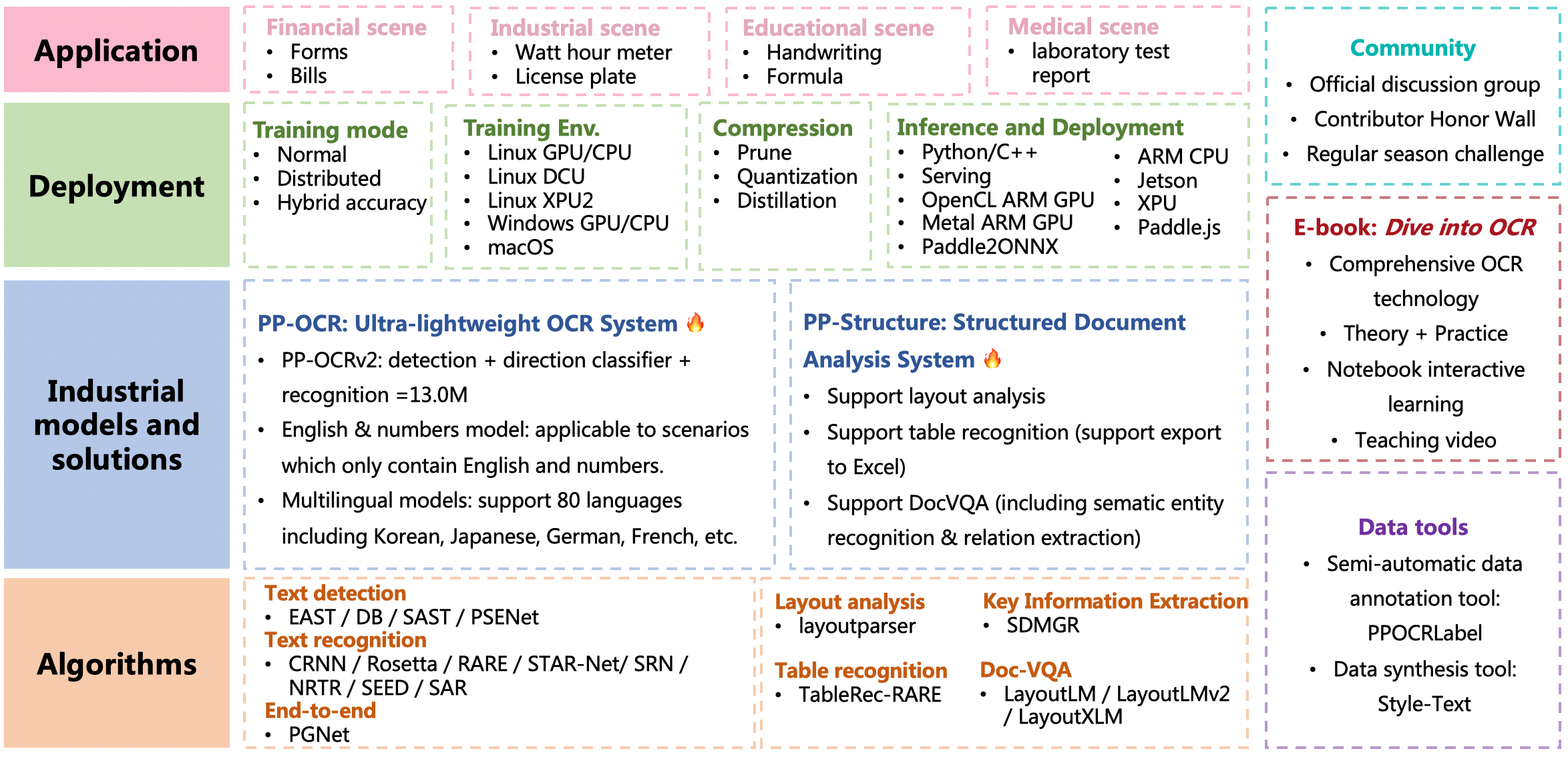

doc/features.png

0 → 100644

1.1 MB

doc/features_en.png

0 → 100644

1.2 MB

doc/ocr-android-easyedge.png

Deleted

100644 → 0

291.8 KB

doc/overview.png

Deleted

100644 → 0

142.8 KB

ppstructure/docs/inference.md

0 → 100644

ppstructure/docs/inference_en.md

0 → 100644

ppstructure/docs/quickstart_en.md

0 → 100644

File moved (×6)
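The mode pairs in the list above (for example `0 → 100644`) appear to follow Git's octal file-mode notation: `100644` is a regular, non-executable file, and `0` indicates that the path does not exist on that side of the diff, so `0 → 100644` marks an added file and `100644 → 0` a deleted one. The sketch below is a hypothetical helper (`classify_mode_change` is not part of this repository) that decodes such a pair, assuming only the common Git modes listed in the table.

```python
# Hypothetical helper, not part of this repository: decode an "old → new"
# mode pair as shown on the commit page. Assumes Git's octal file modes,
# with "0" meaning "path absent on this side of the diff".

GIT_MODES = {
    "100644": "regular file",
    "100755": "executable file",
    "120000": "symbolic link",
    "160000": "submodule (gitlink)",
}


def describe(mode: str) -> str:
    """Map a Git mode string to a readable name, falling back to the raw value."""
    return GIT_MODES.get(mode, "unknown mode " + mode)


def classify_mode_change(entry: str) -> str:
    """Return a human-readable description of an 'old → new' mode pair."""
    # Accept the Unicode arrow used on the page as well as an ASCII "->".
    old, new = (part.strip() for part in entry.replace("->", "→").split("→"))
    old_absent = int(old, 8) == 0
    new_absent = int(new, 8) == 0
    if old_absent and not new_absent:
        return f"added ({describe(new)})"
    if new_absent and not old_absent:
        return f"deleted ({describe(old)})"
    if old == new:
        return f"modified, mode unchanged ({describe(new)})"
    return f"mode changed from {old} to {new}"
```

For instance, `classify_mode_change("100644 → 0")` describes `doc/PPOCR.pdf` in this commit as a deleted regular file.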