
PaddlePaddle / PaddleHub


Commit b8730bce

Authored Feb 23, 2020 by sjtubinlong

Add C++ support for pyramidbox_lite_mobile/server_mask model deployment

Parent: 381424bd

Showing 10 changed files with 1022 additions and 0 deletions

| File | Additions | Deletions |
| ---- | ---- | ---- |
| deploy/demo/mask_detector/CMakeLists.txt | +236 | -0 |
| deploy/demo/mask_detector/CMakeSettings.json | +42 | -0 |
| deploy/demo/mask_detector/README.md | +42 | -0 |
| deploy/demo/mask_detector/docs/linux_build.md | +105 | -0 |
| deploy/demo/mask_detector/docs/windows_build.md | +85 | -0 |
| deploy/demo/mask_detector/export_model.py | +27 | -0 |
| deploy/demo/mask_detector/linux_build.sh | +21 | -0 |
| deploy/demo/mask_detector/main.cc | +80 | -0 |
| deploy/demo/mask_detector/mask_detector.cc | +271 | -0 |
| deploy/demo/mask_detector/mask_detector.h | +113 | -0 |

deploy/demo/mask_detector/CMakeLists.txt (new file, mode 0 → 100644)

```cmake
cmake_minimum_required(VERSION 3.0)
project(PaddleMaskDetector CXX C)

option(WITH_MKL        "Compile demo with MKL/OpenBlas support, default use MKL." ON)
option(WITH_GPU        "Compile demo with GPU/CPU, default use GPU." ON)
option(WITH_STATIC_LIB "Compile demo with static/shared library, default use static." ON)
option(USE_TENSORRT    "Compile demo with TensorRT." OFF)

SET(PADDLE_DIR "" CACHE PATH "Location of libraries")
SET(OPENCV_DIR "" CACHE PATH "Location of libraries")
SET(CUDA_LIB "" CACHE PATH "Location of libraries")

macro(safe_set_static_flag)
    foreach(flag_var
        CMAKE_CXX_FLAGS CMAKE_CXX_FLAGS_DEBUG CMAKE_CXX_FLAGS_RELEASE
        CMAKE_CXX_FLAGS_MINSIZEREL CMAKE_CXX_FLAGS_RELWITHDEBINFO)
      if(${flag_var} MATCHES "/MD")
        string(REGEX REPLACE "/MD" "/MT" ${flag_var} "${${flag_var}}")
      endif(${flag_var} MATCHES "/MD")
    endforeach(flag_var)
endmacro()

if (WITH_MKL)
    ADD_DEFINITIONS(-DUSE_MKL)
endif()

# Quote the expansions so an empty path still yields a valid condition
if (NOT DEFINED PADDLE_DIR OR "${PADDLE_DIR}" STREQUAL "")
    message(FATAL_ERROR "please set PADDLE_DIR with -DPADDLE_DIR=/path/paddle_inference_dir")
endif()

if (NOT DEFINED OPENCV_DIR OR "${OPENCV_DIR}" STREQUAL "")
    message(FATAL_ERROR "please set OPENCV_DIR with -DOPENCV_DIR=/path/opencv")
endif()

include_directories("${CMAKE_SOURCE_DIR}/")
include_directories("${PADDLE_DIR}/")
include_directories("${PADDLE_DIR}/third_party/install/protobuf/include")
include_directories("${PADDLE_DIR}/third_party/install/glog/include")
include_directories("${PADDLE_DIR}/third_party/install/gflags/include")
include_directories("${PADDLE_DIR}/third_party/install/xxhash/include")
if (EXISTS "${PADDLE_DIR}/third_party/install/snappy/include")
    include_directories("${PADDLE_DIR}/third_party/install/snappy/include")
endif()
if(EXISTS "${PADDLE_DIR}/third_party/install/snappystream/include")
    include_directories("${PADDLE_DIR}/third_party/install/snappystream/include")
endif()
include_directories("${PADDLE_DIR}/third_party/install/zlib/include")
include_directories("${PADDLE_DIR}/third_party/boost")
include_directories("${PADDLE_DIR}/third_party/eigen3")

if (EXISTS "${PADDLE_DIR}/third_party/install/snappy/lib")
    link_directories("${PADDLE_DIR}/third_party/install/snappy/lib")
endif()
if(EXISTS "${PADDLE_DIR}/third_party/install/snappystream/lib")
    link_directories("${PADDLE_DIR}/third_party/install/snappystream/lib")
endif()

link_directories("${PADDLE_DIR}/third_party/install/zlib/lib")
link_directories("${PADDLE_DIR}/third_party/install/protobuf/lib")
link_directories("${PADDLE_DIR}/third_party/install/glog/lib")
link_directories("${PADDLE_DIR}/third_party/install/gflags/lib")
link_directories("${PADDLE_DIR}/third_party/install/xxhash/lib")
link_directories("${PADDLE_DIR}/paddle/lib/")
link_directories("${CMAKE_CURRENT_BINARY_DIR}")

if (WIN32)
  include_directories("${PADDLE_DIR}/paddle/fluid/inference")
  include_directories("${PADDLE_DIR}/paddle/include")
  link_directories("${PADDLE_DIR}/paddle/fluid/inference")
  include_directories("${OPENCV_DIR}/build/include")
  include_directories("${OPENCV_DIR}/opencv/build/include")
  link_directories("${OPENCV_DIR}/build/x64/vc14/lib")
else ()
  include_directories("${PADDLE_DIR}/paddle/include")
  link_directories("${PADDLE_DIR}/paddle/lib")
  include_directories("${OPENCV_DIR}/include")
  link_directories("${OPENCV_DIR}/lib64")
endif ()

if (WIN32)
    add_definitions("/DGOOGLE_GLOG_DLL_DECL=")
    set(CMAKE_C_FLAGS_DEBUG     "${CMAKE_C_FLAGS_DEBUG} /bigobj /MTd")
    set(CMAKE_C_FLAGS_RELEASE   "${CMAKE_C_FLAGS_RELEASE} /bigobj /MT")
    set(CMAKE_CXX_FLAGS_DEBUG   "${CMAKE_CXX_FLAGS_DEBUG} /bigobj /MTd")
    set(CMAKE_CXX_FLAGS_RELEASE "${CMAKE_CXX_FLAGS_RELEASE} /bigobj /MT")
    if (WITH_STATIC_LIB)
        safe_set_static_flag()
        add_definitions(-DSTATIC_LIB)
    endif()
else()
    # -O2 (capital O) is the optimization level; lowercase -o names the output file
    set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -O2 -fopenmp -std=c++11")
    set(CMAKE_STATIC_LIBRARY_PREFIX "")
endif()

# TODO let users define cuda lib path
if (WITH_GPU)
    if (NOT DEFINED CUDA_LIB OR "${CUDA_LIB}" STREQUAL "")
        message(FATAL_ERROR "please set CUDA_LIB with -DCUDA_LIB=/path/cuda-8.0/lib64")
    endif()
    if (NOT WIN32)
        if (NOT DEFINED CUDNN_LIB)
            message(FATAL_ERROR "please set CUDNN_LIB with -DCUDNN_LIB=/path/cudnn_v7.4/cuda/lib64")
        endif()
    endif(NOT WIN32)
endif()

if (NOT WIN32)
  if (USE_TENSORRT AND WITH_GPU)
      include_directories("${PADDLE_DIR}/third_party/install/tensorrt/include")
      link_directories("${PADDLE_DIR}/third_party/install/tensorrt/lib")
  endif()
endif(NOT WIN32)

if (NOT WIN32)
    set(NGRAPH_PATH "${PADDLE_DIR}/third_party/install/ngraph")
    if(EXISTS ${NGRAPH_PATH})
        include(GNUInstallDirs)
        include_directories("${NGRAPH_PATH}/include")
        link_directories("${NGRAPH_PATH}/${CMAKE_INSTALL_LIBDIR}")
        set(NGRAPH_LIB ${NGRAPH_PATH}/${CMAKE_INSTALL_LIBDIR}/libngraph${CMAKE_SHARED_LIBRARY_SUFFIX})
    endif()
endif()

if(WITH_MKL)
  include_directories("${PADDLE_DIR}/third_party/install/mklml/include")
  if (WIN32)
    set(MATH_LIB ${PADDLE_DIR}/third_party/install/mklml/lib/mklml.lib
            ${PADDLE_DIR}/third_party/install/mklml/lib/libiomp5md.lib)
  else ()
    set(MATH_LIB ${PADDLE_DIR}/third_party/install/mklml/lib/libmklml_intel${CMAKE_SHARED_LIBRARY_SUFFIX}
            ${PADDLE_DIR}/third_party/install/mklml/lib/libiomp5${CMAKE_SHARED_LIBRARY_SUFFIX})
    execute_process(COMMAND cp -r ${PADDLE_DIR}/third_party/install/mklml/lib/libmklml_intel${CMAKE_SHARED_LIBRARY_SUFFIX} /usr/lib)
  endif ()
  set(MKLDNN_PATH "${PADDLE_DIR}/third_party/install/mkldnn")
  if(EXISTS ${MKLDNN_PATH})
    include_directories("${MKLDNN_PATH}/include")
    if (WIN32)
      set(MKLDNN_LIB ${MKLDNN_PATH}/lib/mkldnn.lib)
    else ()
      set(MKLDNN_LIB ${MKLDNN_PATH}/lib/libmkldnn.so.0)
    endif ()
  endif()
else()
  set(MATH_LIB ${PADDLE_DIR}/third_party/install/openblas/lib/libopenblas${CMAKE_STATIC_LIBRARY_SUFFIX})
endif()

if (WIN32)
    if(EXISTS "${PADDLE_DIR}/paddle/fluid/inference/libpaddle_fluid${CMAKE_STATIC_LIBRARY_SUFFIX}")
        set(DEPS ${PADDLE_DIR}/paddle/fluid/inference/libpaddle_fluid${CMAKE_STATIC_LIBRARY_SUFFIX})
    else()
        set(DEPS ${PADDLE_DIR}/paddle/lib/libpaddle_fluid${CMAKE_STATIC_LIBRARY_SUFFIX})
    endif()
endif()

if(WITH_STATIC_LIB)
    set(DEPS ${PADDLE_DIR}/paddle/lib/libpaddle_fluid${CMAKE_STATIC_LIBRARY_SUFFIX})
else()
    set(DEPS ${PADDLE_DIR}/paddle/lib/libpaddle_fluid${CMAKE_SHARED_LIBRARY_SUFFIX})
endif()

if (NOT WIN32)
    set(DEPS ${DEPS}
        ${MATH_LIB} ${MKLDNN_LIB}
        glog gflags protobuf z xxhash)
    if(EXISTS "${PADDLE_DIR}/third_party/install/snappystream/lib")
        set(DEPS ${DEPS} snappystream)
    endif()
    if (EXISTS "${PADDLE_DIR}/third_party/install/snappy/lib")
        set(DEPS ${DEPS} snappy)
    endif()
else()
    set(DEPS ${DEPS}
        ${MATH_LIB} ${MKLDNN_LIB}
        opencv_world346 glog gflags_static libprotobuf zlibstatic xxhash)
    set(DEPS ${DEPS} libcmt shlwapi)
    if (EXISTS "${PADDLE_DIR}/third_party/install/snappy/lib")
        set(DEPS ${DEPS} snappy)
    endif()
    if(EXISTS "${PADDLE_DIR}/third_party/install/snappystream/lib")
        set(DEPS ${DEPS} snappystream)
    endif()
endif(NOT WIN32)

if(WITH_GPU)
  if(NOT WIN32)
    if (USE_TENSORRT)
      set(DEPS ${DEPS} ${PADDLE_DIR}/third_party/install/tensorrt/lib/libnvinfer${CMAKE_STATIC_LIBRARY_SUFFIX})
      set(DEPS ${DEPS} ${PADDLE_DIR}/third_party/install/tensorrt/lib/libnvinfer_plugin${CMAKE_STATIC_LIBRARY_SUFFIX})
    endif()
    set(DEPS ${DEPS} ${CUDA_LIB}/libcudart${CMAKE_SHARED_LIBRARY_SUFFIX})
    set(DEPS ${DEPS} ${CUDNN_LIB}/libcudnn${CMAKE_SHARED_LIBRARY_SUFFIX})
  else()
    set(DEPS ${DEPS} ${CUDA_LIB}/cudart${CMAKE_STATIC_LIBRARY_SUFFIX})
    set(DEPS ${DEPS} ${CUDA_LIB}/cublas${CMAKE_STATIC_LIBRARY_SUFFIX})
    set(DEPS ${DEPS} ${CUDA_LIB}/cudnn${CMAKE_STATIC_LIBRARY_SUFFIX})
  endif()
endif()

if (NOT WIN32)
    set(DEPS ${DEPS} ${OPENCV_DIR}/lib64/libopencv_imgcodecs${CMAKE_STATIC_LIBRARY_SUFFIX})
    set(DEPS ${DEPS} ${OPENCV_DIR}/lib64/libopencv_imgproc${CMAKE_STATIC_LIBRARY_SUFFIX})
    set(DEPS ${DEPS} ${OPENCV_DIR}/lib64/libopencv_core${CMAKE_STATIC_LIBRARY_SUFFIX})
    set(DEPS ${DEPS} ${OPENCV_DIR}/lib64/libopencv_highgui${CMAKE_STATIC_LIBRARY_SUFFIX})
    set(DEPS ${DEPS} ${OPENCV_DIR}/share/OpenCV/3rdparty/lib64/libIlmImf${CMAKE_STATIC_LIBRARY_SUFFIX})
    set(DEPS ${DEPS} ${OPENCV_DIR}/share/OpenCV/3rdparty/lib64/liblibjasper${CMAKE_STATIC_LIBRARY_SUFFIX})
    set(DEPS ${DEPS} ${OPENCV_DIR}/share/OpenCV/3rdparty/lib64/liblibpng${CMAKE_STATIC_LIBRARY_SUFFIX})
    set(DEPS ${DEPS} ${OPENCV_DIR}/share/OpenCV/3rdparty/lib64/liblibtiff${CMAKE_STATIC_LIBRARY_SUFFIX})
    set(DEPS ${DEPS} ${OPENCV_DIR}/share/OpenCV/3rdparty/lib64/libittnotify${CMAKE_STATIC_LIBRARY_SUFFIX})
    set(DEPS ${DEPS} ${OPENCV_DIR}/share/OpenCV/3rdparty/lib64/liblibjpeg-turbo${CMAKE_STATIC_LIBRARY_SUFFIX})
    set(DEPS ${DEPS} ${OPENCV_DIR}/share/OpenCV/3rdparty/lib64/liblibwebp${CMAKE_STATIC_LIBRARY_SUFFIX})
    set(DEPS ${DEPS} ${OPENCV_DIR}/share/OpenCV/3rdparty/lib64/libzlib${CMAKE_STATIC_LIBRARY_SUFFIX})
endif()

if (NOT WIN32)
    set(EXTERNAL_LIB "-ldl -lrt -lpthread")
    set(DEPS ${DEPS} ${EXTERNAL_LIB})
endif()

add_executable(mask_detector main.cc mask_detector.cc)
target_link_libraries(mask_detector ${DEPS})

if (WIN32)
    add_custom_command(TARGET mask_detector POST_BUILD
        COMMAND ${CMAKE_COMMAND} -E copy_if_different ${PADDLE_DIR}/third_party/install/mklml/lib/mklml.dll ./mklml.dll
        COMMAND ${CMAKE_COMMAND} -E copy_if_different ${PADDLE_DIR}/third_party/install/mklml/lib/libiomp5md.dll ./libiomp5md.dll
        COMMAND ${CMAKE_COMMAND} -E copy_if_different ${PADDLE_DIR}/third_party/install/mkldnn/lib/mkldnn.dll ./mkldnn.dll
        COMMAND ${CMAKE_COMMAND} -E copy_if_different ${PADDLE_DIR}/third_party/install/mklml/lib/mklml.dll ./release/mklml.dll
        COMMAND ${CMAKE_COMMAND} -E copy_if_different ${PADDLE_DIR}/third_party/install/mklml/lib/libiomp5md.dll ./release/libiomp5md.dll
        COMMAND ${CMAKE_COMMAND} -E copy_if_different ${PADDLE_DIR}/third_party/install/mkldnn/lib/mkldnn.dll ./release/mkldnn.dll
    )
endif()
```

deploy/demo/mask_detector/CMakeSettings.json (new file, mode 0 → 100644)

```json
{
  "configurations": [
    {
      "name": "x64-Release",
      "generator": "Ninja",
      "configurationType": "RelWithDebInfo",
      "inheritEnvironments": [ "msvc_x64_x64" ],
      "buildRoot": "${projectDir}\\out\\build\\${name}",
      "installRoot": "${projectDir}\\out\\install\\${name}",
      "cmakeCommandArgs": "",
      "buildCommandArgs": "-v",
      "ctestCommandArgs": "",
      "variables": [
        {
          "name": "CUDA_LIB",
          "value": "D:/projects/packages/cuda10_0/lib64",
          "type": "PATH"
        },
        {
          "name": "CUDNN_LIB",
          "value": "D:/projects/packages/cuda10_0/lib64",
          "type": "PATH"
        },
        {
          "name": "OPENCV_DIR",
          "value": "D:/projects/packages/opencv3_4_6",
          "type": "PATH"
        },
        {
          "name": "PADDLE_DIR",
          "value": "D:/projects/packages/fluid_inference1_6_1",
          "type": "PATH"
        },
        {
          "name": "CMAKE_BUILD_TYPE",
          "value": "Release",
          "type": "STRING"
        }
      ]
    }
  ]
}
```

deploy/demo/mask_detector/README.md (new file, mode 0 → 100644)

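# C++ Inference Deployment of the PaddleHub Masked-Face Detection and Classification Model

Through `PaddleHub`, Baidu has open-sourced the industry's first masked-face detection and classification model. The model detects every face in crowded areas, whether or not a mask is worn, and determines whether each person is wearing a mask. With `PaddleHub`, developers can quickly try the model and set up an online service, or export the model and integrate it into `C++` projects on platforms such as `Windows` and `Linux`.

This document describes how to deploy the model for `C++` inference on `Windows` and `Linux`.

It consists of two main steps:

- [1. Export the inference model from PaddleHub](#1-export-the-inference-model-from-paddlehub)
- [2. Build the C++ inference deployment](#2-build-the-c-inference-deployment)

## 1. Export the inference model from PaddleHub

#### 1.1 Install `PaddlePaddle` and `PaddleHub`

- `PaddlePaddle` installation: follow the [official installation guide](https://paddlepaddle.org.cn/install/quick) and choose the method that suits you
- `PaddleHub` installation: `pip install paddlehub`

#### 1.2 Export the inference models from `PaddleHub`

With network access, run `python export_model.py` to export two mask models for inference deployment. `pyramidbox_lite_mobile_mask` is the mobile version, with a smaller model and lower computational cost; `pyramidbox_lite_server_mask` is the server version, which is recommended here because it is more accurate than the mobile version.

After the script runs successfully, the exported model directory is structured as follows:

```
pyramidbox_lite_server_mask
|
├── mask_detector   # masked-face classification model
|   ├── __model__   # model file
│   └── __params__  # parameter file
|
└── pyramidbox_lite # masked-face detection model
    ├── __model__   # model file
    └── __params__  # parameter file
```

## 2. Build the C++ inference deployment

This project can be built and deployed as a C++ program on Windows and Linux. For platform-specific build instructions, see:

- [Building on Linux](./docs/linux_build.md)
- [Building on Windows with Visual Studio 2019](./docs/windows_build.md)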
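Before following either build guide, it can help to confirm that the export step produced the layout shown in section 1.2. The sketch below is a hypothetical helper (`MissingModelFiles` is not part of this commit) and assumes a C++17 compiler with `<filesystem>`, which is newer than the C++11 standard the demo's `CMakeLists.txt` selects. It checks the same paths that `main.cc` and `LoadModel` read: `<model_dir>/pyramidbox_lite` and `<model_dir>/mask_detector`, each containing `__model__` and `__params__`.

```cpp
#include <filesystem>
#include <string>
#include <vector>

namespace fs = std::filesystem;

// Hypothetical helper (not part of the demo): returns the model files that
// main.cc / LoadModel expect under `root` but that do not exist as regular
// files. An empty result means the exported layout looks complete.
std::vector<std::string> MissingModelFiles(const std::string& root) {
  std::vector<std::string> missing;
  for (const char* sub : {"pyramidbox_lite", "mask_detector"}) {
    for (const char* file : {"__model__", "__params__"}) {
      const fs::path p = fs::path(root) / sub / file;
      if (!fs::is_regular_file(p)) {
        missing.push_back(p.string());
      }
    }
  }
  return missing;
}
```

For example, `MissingModelFiles("./pyramidbox_lite_server_mask")` should return an empty vector after a successful export and otherwise lists the absent paths, detection model first.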

deploy/demo/mask_detector/docs/linux_build.md (new file, mode 0 → 100644)

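# C++ Inference Deployment of the Masked-Face Detection and Classification Model on Linux

## 1. System and software dependencies

### 1.1 Operating system and hardware requirements

- Ubuntu 14.04 or 16.04 (other platforms untested)
- GCC 4.8.5 ~ 4.9.2
- A CPU that supports Intel MKL-DNN
- NOTE: To run on an Nvidia GPU, install CUDA 9.0 / 10.0 + CUDNN 7.3+ yourself (CUDA 9.1/10.1 are not supported)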

### 1.2 Download the PaddlePaddle C++ inference library

The PaddlePaddle C++ inference library comes in CPU and GPU versions; the GPU version supports `CUDA 10.0` and `CUDA 9.0`.

Download links for each version of the C++ inference library:

| Version | Link |
| ---- | ---- |
| CPU+MKL | [fluid_inference.tgz](https://paddle-inference-lib.bj.bcebos.com/1.6.3-cpu-avx-mkl/fluid_inference.tgz) |
| CUDA9.0+MKL | [fluid_inference.tgz](https://paddle-inference-lib.bj.bcebos.com/1.6.3-gpu-cuda9-cudnn7-avx-mkl/fluid_inference.tgz) |
| CUDA10.0+MKL | [fluid_inference.tgz](https://paddle-inference-lib.bj.bcebos.com/1.6.3-gpu-cuda10-cudnn7-avx-mkl/fluid_inference.tgz) |

For other available inference library versions, see: [C++ inference library download list](https://paddlepaddle.org.cn/documentation/docs/zh/advanced_usage/deploy/inference/build_and_install_lib_cn.html)

Download and extract the archive. The extracted `fluid_inference` directory contains:

```
fluid_inference
├── paddle # paddle core libraries and headers
|
├── third_party # third-party dependencies and headers
|
└── version.txt # version and build information
```

**Note:** Place the extracted directory in a suitable location; **its path is used later as a build dependency**.

### 1.3 Build and install OpenCV

```shell
# 1. Download the OpenCV 3.4.6 source code
wget -c https://paddleseg.bj.bcebos.com/inference/opencv-3.4.6.zip
# 2. Extract it
unzip opencv-3.4.6.zip && cd opencv-3.4.6
# 3. Create a build directory and compile; this installs into $HOME/opencv3
mkdir build && cd build
cmake .. -DCMAKE_INSTALL_PREFIX=$HOME/opencv3 -DCMAKE_BUILD_TYPE=Release \
    -DBUILD_SHARED_LIBS=OFF -DWITH_IPP=OFF -DBUILD_IPP_IW=OFF \
    -DWITH_LAPACK=OFF -DWITH_EIGEN=OFF -DCMAKE_INSTALL_LIBDIR=lib64 \
    -DWITH_ZLIB=ON -DBUILD_ZLIB=ON -DWITH_JPEG=ON -DBUILD_JPEG=ON \
    -DWITH_PNG=ON -DBUILD_PNG=ON -DWITH_TIFF=ON -DBUILD_TIFF=ON
make -j4
make install
```

The `CMAKE_INSTALL_PREFIX` parameter sets the install path. After the steps above, `opencv` is installed in `$HOME/opencv3` (you may choose another path); **this directory is used later as a build dependency**.

## 2. Build and run

### 2.1 Configure the build script

`cd` into `PaddleHub/deploy/demo/mask_detector/` and open `linux_build.sh`; it contains settings like the following:

```shell
# Paddle inference library path
PADDLE_DIR=/PATH/TO/fluid_inference/
# OpenCV library path
OPENCV_DIR=/PATH/TO/opencv3gcc4.8/
# Whether to use the GPU
WITH_GPU=ON
# CUDA library path; set only when WITH_GPU=ON
CUDA_LIB=/PATH/TO/CUDA_LIB64/
# CUDNN library path; set only when WITH_GPU=ON and CUDA_LIB is valid
CUDNN_LIB=/PATH/TO/CUDNN_LIB64/

cd build
cmake .. \
    -DWITH_GPU=${WITH_GPU} \
    -DPADDLE_DIR=${PADDLE_DIR} \
    -DCUDA_LIB=${CUDA_LIB} \
    -DCUDNN_LIB=${CUDNN_LIB} \
    -DOPENCV_DIR=${OPENCV_DIR} \
    -DWITH_STATIC_LIB=OFF
make -j4
```

Adjust the parameters above to match your environment, then run the script to build the program:

```shell
sh linux_build.sh
```

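### 2.2 Run and visualize

The executable takes **2** arguments: the first is the path of the model directory exported earlier (for example `pyramidbox_lite_server_mask`), and the second is the path of the image to predict. An optional third argument, `1`, enables GPU inference.

Example:

```shell
./build/mask_detector /PATH/TO/pyramidbox_lite_server_mask/ /PATH/TO/TEST_IMAGE
```

When run, the program prints the position of each detected face box and whether a mask is worn; the visualized detection result is written to `result.jpg`.

**Sample prediction result:**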

deploy/demo/mask_detector/docs/windows_build.md (new file, mode 0 → 100644)

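# C++ Inference Deployment of the Masked-Face Detection and Classification Model on Windows

## 1. System and software dependencies

### 1.1 Basic dependencies

- Windows 10 / Windows Server 2016+ (other platforms untested)
- Visual Studio 2019 (Community or Professional edition)
- CUDA 9.0 / 10.0 + CUDNN 7.3+ (CUDA 9.1/10.1 are not supported)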

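### 1.2 Download OpenCV and set environment variables

- Download OpenCV 3.4.6 for Windows from the OpenCV website: [download](https://sourceforge.net/projects/opencvlibrary/files/3.4.6/opencv-3.4.6-vc14_vc15.exe/download)
- Run the downloaded executable and extract OpenCV to a suitable directory; this guide uses `D:\projects\opencv` as an example
- Add the OpenCV DLL directory to the system environment variables:
    - This PC (My Computer) -> Properties -> Advanced system settings -> Environment Variables
    - Under System variables, find `Path` (create it if it does not exist) and double-click it to edit
    - Click New, enter the OpenCV path, and save, e.g. `D:\projects\opencv\build\x64\vc14\bin`

**Note:** The `OpenCV` extraction directory is used later as a build setting, so place it in a suitable directory.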

### 1.3 Download the PaddlePaddle C++ inference library

The `PaddlePaddle` **C++ inference library** comes in `CPU` and `GPU` versions; the `GPU` version supports `CUDA 9.0` and `CUDA 10.0`.

Commonly used versions:

| Version | Link |
| ---- | ---- |
| CPU+MKL | [fluid_inference_install_dir.zip](https://paddle-wheel.bj.bcebos.com/1.6.3/win-infer/mkl/cpu/fluid_inference_install_dir.zip) |
| CUDA9.0+MKL | [fluid_inference_install_dir.zip](https://paddle-wheel.bj.bcebos.com/1.6.3/win-infer/mkl/post97/fluid_inference_install_dir.zip) |
| CUDA10.0+MKL | [fluid_inference_install_dir.zip](https://paddle-wheel.bj.bcebos.com/1.6.3/win-infer/mkl/post107/fluid_inference_install_dir.zip) |

For inference library versions for other platforms, [click here](https://paddlepaddle.org.cn/documentation/docs/zh/advanced_usage/deploy/inference/windows_cpp_inference.html) and choose the one that suits you.

Download and extract the archive. The extracted `fluid_inference_install_dir` directory contains:

```
fluid_inference_install_dir
├── paddle # paddle core libraries and headers
|
├── third_party # third-party dependencies and headers
|
└── version.txt # version and build information
```

**Note:** The path of the `fluid_inference_install_dir` directory is used later in the build settings, so place it in a suitable location.

## 2. Visual Studio 2019 编译

-

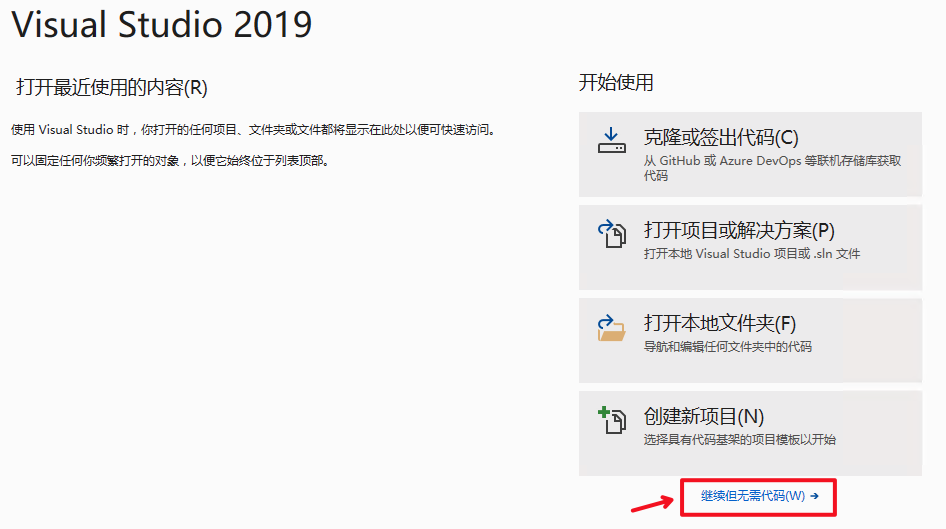

2.1 打开Visual Studio 2019 Community,点击

`继续但无需代码`

, 如下图:

-

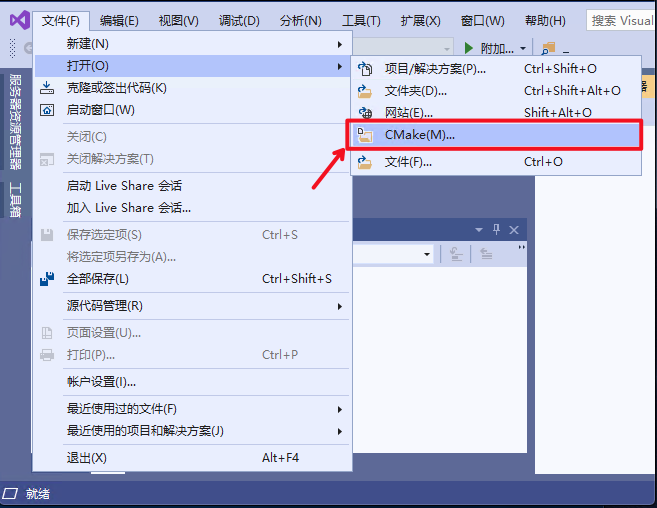

2.2 点击

`文件`

->

`打开`

->

`CMake`

, 如下图:

-

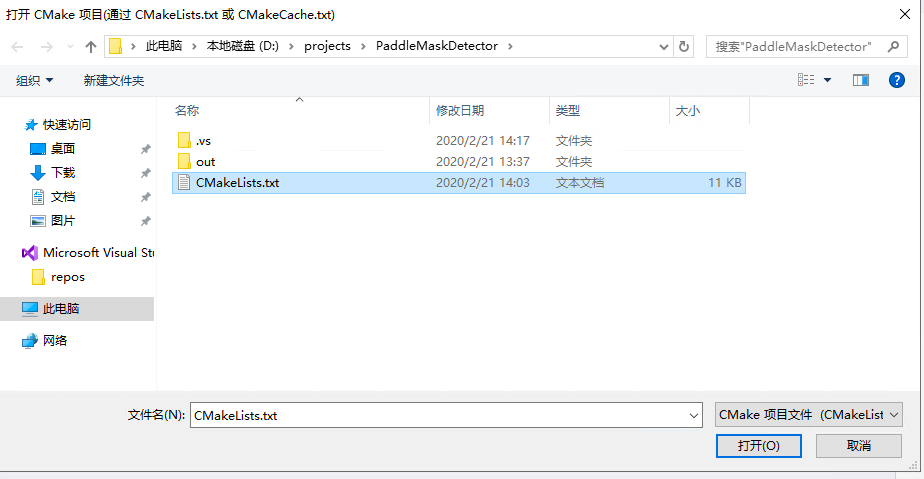

2.3 选择本项目根目录

`CMakeList.txt`

文件打开, 如下图:

-

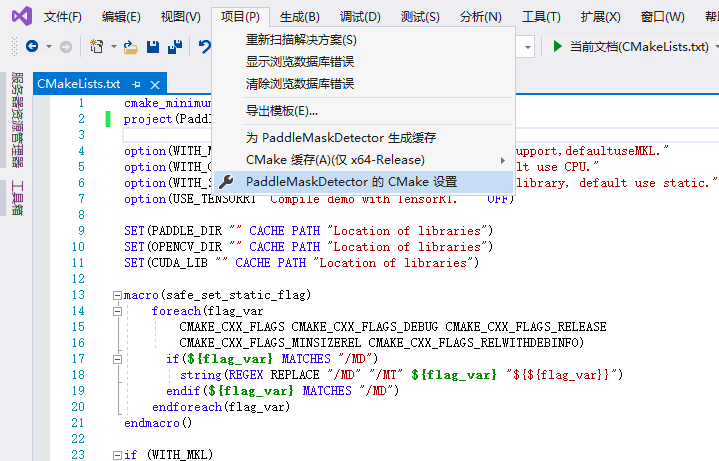

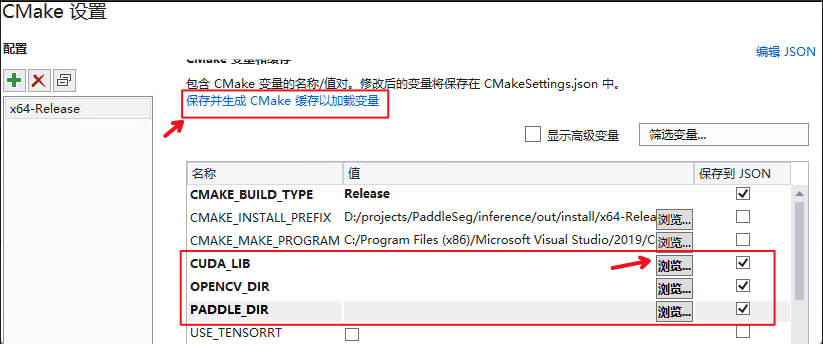

2.4 点击:

`项目`

->

`PaddleMaskDetector的CMake设置`

-

2.5 点击浏览设置

`OPENCV_DIR`

,

`CUDA_LIB`

和

`PADDLE_DIR`

3个编译依赖库的位置, 设置完成后点击

`保存并生成CMake缓存并加载变量`

-

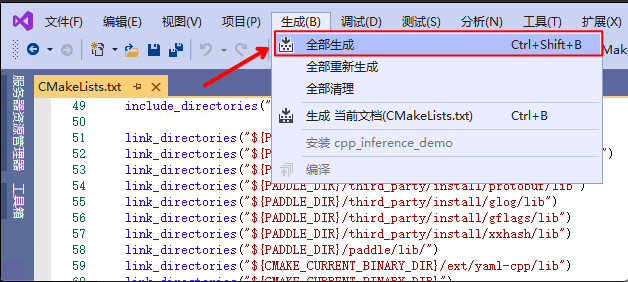

2.6 点击

`生成`

->

`全部生成`

编译项目

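## 3. Run the program

After a successful build, the executable is in the project subdirectory `out\build\x64-Release`. Run it as follows:

- Open `cmd` and change to that directory
- Run the following command, passing the mask model path and a test image:

```shell
mask_detector.exe ./pyramidbox_lite_server_mask/ ./images/mask_input.png
```

The first argument is the inference model exported by `PaddleHub`; the second is the image to run prediction on.

After the run, the prediction result is saved to `result.jpg`.

**Sample prediction result:**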

deploy/demo/mask_detector/export_model.py (new file, mode 0 → 100644)

```python
# Copyright (c) 2020 PaddlePaddle Authors. All Rights Reserved.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
import paddlehub as hub

# Load mask detector module from PaddleHub
module = hub.Module(name="pyramidbox_lite_server_mask")
# Export inference model for deployment
module.processor.save_inference_model("./pyramidbox_lite_server_mask")
print("pyramidbox_lite_server_mask module export done!")

# Load mask detector (mobile version) module from PaddleHub
module = hub.Module(name="pyramidbox_lite_mobile_mask")
# Export inference model for deployment
module.processor.save_inference_model("./pyramidbox_lite_mobile_mask")
print("pyramidbox_lite_mobile_mask module export done!")
```

deploy/demo/mask_detector/linux_build.sh (new file, mode 0 → 100644)

```shell
WITH_GPU=ON
PADDLE_DIR=/ssd3/chenzeyu01/PaddleMaskDetector/fluid_inference
CUDA_LIB=/home/work/cuda-10.1/lib64/
CUDNN_LIB=/home/work/cudnn/cudnn_v7.4/cuda/lib64/
OPENCV_DIR=/ssd3/chenzeyu01/PaddleMaskDetector/opencv3gcc4.8/

rm -rf build
mkdir -p build
cd build

cmake .. \
    -DWITH_GPU=${WITH_GPU} \
    -DPADDLE_DIR=${PADDLE_DIR} \
    -DCUDA_LIB=${CUDA_LIB} \
    -DCUDNN_LIB=${CUDNN_LIB} \
    -DOPENCV_DIR=${OPENCV_DIR} \
    -DWITH_STATIC_LIB=OFF
make clean
make -j12
```

deploy/demo/mask_detector/main.cc (new file, mode 0 → 100644)

```cpp
// Copyright (c) 2020 PaddlePaddle Authors. All Rights Reserved.
//
// Licensed under the Apache License, Version 2.0 (the "License");
// you may not use this file except in compliance with the License.
// You may obtain a copy of the License at
//
//     http://www.apache.org/licenses/LICENSE-2.0
//
// Unless required by applicable law or agreed to in writing, software
// distributed under the License is distributed on an "AS IS" BASIS,
// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
// See the License for the specific language governing permissions and
// limitations under the License.

#include <cstdio>
#include <iostream>
#include <string>
#include <vector>

#include "mask_detector.h"  // NOLINT

int main(int argc, char* argv[]) {
  if (argc < 3 || argc > 4) {
    std::cout << "Usage: "
              << "./mask_detector ./models/ ./images/test.png [use_gpu]"
              << std::endl;
    return -1;
  }
  // Optional 3rd argument: a non-zero integer enables GPU inference
  bool use_gpu = (argc == 4 ? std::stoi(argv[3]) : false);
  auto det_model_dir = std::string(argv[1]) + "/pyramidbox_lite";
  auto cls_model_dir = std::string(argv[1]) + "/mask_detector";
  auto image_path = argv[2];

  // Init Detection Model
  float det_shrink = 0.6;
  float det_threshold = 0.7;
  std::vector<float> det_means = {104, 177, 123};
  std::vector<float> det_scale = {0.007843, 0.007843, 0.007843};
  FaceDetector detector(
      det_model_dir,
      det_means,
      det_scale,
      use_gpu,
      det_threshold);

  // Init Classification Model
  std::vector<float> cls_means = {0.5, 0.5, 0.5};
  std::vector<float> cls_scale = {1.0, 1.0, 1.0};
  MaskClassifier classifier(
      cls_model_dir,
      cls_means,
      cls_scale,
      use_gpu);

  // Load image and stop early if it cannot be decoded
  cv::Mat img = cv::imread(image_path, cv::IMREAD_COLOR);
  if (img.empty()) {
    std::cerr << "Failed to read image: " << image_path << std::endl;
    return -1;
  }
  // Prediction result
  std::vector<FaceResult> results;
  // Stage1: Face detection
  detector.Predict(img, &results, det_shrink);
  // Stage2: Mask wearing classification
  classifier.Predict(&results);
  for (const FaceResult& item : results) {
    printf("{left=%d, right=%d, top=%d, bottom=%d},"
           " class_id=%d, confidence=%.5f\n",
           item.rect[0],
           item.rect[1],
           item.rect[2],
           item.rect[3],
           item.class_id,
           item.score);
  }

  // Visualization result
  cv::Mat vis_img;
  VisualizeResult(img, results, &vis_img);
  cv::imwrite("result.jpg", vis_img);
  return 0;
}
```

deploy/demo/mask_detector/mask_detector.cc (new file, mode 0 → 100644)

```cpp
// Copyright (c) 2020 PaddlePaddle Authors. All Rights Reserved.
//
// Licensed under the Apache License, Version 2.0 (the "License");
// you may not use this file except in compliance with the License.
// You may obtain a copy of the License at
//
//     http://www.apache.org/licenses/LICENSE-2.0
//
// Unless required by applicable law or agreed to in writing, software
// distributed under the License is distributed on an "AS IS" BASIS,
// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
// See the License for the specific language governing permissions and
// limitations under the License.

#include "mask_detector.h"

// Normalize the image by (pix - mean) * scale and write it to
// `input_buffer` in planar CHW layout (one full plane per channel)
void NormalizeImage(
    const std::vector<float> &mean,
    const std::vector<float> &scale,
    cv::Mat& im,  // NOLINT
    float* input_buffer) {
  int height = im.rows;
  int width = im.cols;
  int stride = width * height;
  for (int h = 0; h < height; h++) {
    for (int w = 0; w < width; w++) {
      int base = h * width + w;
      input_buffer[base + 0 * stride] =
          (im.at<cv::Vec3f>(h, w)[0] - mean[0]) * scale[0];
      input_buffer[base + 1 * stride] =
          (im.at<cv::Vec3f>(h, w)[1] - mean[1]) * scale[1];
      input_buffer[base + 2 * stride] =
          (im.at<cv::Vec3f>(h, w)[2] - mean[2]) * scale[2];
    }
  }
}
```
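To make the layout change in `NormalizeImage` concrete, the sketch below applies the same per-pixel formula to a plain interleaved float buffer instead of a `cv::Mat`; that substitution, and the name `NormalizeHwcToChw`, are assumptions made so the example runs without OpenCV. Channel `c` of pixel `(h, w)` moves from index `(h * W + w) * 3 + c` to `c * H * W + h * W + w`, i.e. from interleaved HWC to the planar CHW order that matches the `{1, C, H, W}` input shape set in `FaceDetector::Preprocess`. With the detector settings in `main.cc` (`det_means = {104, 177, 123}`, `det_scale = 0.007843`, about `1/127.5`), each channel is centered on its mean and divided by roughly 127.5.

```cpp
#include <cstddef>
#include <vector>

// Sketch of the NormalizeImage transform on a plain HWC buffer (3 channels):
//   out[c * H * W + h * W + w] = (in[(h * W + w) * 3 + c] - mean[c]) * scale[c]
std::vector<float> NormalizeHwcToChw(const std::vector<float>& hwc, int height,
                                     int width, const std::vector<float>& mean,
                                     const std::vector<float>& scale) {
  const int stride = height * width;  // number of values in one channel plane
  std::vector<float> chw(static_cast<std::size_t>(3) * stride);
  for (int h = 0; h < height; ++h) {
    for (int w = 0; w < width; ++w) {
      const int base = h * width + w;  // pixel index within a plane
      for (int c = 0; c < 3; ++c) {
        chw[c * stride + base] = (hwc[base * 3 + c] - mean[c]) * scale[c];
      }
    }
  }
  return chw;
}
```

For a 1×2 image with pixels `(104, 177, 123)` and `(105, 178, 124)`, means `{104, 177, 123}` and unit scale, the result is `{0, 1, 0, 1, 0, 1}`: each pair is one channel plane.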

```cpp
// Load Model and return model predictor
void LoadModel(
    const std::string& model_dir,
    bool use_gpu,
    std::unique_ptr<paddle::PaddlePredictor>* predictor) {
  // Config the model info
  paddle::AnalysisConfig config;
  config.SetModel(model_dir + "/__model__",
                  model_dir + "/__params__");
  if (use_gpu) {
    // 100 MB initial GPU memory pool on device 0
    config.EnableUseGpu(100, 0);
  } else {
    config.DisableGpu();
  }
  // Feed/fetch ops are disabled: tensors are exchanged via the zero-copy API
  config.SwitchUseFeedFetchOps(false);
  config.SwitchSpecifyInputNames(true);
  // Memory optimization
  config.EnableMemoryOptim();
  *predictor = std::move(CreatePaddlePredictor(config));
}

// Visualize MaskDetector results
void VisualizeResult(const cv::Mat& img,
                     const std::vector<FaceResult>& results,
                     cv::Mat* vis_img) {
  // Draw on a deep copy: the input stays untouched, and an image with no
  // detected faces still yields a non-empty output for cv::imwrite
  *vis_img = img.clone();
  for (size_t i = 0; i < results.size(); ++i) {
    int w = results[i].rect[1] - results[i].rect[0];
    int h = results[i].rect[3] - results[i].rect[2];
    cv::Rect roi = cv::Rect(results[i].rect[0], results[i].rect[2], w, h);

    // Configure color and text size
    cv::Scalar roi_color;
    std::string text;
    if (results[i].class_id == 1) {
      text = "MASK: ";
      roi_color = cv::Scalar(0, 255, 0);
    } else {
      text = "NO MASK: ";
      roi_color = cv::Scalar(0, 0, 255);
    }
    text += std::to_string(static_cast<int>(results[i].score * 100)) + "%";
    int font_face = cv::FONT_HERSHEY_TRIPLEX;
    double font_scale = 1.f;
    int thickness = 1;
    cv::Size text_size = cv::getTextSize(text,
                                         font_face,
                                         font_scale,
                                         thickness,
                                         nullptr);
    // Rescale the font so the label is about as wide as the face box
    float new_font_scale = roi.width * font_scale / text_size.width;
    text_size = cv::getTextSize(text,
                                font_face,
                                new_font_scale,
                                thickness,
                                nullptr);
    cv::Point origin;
    origin.x = roi.x;
    origin.y = roi.y;

    // Configure text background
    cv::Rect text_back = cv::Rect(results[i].rect[0],
                                  results[i].rect[2] - text_size.height,
                                  text_size.width,
                                  text_size.height);

    // Draw roi object, text, and background
    cv::rectangle(*vis_img, roi, roi_color, 2);
    cv::rectangle(*vis_img, text_back, cv::Scalar(225, 225, 225), -1);
    cv::putText(*vis_img,
                text,
                origin,
                font_face,
                new_font_scale,
                cv::Scalar(0, 0, 0),
                thickness);
  }
}
```
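The label logic in `VisualizeResult` can be isolated from OpenCV as sketched below; `MaskLabel` and `FitFontScale` are hypothetical helper names for illustration only. As in the drawing code, class id `1` means a mask is worn, and the score is truncated (not rounded) to a whole percentage. The font rescaling multiplies by `roi.width / text_size.width`, which assumes the rendered text width grows roughly in proportion to the font scale, so the label ends up approximately as wide as the face box.

```cpp
#include <string>

// Hypothetical helper mirroring the text built in VisualizeResult:
// class_id == 1 -> "MASK: ", anything else -> "NO MASK: ", followed by the
// score truncated to an integer percentage.
std::string MaskLabel(int class_id, float score) {
  std::string text = (class_id == 1) ? "MASK: " : "NO MASK: ";
  text += std::to_string(static_cast<int>(score * 100)) + "%";
  return text;
}

// Hypothetical helper mirroring new_font_scale: if the text measures
// `text_width` pixels at `font_scale`, return the scale that should make it
// about `roi_width` pixels wide (assuming width is ~proportional to scale).
double FitFontScale(int roi_width, int text_width, double font_scale) {
  return roi_width * font_scale / text_width;
}
```

For example, a face classified as masked with score `0.999` is labeled `MASK: 99%`, and a label measuring 100 px at scale `1.0` over a 200 px box is redrawn at scale `2.0`.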

```cpp
void FaceDetector::Preprocess(const cv::Mat& image_mat, float shrink) {
  // Clone the image : keep the original mat for postprocess
  cv::Mat im = image_mat.clone();
  cv::resize(im, im, cv::Size(), shrink, shrink, cv::INTER_CUBIC);
  im.convertTo(im, CV_32FC3, 1.0);
  int rc = im.channels();
  int rh = im.rows;
  int rw = im.cols;
  input_shape_ = {1, rc, rh, rw};
  input_data_.resize(1 * rc * rh * rw);
  float* buffer = input_data_.data();
  NormalizeImage(mean_, scale_, im, buffer);
}
```
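`Preprocess` calls `cv::resize` with an empty `dsize` and `fx = fy = shrink`. In that case OpenCV documents the output size as `round(fx * src.cols)` by `round(fy * src.rows)`, so the detector's input shape depends on the image size. The sketch below estimates that shape without OpenCV; `DetectorInputShape` is a hypothetical helper, and its use of `std::lround` may differ from OpenCV's internal rounding on exact `.5` ties. With `det_shrink = 0.6` from `main.cc`, a 1920×1080 three-channel image would become a `{1, 3, 648, 1152}` input tensor.

```cpp
#include <array>
#include <cmath>

// Hypothetical helper: the NCHW shape FaceDetector::Preprocess would store in
// input_shape_ for an image of `rows` x `cols` pixels with `channels` channels.
// The float shrink is widened to double, as it is when passed to cv::resize.
std::array<int, 4> DetectorInputShape(int rows, int cols, int channels,
                                      float shrink) {
  const double s = static_cast<double>(shrink);
  const int rh = static_cast<int>(std::lround(rows * s));  // resized height
  const int rw = static_cast<int>(std::lround(cols * s));  // resized width
  return {1, channels, rh, rw};
}
```

Because `Postprocess` divides box coordinates by the same `shrink`, a smaller value speeds up detection on large images at the cost of resolution for small faces.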

```cpp
void FaceDetector::Postprocess(
    const cv::Mat& raw_mat,
    float shrink,
    std::vector<FaceResult>* result) {
  result->clear();
  int rh = input_shape_[2];
  int rw = input_shape_[3];
  // Each detection occupies 6 floats: [class_id, score, xmin, ymin, xmax, ymax]
  int total_size = output_data_.size() / 6;
  for (int j = 0; j < total_size; ++j) {
    // Class id (computed but not stored here)
    int class_id = static_cast<int>(round(output_data_[0 + j * 6]));
    // Confidence score
    float score = output_data_[1 + j * 6];
    // Scale by the resized input size, then divide by `shrink` to map the box
    // back to the original image
    int xmin = (output_data_[2 + j * 6] * rw) / shrink;
    int ymin = (output_data_[3 + j * 6] * rh) / shrink;
    int xmax = (output_data_[4 + j * 6] * rw) / shrink;
    int ymax = (output_data_[5 + j * 6] * rh) / shrink;
    int wd = xmax - xmin;
    int hd = ymax - ymin;
    if (score > threshold_) {
      // Clip the box to the original image so the ROI view stays in bounds
      auto roi = cv::Rect(xmin, ymin, wd, hd) &
                 cv::Rect(0, 0, raw_mat.cols, raw_mat.rows);
      if (roi.area() <= 0) {
        continue;  // box lies entirely outside the image
      }
      // A view ref to original mat
      cv::Mat roi_ref(raw_mat, roi);
      FaceResult result_item;
      result_item.rect = {xmin, xmax, ymin, ymax};
      result_item.roi_rect = roi_ref;
      result->push_back(result_item);
    }
  }
}
```

void FaceDetector::Predict(const cv::Mat& im,
                           std::vector<FaceResult>* result,
                           float shrink) {
  // Preprocess image
  Preprocess(im, shrink);
  // Prepare input tensor
  auto input_names = predictor_->GetInputNames();
  auto in_tensor = predictor_->GetInputTensor(input_names[0]);
  in_tensor->Reshape(input_shape_);
  in_tensor->copy_from_cpu(input_data_.data());
  // Run predictor
  predictor_->ZeroCopyRun();
  // Get output tensor
  auto output_names = predictor_->GetOutputNames();
  auto out_tensor = predictor_->GetOutputTensor(output_names[0]);
  std::vector<int> output_shape = out_tensor->shape();
  // Calculate output length
  int output_size = 1;
  for (int dim : output_shape) {
    output_size *= dim;
  }
  output_data_.resize(output_size);
  out_tensor->copy_to_cpu(output_data_.data());
  // Postprocess result
  Postprocess(im, shrink, result);
}

inline void MaskClassifier::Preprocess(std::vector<FaceResult>* faces) {
  int batch_size = faces->size();
  input_shape_ = {batch_size,
                  EVAL_CROP_SIZE_[0],
                  EVAL_CROP_SIZE_[1],
                  EVAL_CROP_SIZE_[2]};
  // Reallocate input buffer
  int input_size = 1;
  for (int x : input_shape_) {
    input_size *= x;
  }
  input_data_.resize(input_size);
  auto buffer_base = input_data_.data();
  for (int i = 0; i < batch_size; ++i) {
    cv::Mat im = (*faces)[i].roi_rect;
    // Resize
    int rc = im.channels();
    int rw = im.cols;
    int rh = im.rows;
    cv::Size resize_size(input_shape_[3], input_shape_[2]);
    if (rw != input_shape_[3] || rh != input_shape_[2]) {
      cv::resize(im, im, resize_size, 0.f, 0.f, cv::INTER_CUBIC);
    }
    im.convertTo(im, CV_32FC3, 1.0 / 256.0);
    rc = im.channels();
    rw = im.cols;
    rh = im.rows;
    float* buffer_i = buffer_base + i * rc * rw * rh;
    NormalizeImage(mean_, scale_, im, buffer_i);
  }
}

void MaskClassifier::Postprocess(std::vector<FaceResult>* faces) {
  float* data = output_data_.data();
  int batch_size = faces->size();
  int out_num = output_data_.size();
  for (int i = 0; i < batch_size; ++i) {
    auto out_addr = data + (out_num / batch_size) * i;
    int best_class_id = 0;
    float best_class_score = *(best_class_id + out_addr);
    for (int j = 0; j < (out_num / batch_size); ++j) {
      auto infer_class = j;
      auto score = *(j + out_addr);
      if (score > best_class_score) {
        best_class_id = infer_class;
        best_class_score = score;
      }
    }
    (*faces)[i].class_id = best_class_id;
    (*faces)[i].score = best_class_score;
  }
}

void MaskClassifier::Predict(std::vector<FaceResult>* faces) {
  // Nothing to classify: avoid running the predictor on an empty batch
  if (faces->empty()) {
    return;
  }
  Preprocess(faces);
  // Prepare input tensor
  auto input_names = predictor_->GetInputNames();
  auto in_tensor = predictor_->GetInputTensor(input_names[0]);
  in_tensor->Reshape(input_shape_);
  in_tensor->copy_from_cpu(input_data_.data());
  // Run predictor
  predictor_->ZeroCopyRun();
  // Get output tensor
  auto output_names = predictor_->GetOutputNames();
  auto out_tensor = predictor_->GetOutputTensor(output_names[1]);
  std::vector<int> output_shape = out_tensor->shape();
  // Calculate output length
  int output_size = 1;
  for (int dim : output_shape) {
    output_size *= dim;
  }
  output_data_.resize(output_size);
  out_tensor->copy_to_cpu(output_data_.data());
  Postprocess(faces);
}
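FaceDetector::Postprocess reads the detector output as consecutive rows of six floats: class id, score, xmin, ymin, xmax, ymax. It multiplies the box coordinates by the resized input width and height before dividing by `shrink`, which implies they are normalized to [0, 1]. The sketch below isolates that decoding step in plain C++, with no OpenCV or Paddle dependency, so the coordinate mapping can be checked on its own. `DecodeDetections` and `DecodedBox` are hypothetical names for this illustration. The demo clips only the ROI view and keeps the unclipped `rect`. This sketch instead clips the stored coordinates to the original image and drops boxes that end up empty.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical result type for this sketch. Field order follows the
// {xmin, xmax, ymin, ymax} layout that the demo stores in FaceResult::rect.
struct DecodedBox {
  int xmin;
  int xmax;
  int ymin;
  int ymax;
  float score;
};

// Decode detector rows of [class_id, score, xmin, ymin, xmax, ymax].
// Assumption: box coordinates are normalized to [0, 1] relative to the
// resized input (in_w x in_h), which was produced by scaling the original
// image (orig_w x orig_h) by `shrink`.
std::vector<DecodedBox> DecodeDetections(const std::vector<float>& out,
                                         int in_w, int in_h, float shrink,
                                         int orig_w, int orig_h,
                                         float threshold) {
  assert(shrink > 0.f && "shrink must be positive");
  std::vector<DecodedBox> boxes;
  const std::size_t rows = out.size() / 6;  // ignore any trailing partial row
  for (std::size_t j = 0; j < rows; ++j) {
    const float* row = out.data() + j * 6;
    const float score = row[1];
    if (!(score > threshold)) {
      continue;  // same strict ">" test as FaceDetector::Postprocess
    }
    // Normalized -> resized-input pixels -> original-image pixels.
    int xmin = static_cast<int>(row[2] * in_w / shrink);
    int ymin = static_cast<int>(row[3] * in_h / shrink);
    int xmax = static_cast<int>(row[4] * in_w / shrink);
    int ymax = static_cast<int>(row[5] * in_h / shrink);
    // Clip to the original image so the box can safely index into it.
    xmin = std::max(0, std::min(xmin, orig_w));
    xmax = std::max(0, std::min(xmax, orig_w));
    ymin = std::max(0, std::min(ymin, orig_h));
    ymax = std::max(0, std::min(ymax, orig_h));
    if (xmax <= xmin || ymax <= ymin) {
      continue;  // box lies entirely outside the image
    }
    boxes.push_back({xmin, xmax, ymin, ymax, score});
  }
  return boxes;
}
```

For example, take a 320x160 image shrunk by 0.5 to 160x80 with a threshold of 0.7. The row [1, 0.9, 0.25, 0.25, 0.75, 0.5] then decodes to xmin 80, xmax 240, ymin 40, ymax 80 in original-image pixels.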

deploy/demo/mask_detector/mask_detector.h

0 → 100644


// Copyright (c) 2020 PaddlePaddle Authors. All Rights Reserved.
//
// Licensed under the Apache License, Version 2.0 (the "License");
// you may not use this file except in compliance with the License.
// You may obtain a copy of the License at
//
// http://www.apache.org/licenses/LICENSE-2.0
//
// Unless required by applicable law or agreed to in writing, software
// distributed under the License is distributed on an "AS IS" BASIS,
// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
// See the License for the specific language governing permissions and
// limitations under the License.

#pragma once

#include <string>
#include <vector>
#include <memory>
#include <utility>

#include <opencv2/core/core.hpp>
#include <opencv2/imgproc/imgproc.hpp>
#include <opencv2/highgui/highgui.hpp>

#include "paddle_inference_api.h" // NOLINT

// MaskDetector result for a single face
struct FaceResult {
  // Detection result: face rectangle as {xmin, xmax, ymin, ymax}
  std::vector<int> rect;
  // Detection result: cv::Mat view of the face rectangle
  cv::Mat roi_rect;
  // Classification result: confidence
  float score;
  // Classification result: class id
  int class_id;
};

// Load Paddle inference model
void LoadModel(const std::string& model_dir,
               bool use_gpu,
               std::unique_ptr<paddle::PaddlePredictor>* predictor);

// Visualize MaskDetector results
void VisualizeResult(const cv::Mat& img,
                     const std::vector<FaceResult>& results,
                     cv::Mat* vis_img);

class FaceDetector {
 public:
  explicit FaceDetector(const std::string& model_dir,
                        const std::vector<float>& mean,
                        const std::vector<float>& scale,
                        bool use_gpu = false,
                        float threshold = 0.7)
      : mean_(mean),
        scale_(scale),
        threshold_(threshold) {
    LoadModel(model_dir, use_gpu, &predictor_);
  }

  // Run predictor
  void Predict(const cv::Mat& img,
               std::vector<FaceResult>* result,
               float shrink);

 private:
  // Preprocess image and copy data to input buffer
  void Preprocess(const cv::Mat& image_mat, float shrink);
  // Postprocess result
  void Postprocess(const cv::Mat& raw_mat,
                   float shrink,
                   std::vector<FaceResult>* result);

  std::unique_ptr<paddle::PaddlePredictor> predictor_;
  std::vector<float> input_data_;
  std::vector<float> output_data_;
  std::vector<int> input_shape_;
  std::vector<float> mean_;
  std::vector<float> scale_;
  float threshold_;
};

class MaskClassifier {
 public:
  explicit MaskClassifier(const std::string& model_dir,
                          const std::vector<float>& mean,
                          const std::vector<float>& scale,
                          bool use_gpu = false)
      : mean_(mean),
        scale_(scale) {
    LoadModel(model_dir, use_gpu, &predictor_);
  }

  // Classify each face crop and fill in its class_id and score
  void Predict(std::vector<FaceResult>* faces);

 private:
  void Preprocess(std::vector<FaceResult>* faces);
  void Postprocess(std::vector<FaceResult>* faces);

  std::unique_ptr<paddle::PaddlePredictor> predictor_;
  std::vector<float> input_data_;
  std::vector<int> input_shape_;
  std::vector<float> output_data_;
  // Input crop size as {channels, height, width}
  const std::vector<int> EVAL_CROP_SIZE_ = {3, 128, 128};
  std::vector<float> mean_;
  std::vector<float> scale_;
};
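MaskClassifier::Postprocess in mask_detector.cc treats the classifier output as `batch_size` equal-length rows, one per face crop. It keeps the index and value of the largest score in each row as `class_id` and `score`. The standalone sketch below restates that argmax over a flat buffer. `ArgmaxPerRow` is a hypothetical helper, not part of the demo. Which class id corresponds to which label depends on the exported model's label order.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// For each of `batch_size` rows in the flat `scores` buffer, return the pair
// (index of the highest score, that score). Rows are assumed to be stored
// back to back with equal length, as in MaskClassifier::Postprocess.
std::vector<std::pair<int, float>> ArgmaxPerRow(const std::vector<float>& scores,
                                                std::size_t batch_size) {
  std::vector<std::pair<int, float>> best;
  // Guard against division by zero and rows with no classes.
  if (batch_size == 0 || scores.size() < batch_size) {
    return best;
  }
  assert(scores.size() % batch_size == 0 && "rows must have equal length");
  const std::size_t num_classes = scores.size() / batch_size;
  best.reserve(batch_size);
  for (std::size_t i = 0; i < batch_size; ++i) {
    const float* row = scores.data() + i * num_classes;
    int best_id = 0;
    float best_score = row[0];
    for (std::size_t j = 1; j < num_classes; ++j) {
      // Strict ">" keeps the first maximum on ties, like the demo.
      if (row[j] > best_score) {
        best_id = static_cast<int>(j);
        best_score = row[j];
      }
    }
    best.emplace_back(best_id, best_score);
  }
  return best;
}
```

Hoisting the row length out of the loop makes the explicit `batch_size == 0` check necessary. The demo avoids dividing by zero only because its division sits inside a loop that never runs for an empty batch.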
