add psgan and fom tutorials doc (#49)

* add psgan and fom tutorial doc

Showing

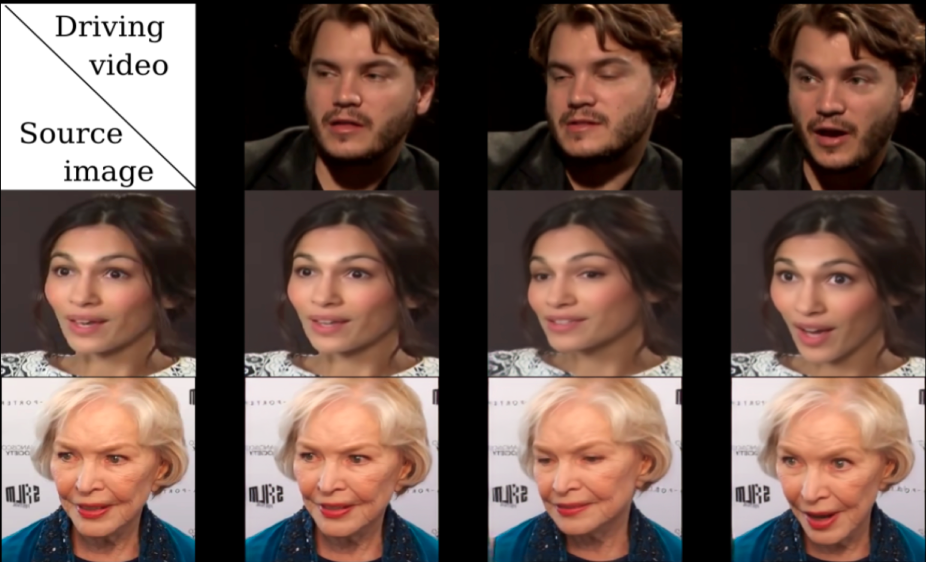

docs/imgs/fom_demo.png

0 → 100644

659.3 KB

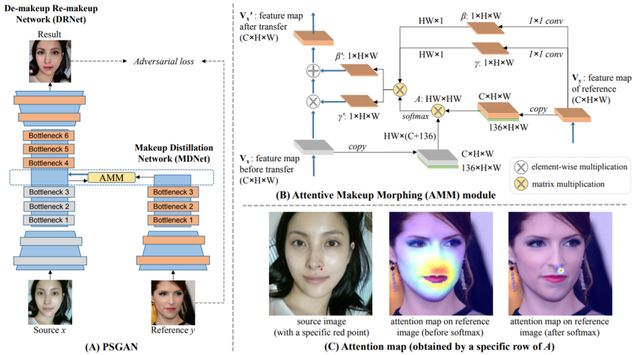

docs/imgs/psgan_arc.png

0 → 100644

266.2 KB

docs/tutorials/psgan_en.md

0 → 100644