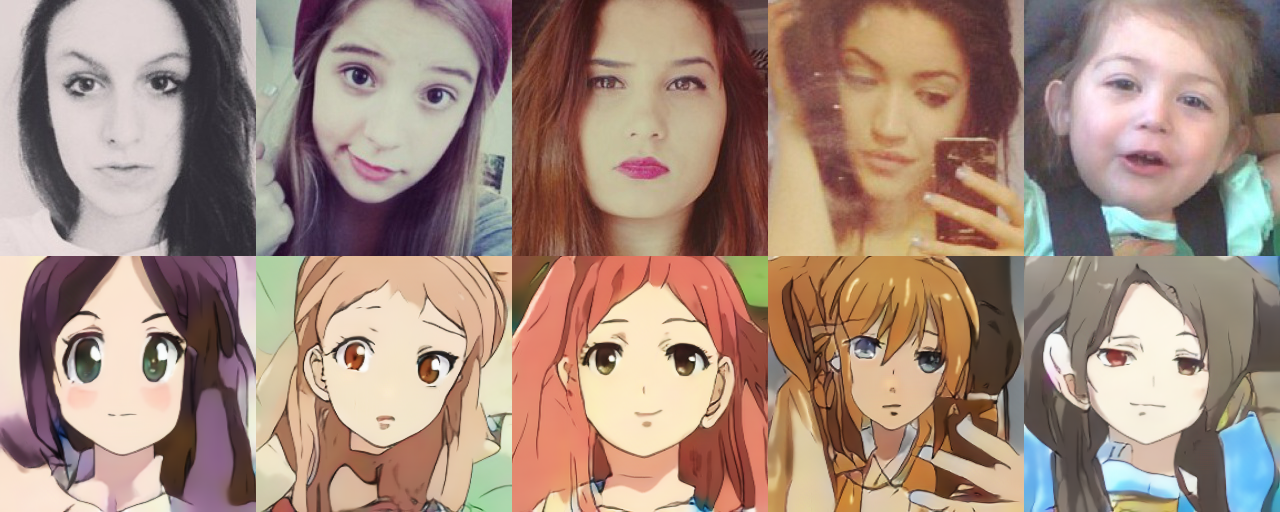

Add UGATIT model (#87)

* add ugatit model

docs/imgs/ugatit.png

0 → 100644

824.9 KB

ppgan/models/ugatit_model.py

0 → 100644

ppgan/modules/utils.py

0 → 100644
