add stylegan2 and pSp predictor (#113)

* add stylegan2 and pixel2style2pixel models, predictors and documents

Showing

applications/tools/styleganv2.py

0 → 100644

docs/imgs/pSp-input-crop.png

0 → 100644

73.3 KB

docs/imgs/pSp-input.jpg

0 → 100644

29.7 KB

docs/imgs/pSp-inversion.png

0 → 100644

86.1 KB

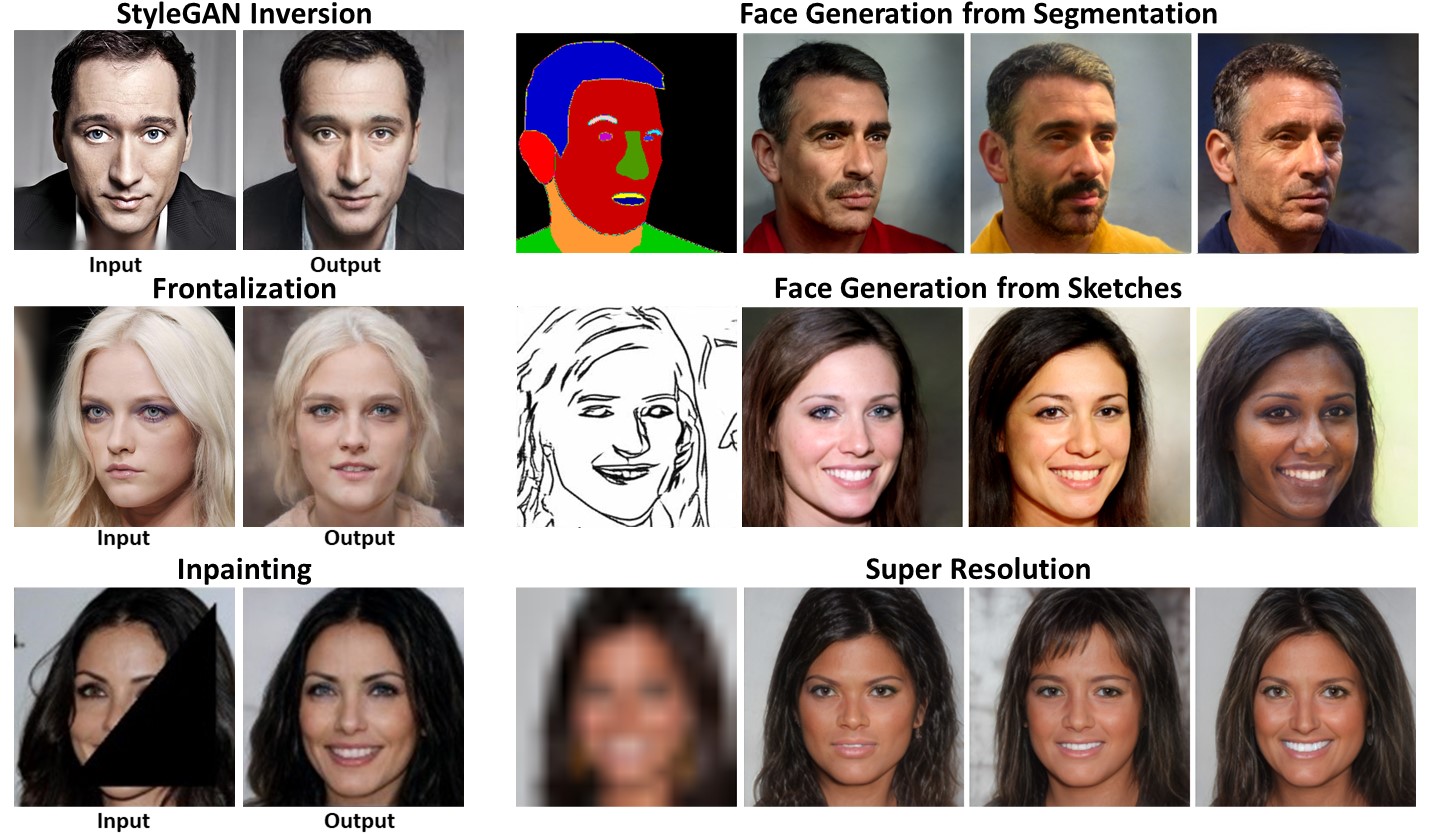

docs/imgs/pSp-teaser.jpg

0 → 100644

248.5 KB

docs/imgs/pSp-toonify.png

0 → 100644

93.3 KB

175.6 KB

docs/imgs/stylegan2-sample.png

0 → 100644

115.4 KB

420.9 KB

ppgan/modules/equalized.py

0 → 100644

ppgan/modules/fused_act.py

0 → 100644

ppgan/modules/upfirdn2d.py

0 → 100644