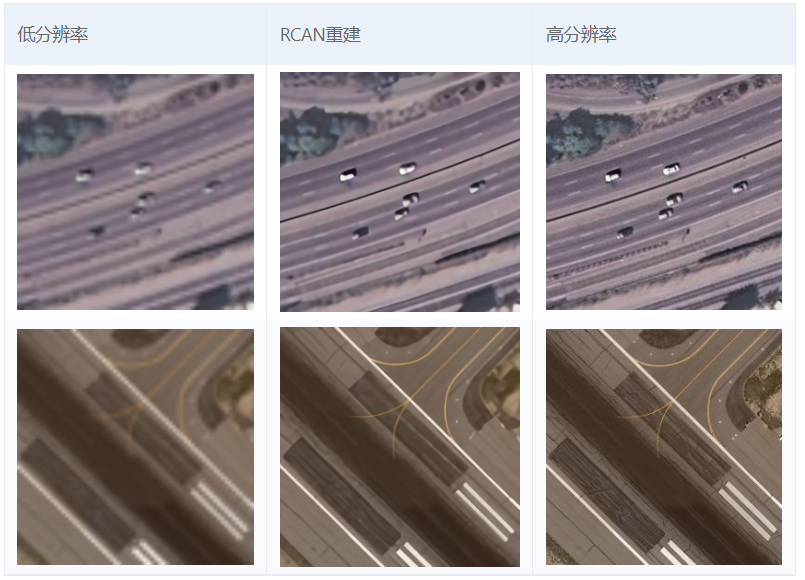

[feature] add rcan model for remote sensing image super-resolution (#610)

* [feature] add rcan model for super-resolution

configs/rcan_rssr_x4.yaml (new file, mode 0 → 100644)

docs/imgs/RSSR.png (new file, mode 0 → 100644, 435.0 KB)

ppgan/models/generators/rcan.py (new file, mode 0 → 100644)

ppgan/models/rcan_model.py (new file, mode 0 → 100644)