Merge pull request #112 from jhjiangcs/smc612

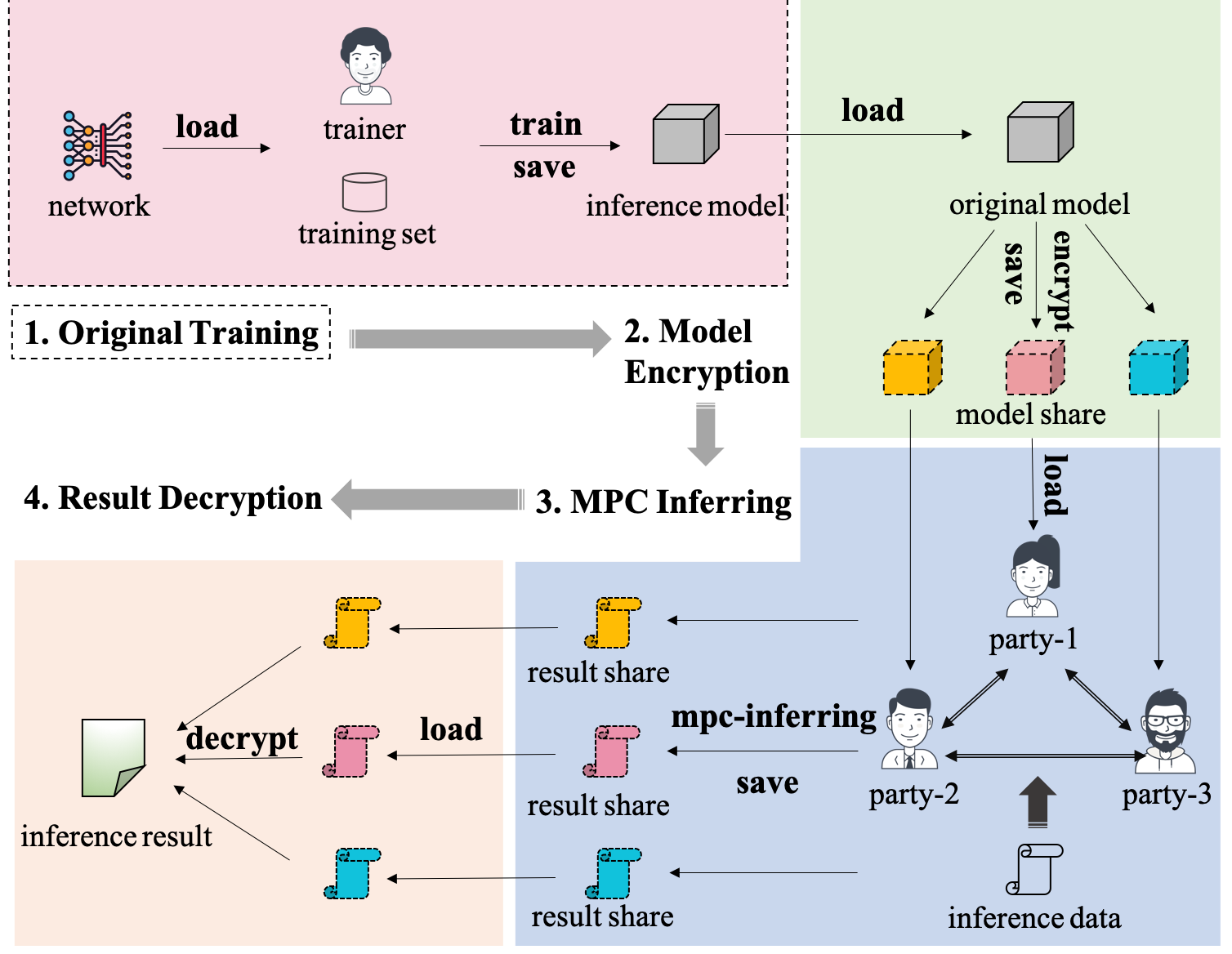

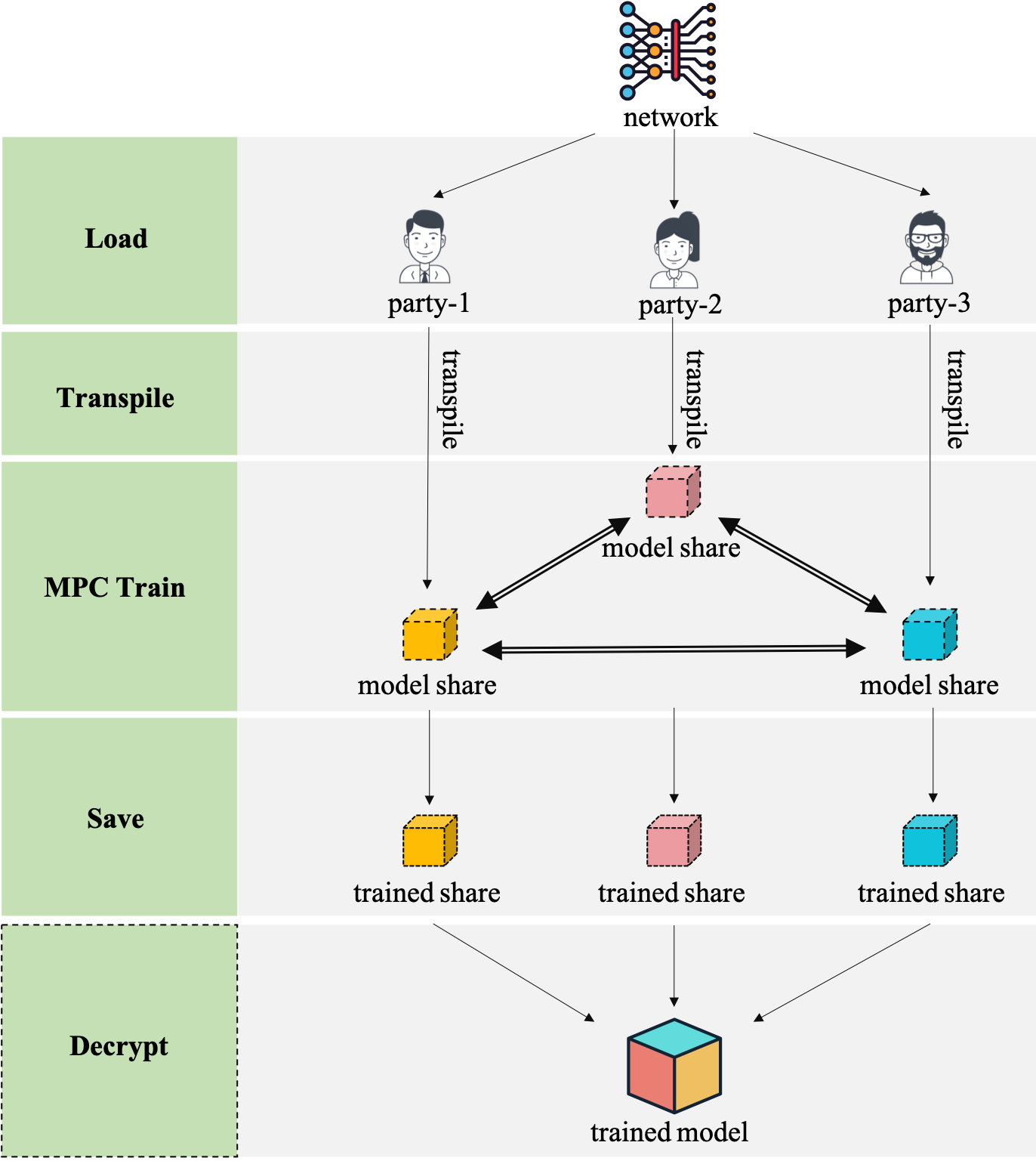

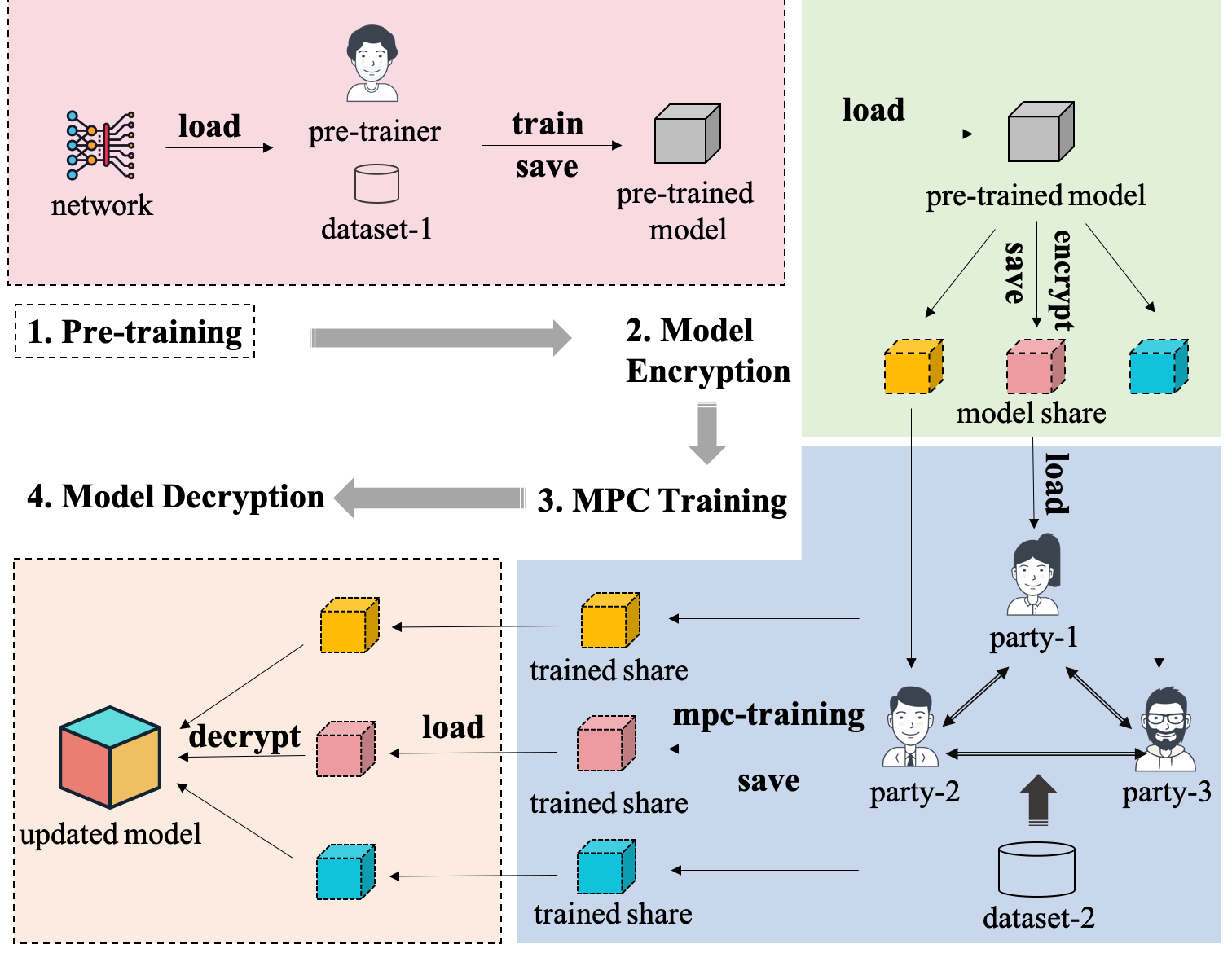

add model encryption and decryption demos.

File moved

File moved

File moved

File moved

File moved

File moved

259.1 KB

186.3 KB

258.5 KB