Merge pull request #32 from qjing666/document

Update PaddleFL document and quick start instructions

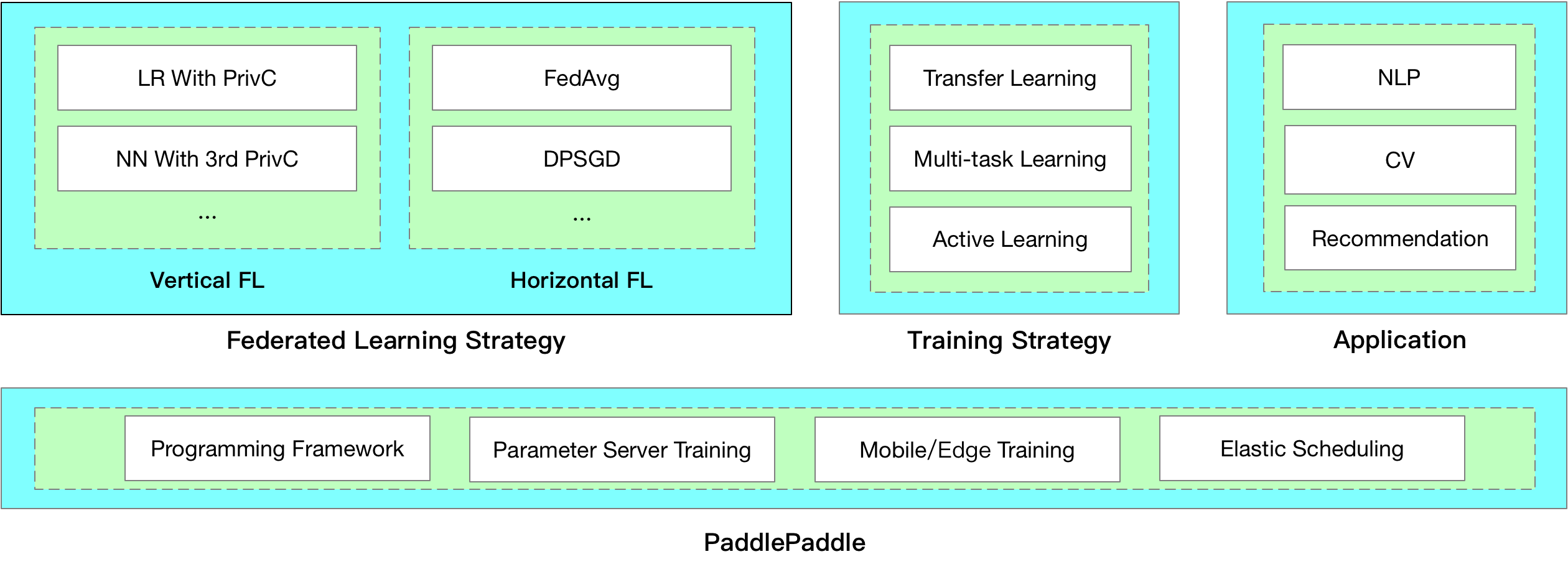


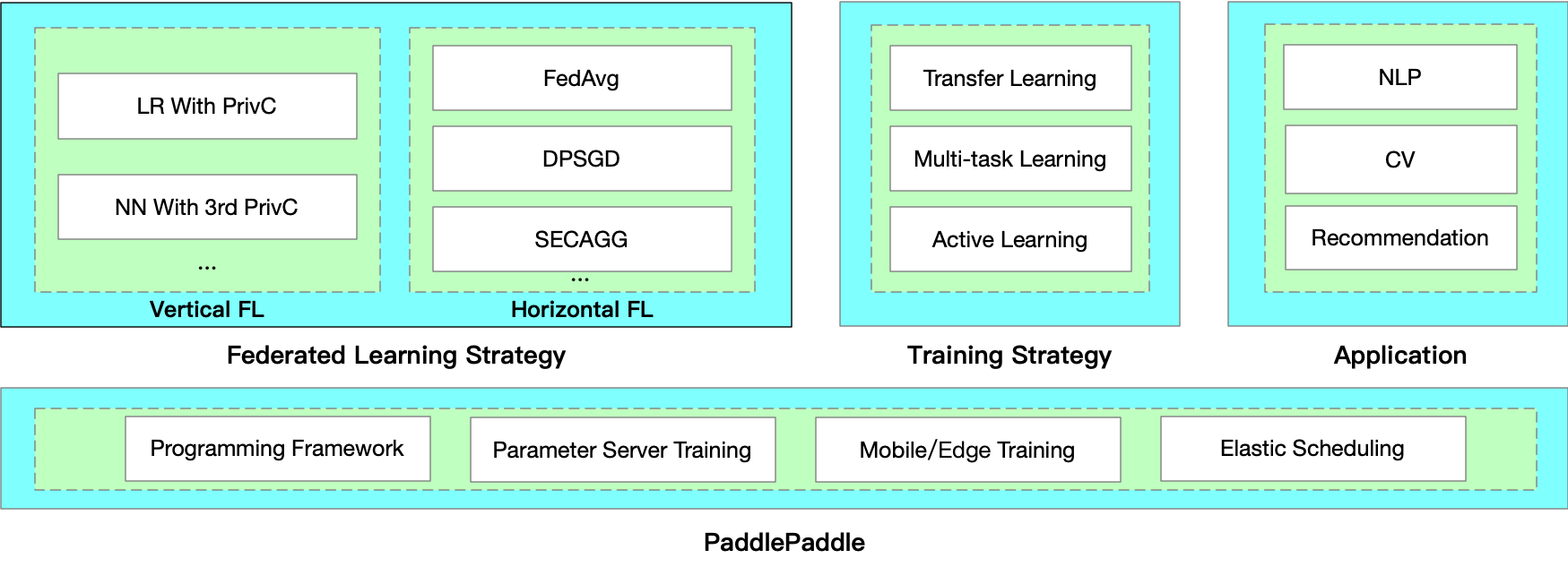


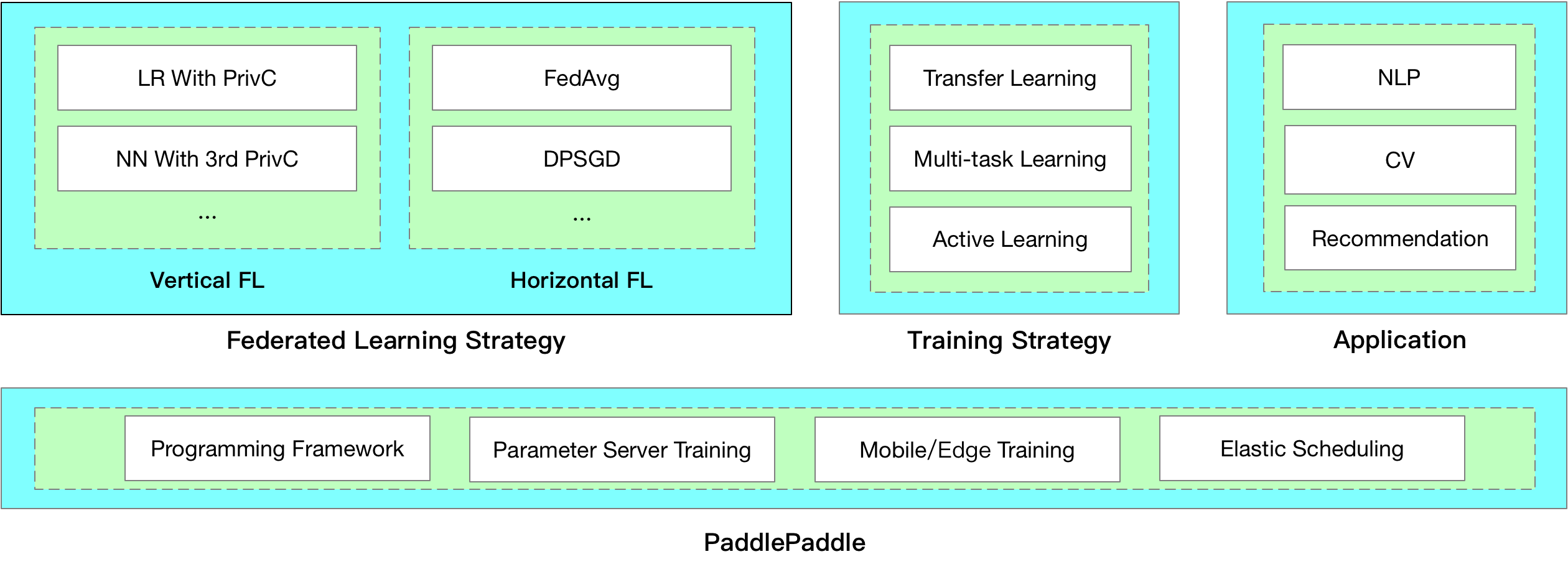


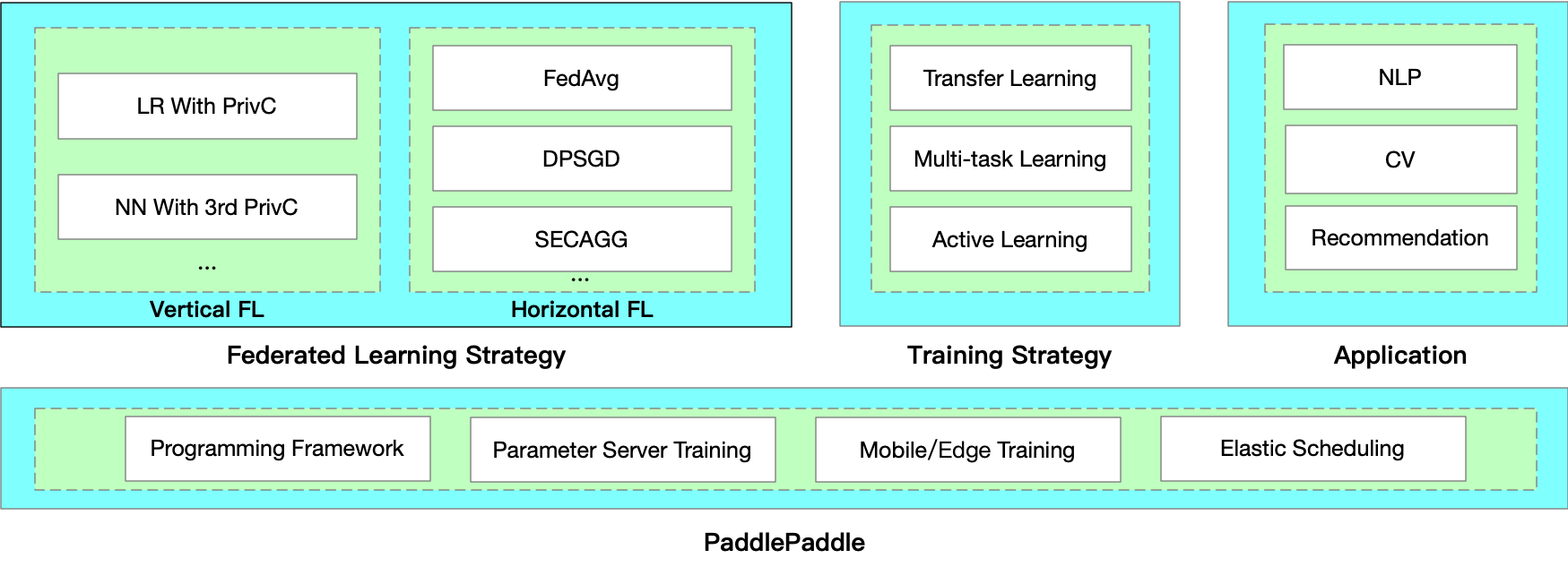
