Merge pull request #73 from qjing666/add_document

Update document in new_version

Showing

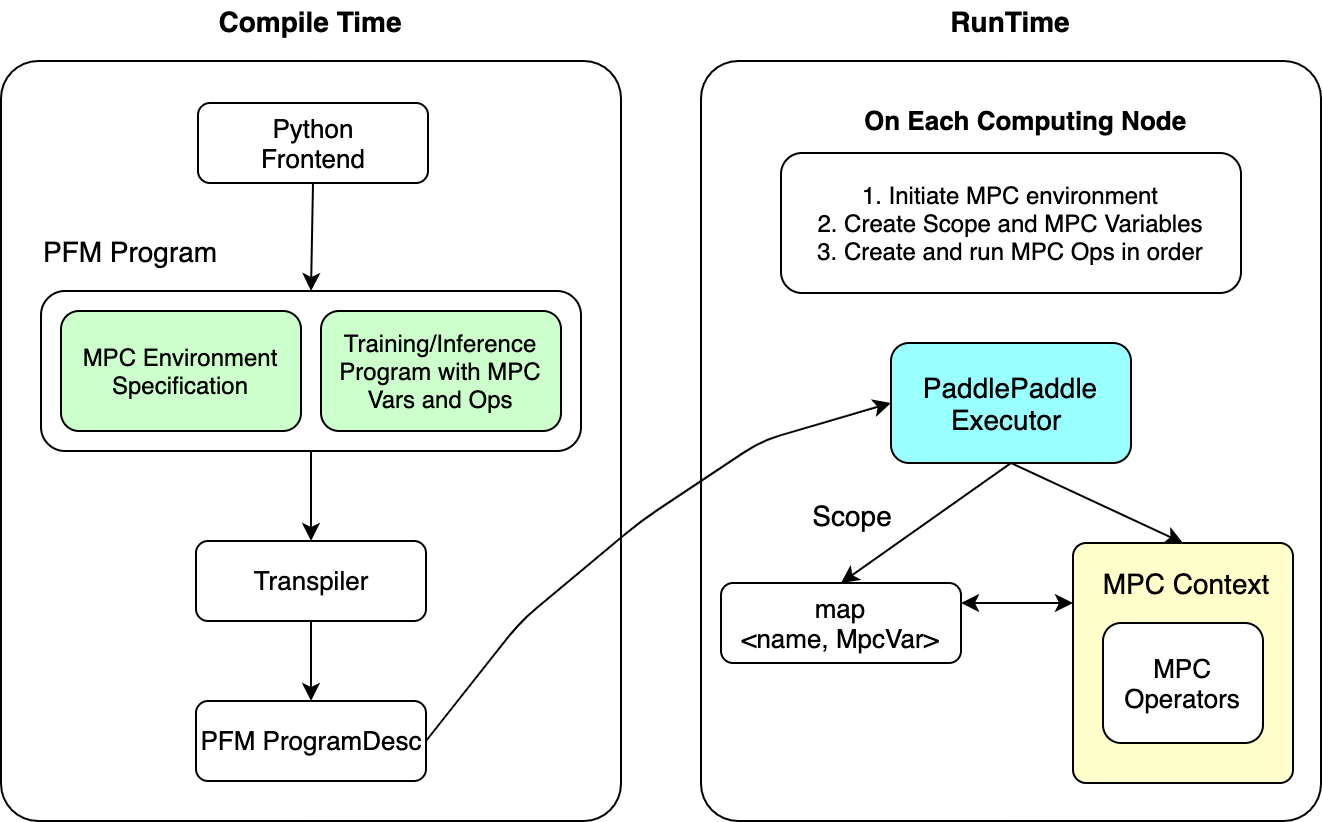

images/PFM-design.png

0 → 100644

144.5 KB

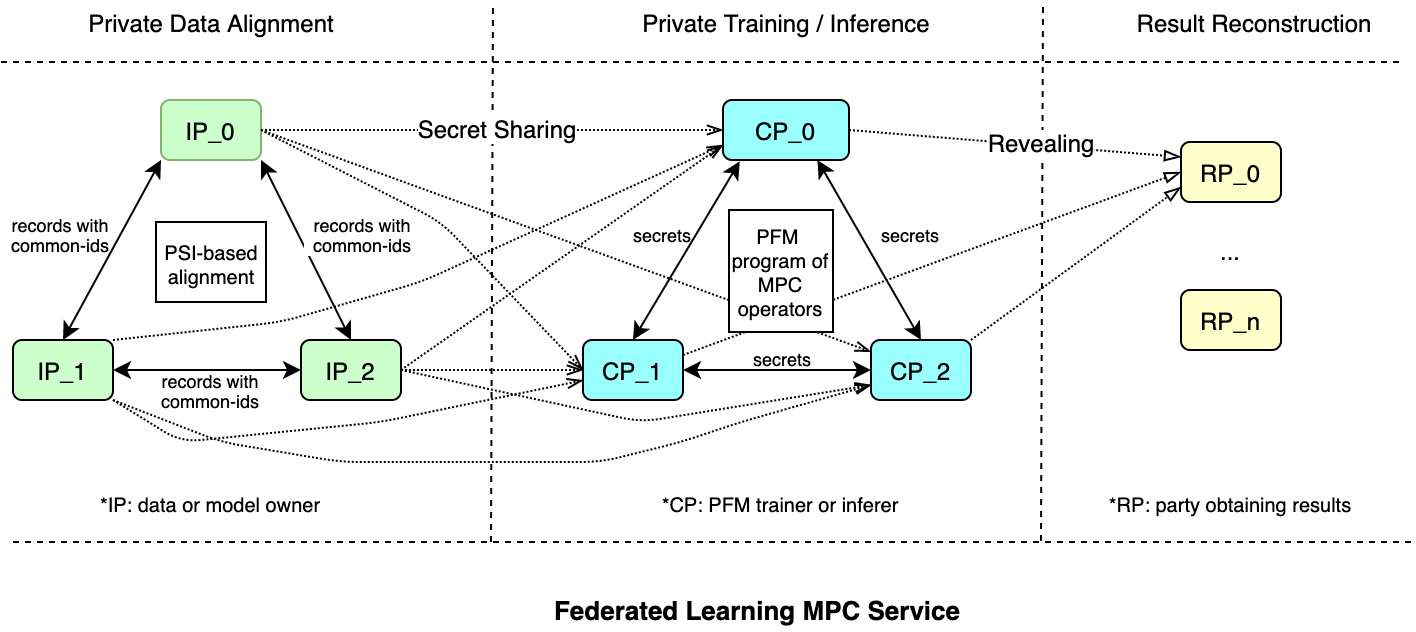

images/PFM-overview.png

0 → 100644

125.2 KB

Update document in new_version

144.5 KB

125.2 KB