Merge pull request #22 from qjing666/master

create dataset api and training demo for femnist

Showing

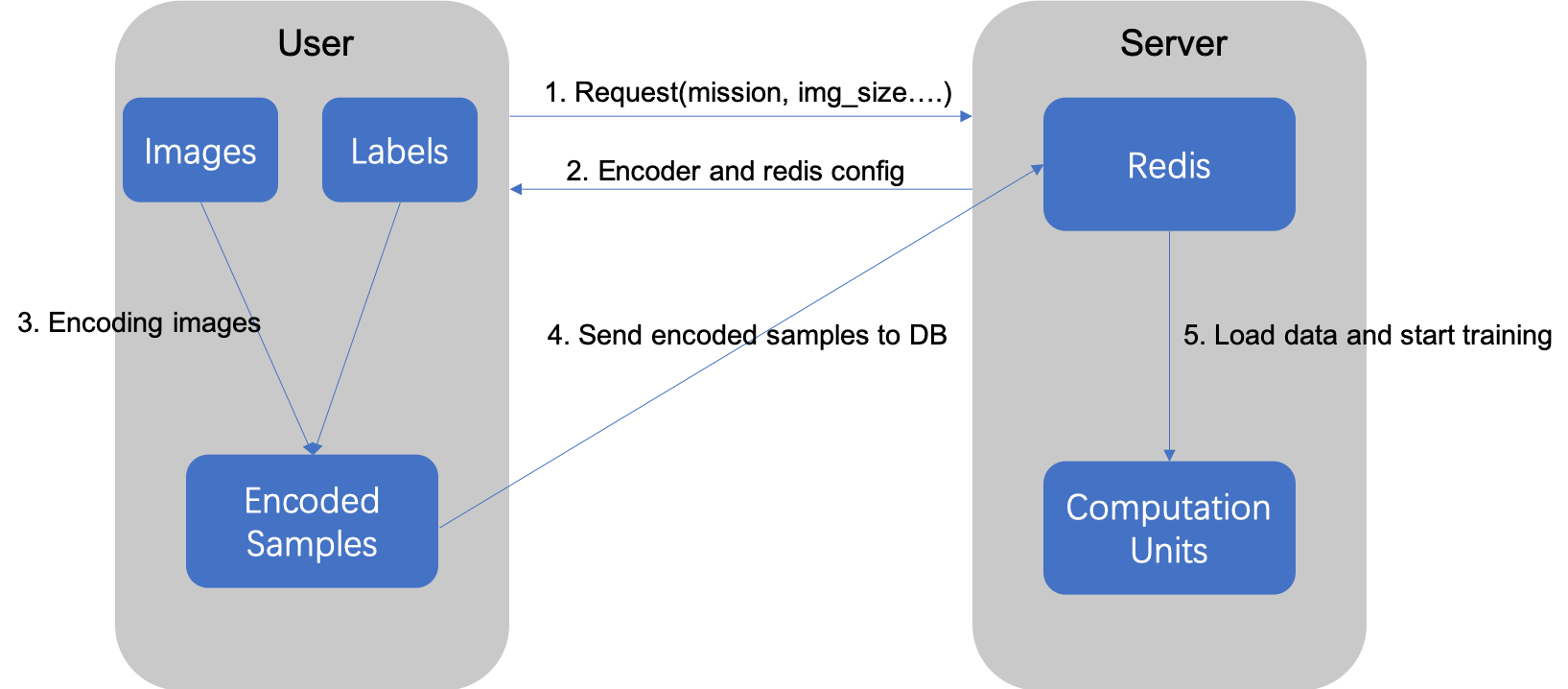

images/split_flow.png

0 → 100644

101.2 KB

paddle_fl/dataset/femnist.py

0 → 100644

create dataset api and training demo for femnist

101.2 KB