FasterRCNN: large gap between training mAP and evaluation mAP

Created by: GitLD

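Based on the Fruit dataset.

Training configuration: adapted from PaddleDetection/configs/faster_rcnn_se154_vd_fpn_s1x.yml. The main changes are switching the dataset to fruit-detection and setting the evaluation metric to VOC with 11Point mAP.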

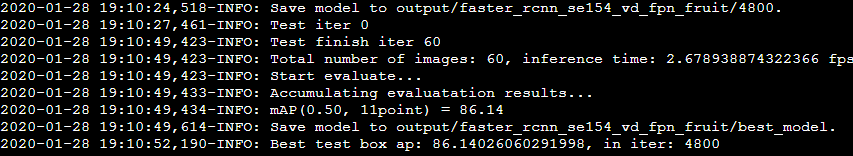

mAP reported during training:

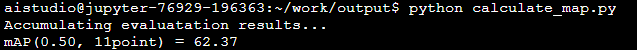

mAP from the actual evaluation:

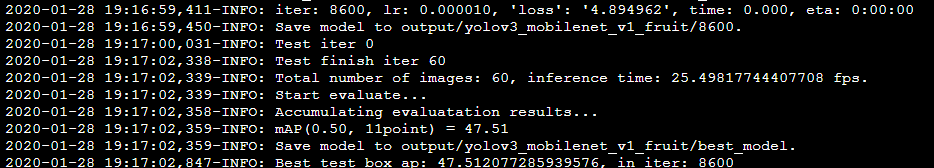

However, the same procedure shows no such discrepancy when training with the YoloV3 configuration:

Training mAP:

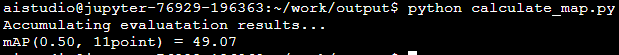

Evaluation mAP:

The evaluation code is as follows:

calculate_map.py

import os
import json
import numpy as np
import xml.etree.ElementTree as ET
from map_utils import DetectionMAP
import argparse


def parse_args():
    parser = argparse.ArgumentParser("Evaluation Parameters")
    parser.add_argument(
        '--anno_dir',
        type=str,
        default='/home/aistudio/work/insects/val/annotations/xmls',
        help='the directory of annotations')
    parser.add_argument(
        '--pred_result',
        type=str,
        default='./pred_results.json',
        help='the file path of prediction results')
    args = parser.parse_args()
    return args


args = parse_args()

# Directory containing the test-set annotations
annotations_dir = args.anno_dir
# File containing the prediction results
pred_result_file = args.pred_result
# pred_result_file stores the test results as a list covering all images, structured as:
# [[img_name, [[label, score, x1, y1, x2, y2], ..., [label, score, x1, y1, x2, y2]]], ...]
overlap_thresh = 0.5
map_type = '11point'
fruit_name = ['apple', 'orange', 'banana']

if __name__ == '__main__':
    # Map class names to 0-based class ids
    cname2cid = {}
    for i, item in enumerate(fruit_name):
        cname2cid[item] = i

    filename = pred_result_file
    with open(filename) as f:
        results = json.load(f)

    num_classes = len(fruit_name)
    detection_map = DetectionMAP(class_num=num_classes,
                                 overlap_thresh=overlap_thresh,
                                 map_type=map_type,
                                 is_bbox_normalized=False,
                                 evaluate_difficult=False)

    for result in results:
        image_name = str(result[0])
        bboxes = np.array(result[1]).astype('float32')
        anno_file = os.path.join(annotations_dir, image_name + '.xml')
        tree = ET.parse(anno_file)
        objs = tree.findall('object')
        im_w = float(tree.find('size').find('width').text)
        im_h = float(tree.find('size').find('height').text)
        gt_bbox = np.zeros((len(objs), 4), dtype=np.float32)
        gt_class = np.zeros((len(objs), 1), dtype=np.int32)
        difficult = np.zeros((len(objs), 1), dtype=np.int32)
        for i, obj in enumerate(objs):
            cname = obj.find('name').text
            gt_class[i][0] = cname2cid[cname]
            _difficult = int(obj.find('difficult').text)
            x1 = float(obj.find('bndbox').find('xmin').text)
            y1 = float(obj.find('bndbox').find('ymin').text)
            x2 = float(obj.find('bndbox').find('xmax').text)
            y2 = float(obj.find('bndbox').find('ymax').text)
            # Clip the ground-truth box to the image boundaries
            x1 = max(0, x1)
            y1 = max(0, y1)
            x2 = min(im_w - 1, x2)
            y2 = min(im_h - 1, y2)
            gt_bbox[i] = [x1, y1, x2, y2]
            difficult[i][0] = _difficult
        detection_map.update(bboxes, gt_bbox, gt_class, difficult)

    print("Accumulating evaluation results...")
    detection_map.accumulate()
    map_stat = 100. * detection_map.get_map()
    print("mAP({:.2f}, {}) = {:.2f}".format(overlap_thresh,
                                            map_type, map_stat))
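
The prediction file layout that calculate_map.py reads can be sketched as below. This is a minimal, hypothetical example: the image names, the box values, and the helper `check_pred_results` are assumptions made for illustration and are not part of the original scripts. The box order follows what `DetectionMAP.update` unpacks (`label, score, xmin, ymin, xmax, ymax`). The helper only checks that each entry has the expected shape and that every label is a valid index into `fruit_name`.

```python
import json

fruit_name = ['apple', 'orange', 'banana']

# Hypothetical sample in the layout calculate_map.py reads:
# [[img_name, [[label, score, xmin, ymin, xmax, ymax], ...]], ...]
sample_results = [
    ["fruit_001", [[0, 0.92, 10.0, 20.0, 110.0, 140.0],
                   [2, 0.35, 50.0, 60.0, 90.0, 120.0]]],
    ["fruit_002", []],  # an image with no detections
]


def check_pred_results(results, num_classes):
    """Return a list of human-readable problems found in `results`."""
    problems = []
    for entry in results:
        if len(entry) != 2:
            problems.append("entry is not [img_name, boxes]: {!r}".format(entry))
            continue
        img_name, boxes = entry
        for box in boxes:
            if len(box) != 6:
                problems.append("{}: box needs 6 values".format(img_name))
                continue
            label, score, xmin, ymin, xmax, ymax = box
            if not 0 <= int(label) < num_classes:
                problems.append("{}: label {} outside [0, {})".format(
                    img_name, label, num_classes))
            if xmax < xmin or ymax < ymin:
                problems.append("{}: box corners are not ordered".format(img_name))
    return problems


# Round-trip through JSON, since the inference script writes a JSON file.
loaded = json.loads(json.dumps(sample_results))
problems = check_pred_results(loaded, len(fruit_name))
print(problems)
```

If such a check reports labels outside the expected range on a real pred_results.json, the label ids written by the inference script and the ids in `cname2cid` may not share the same base, which could be worth ruling out before comparing the two mAP values.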

map_utils.py

from __future__ import absolute_import
from __future__ import division
from __future__ import print_function
from __future__ import unicode_literals

import sys
import numpy as np
import logging

logger = logging.getLogger(__name__)

__all__ = ['bbox_area', 'jaccard_overlap', 'DetectionMAP']


def bbox_area(bbox, is_bbox_normalized):
    """
    Calculate area of a bounding box
    """
    # Pixel-coordinate boxes include both end points, hence the +1
    norm = 1. - float(is_bbox_normalized)
    width = bbox[2] - bbox[0] + norm
    height = bbox[3] - bbox[1] + norm
    return width * height


def jaccard_overlap(pred, gt, is_bbox_normalized=False):
    """
    Calculate jaccard overlap ratio between two bounding boxes
    """
    if pred[0] >= gt[2] or pred[2] <= gt[0] or \
            pred[1] >= gt[3] or pred[3] <= gt[1]:
        return 0.
    inter_xmin = max(pred[0], gt[0])
    inter_ymin = max(pred[1], gt[1])
    inter_xmax = min(pred[2], gt[2])
    inter_ymax = min(pred[3], gt[3])
    inter_size = bbox_area([inter_xmin, inter_ymin, inter_xmax, inter_ymax],
                           is_bbox_normalized)
    pred_size = bbox_area(pred, is_bbox_normalized)
    gt_size = bbox_area(gt, is_bbox_normalized)
    overlap = float(inter_size) / (pred_size + gt_size - inter_size)
    return overlap

class DetectionMAP(object):
    """
    Calculate detection mean average precision.
    Currently supports two types: 11point and integral

    Args:
        class_num (int): the class number.
        overlap_thresh (float): The threshold of overlap
            ratio between prediction bounding box and
            ground truth bounding box for deciding
            true/false positive. Default 0.5.
        map_type (str): calculation method of mean average
            precision, currently supports '11point' and
            'integral'. Default '11point'.
        is_bbox_normalized (bool): whether bounding boxes
            are normalized to range[0, 1]. Default False.
        evaluate_difficult (bool): whether to evaluate
            difficult bounding boxes. Default False.
    """

    def __init__(self,
                 class_num,
                 overlap_thresh=0.5,
                 map_type='11point',
                 is_bbox_normalized=False,
                 evaluate_difficult=False):
        self.class_num = class_num
        self.overlap_thresh = overlap_thresh
        assert map_type in ['11point', 'integral'], \
            "map_type currently only support '11point' "\
            "and 'integral'"
        self.map_type = map_type
        self.is_bbox_normalized = is_bbox_normalized
        self.evaluate_difficult = evaluate_difficult
        self.reset()

    def update(self, bbox, gt_box, gt_label, difficult=None):
        """
        Update metric statistics from given prediction and ground
        truth information.
        """
        if difficult is None:
            difficult = np.zeros_like(gt_label)

        # record class gt count
        for gtl, diff in zip(gt_label, difficult):
            if self.evaluate_difficult or int(diff) == 0:
                self.class_gt_counts[int(np.array(gtl))] += 1

        # record class score positive
        visited = [False] * len(gt_label)
        for b in bbox:
            label, score, xmin, ymin, xmax, ymax = b.tolist()
            pred = [xmin, ymin, xmax, ymax]
            max_idx = -1
            max_overlap = -1.0
            for i, gl in enumerate(gt_label):
                if int(gl) == int(label):
                    overlap = jaccard_overlap(pred, gt_box[i],
                                              self.is_bbox_normalized)
                    #print('---------', int(gl), int(label), overlap)
                    if overlap > max_overlap:
                        max_overlap = overlap
                        max_idx = i

            if max_overlap > self.overlap_thresh:
                if self.evaluate_difficult or \
                        int(np.array(difficult[max_idx])) == 0:
                    if not visited[max_idx]:
                        self.class_score_poss[int(label)].append([score, 1.0])
                        visited[max_idx] = True
                    else:
                        self.class_score_poss[int(label)].append([score, 0.0])
            else:
                self.class_score_poss[int(label)].append([score, 0.0])

    def reset(self):
        """
        Reset metric statistics
        """
        self.class_score_poss = [[] for _ in range(self.class_num)]
        self.class_gt_counts = [0] * self.class_num
        self.mAP = None

    def accumulate(self):
        """
        Accumulate metric results and calculate mAP
        """
        mAP = 0.
        valid_cnt = 0
        for score_pos, count in zip(self.class_score_poss,
                                    self.class_gt_counts):
            if count == 0 or len(score_pos) == 0:
                continue

            accum_tp_list, accum_fp_list = \
                self._get_tp_fp_accum(score_pos)
            precision = []
            recall = []
            for ac_tp, ac_fp in zip(accum_tp_list, accum_fp_list):
                precision.append(float(ac_tp) / (ac_tp + ac_fp))
                recall.append(float(ac_tp) / count)

            if self.map_type == '11point':
                max_precisions = [0.] * 11
                start_idx = len(precision) - 1
                for j in range(10, -1, -1):
                    for i in range(start_idx, -1, -1):
                        if recall[i] < float(j) / 10.:
                            start_idx = i
                            if j > 0:
                                max_precisions[j - 1] = max_precisions[j]
                                break
                        else:
                            if max_precisions[j] < precision[i]:
                                max_precisions[j] = precision[i]
                mAP += sum(max_precisions) / 11.
                valid_cnt += 1
            elif self.map_type == 'integral':
                import math
                ap = 0.
                prev_recall = 0.
                for i in range(len(precision)):
                    recall_gap = math.fabs(recall[i] - prev_recall)
                    if recall_gap > 1e-6:
                        ap += precision[i] * recall_gap
                        prev_recall = recall[i]
                mAP += ap
                valid_cnt += 1
            else:
                logger.error("Unsupported mAP type {}".format(self.map_type))
                sys.exit(1)

        self.mAP = mAP / float(valid_cnt) if valid_cnt > 0 else mAP

    def get_map(self):
        """
        Get mAP result
        """
        if self.mAP is None:
            logger.error("mAP is not calculated.")
        return self.mAP

    def _get_tp_fp_accum(self, score_pos_list):
        """
        Calculate accumulating true/false positive results from
        [score, pos] records
        """
        sorted_list = sorted(score_pos_list, key=lambda s: s[0], reverse=True)
        accum_tp = 0
        accum_fp = 0
        accum_tp_list = []
        accum_fp_list = []
        for (score, pos) in sorted_list:
            accum_tp += int(pos)
            accum_tp_list.append(accum_tp)
            accum_fp += 1 - int(pos)
            accum_fp_list.append(accum_fp)
        return accum_tp_list, accum_fp_list

Inference script:

from __future__ import absolute_import
from __future__ import division
from __future__ import print_function

import os
import glob
import json

import numpy as np
from PIL import Image


def set_paddle_flags(**kwargs):
    for key, value in kwargs.items():
        if os.environ.get(key, None) is None:
            os.environ[key] = str(value)


# NOTE(paddle-dev): All of these flags should be set before
# `import paddle`. Otherwise, it would not take any effect.
set_paddle_flags(
    FLAGS_eager_delete_tensor_gb=0,  # enable GC to save memory
)

from paddle import fluid

from ppdet.utils.cli import print_total_cfg
from ppdet.core.workspace import load_config, merge_config, create
from ppdet.modeling.model_input import create_feed
from ppdet.data.data_feed import create_reader
from ppdet.utils.eval_utils import parse_fetches
from ppdet.utils.cli import ArgsParser
from ppdet.utils.check import check_gpu
from ppdet.utils.visualizer import visualize_results
import ppdet.utils.checkpoint as checkpoint

import logging
FORMAT = '%(asctime)s-%(levelname)s: %(message)s'
logging.basicConfig(level=logging.INFO, format=FORMAT)
logger = logging.getLogger(__name__)


def get_save_image_name(output_dir, image_path):
    """
    Get save image name from source image path.
    """
    if not os.path.exists(output_dir):
        os.makedirs(output_dir)
    image_name = os.path.split(image_path)[-1]
    name, ext = os.path.splitext(image_name)
    return os.path.join(output_dir, "{}".format(name)) + ext


def get_test_images(infer_dir, infer_img):
    """
    Get image path list in TEST mode
    """
    assert infer_img is not None or infer_dir is not None, \
        "--infer_img or --infer_dir should be set"
    assert infer_img is None or os.path.isfile(infer_img), \
        "{} is not a file".format(infer_img)
    assert infer_dir is None or os.path.isdir(infer_dir), \
        "{} is not a directory".format(infer_dir)
    images = []

    # infer_img has a higher priority
    if infer_img and os.path.isfile(infer_img):
        images.append(infer_img)
        return images

    infer_dir = os.path.abspath(infer_dir)
    assert os.path.isdir(infer_dir), \
        "infer_dir {} is not a directory".format(infer_dir)
    exts = ['jpg', 'jpeg', 'png', 'bmp']
    exts += [ext.upper() for ext in exts]
    for ext in exts:
        images.extend(glob.glob('{}/*.{}'.format(infer_dir, ext)))

    assert len(images) > 0, "no image found in {}".format(infer_dir)
    logger.info("Found {} inference images in total.".format(len(images)))

    return images

def main():
    cfg = load_config(FLAGS.config)

    if 'architecture' in cfg:
        main_arch = cfg.architecture
    else:
        raise ValueError("'architecture' not specified in config file.")

    merge_config(FLAGS.opt)

    # check if set use_gpu=True in paddlepaddle cpu version
    check_gpu(cfg.use_gpu)
    print_total_cfg(cfg)

    if 'test_feed' not in cfg:
        test_feed = create(main_arch + 'TestFeed')
    else:
        test_feed = create(cfg.test_feed)

    test_images = get_test_images(FLAGS.infer_dir, FLAGS.infer_img)
    test_feed.dataset.add_images(test_images)

    place = fluid.CUDAPlace(0) if cfg.use_gpu else fluid.CPUPlace()
    exe = fluid.Executor(place)

    model = create(main_arch)

    startup_prog = fluid.Program()
    infer_prog = fluid.Program()
    with fluid.program_guard(infer_prog, startup_prog):
        with fluid.unique_name.guard():
            _, feed_vars = create_feed(test_feed, use_pyreader=False)
            test_fetches = model.test(feed_vars)
    infer_prog = infer_prog.clone(True)

    reader = create_reader(test_feed)
    feeder = fluid.DataFeeder(place=place, feed_list=feed_vars.values())

    exe.run(startup_prog)
    if cfg.weights:
        checkpoint.load_params(exe, infer_prog, cfg.weights)

    # parse infer fetches
    assert cfg.metric in ['COCO', 'VOC', 'WIDERFACE'], \
        "unknown metric type {}".format(cfg.metric)
    extra_keys = []
    if cfg['metric'] == 'COCO':
        extra_keys = ['im_info', 'im_id', 'im_shape']
    if cfg['metric'] == 'VOC' or cfg['metric'] == 'WIDERFACE':
        extra_keys = ['im_id', 'im_shape']
    keys, values, _ = parse_fetches(test_fetches, infer_prog, extra_keys)

    # parse dataset category
    if cfg.metric == 'COCO':
        from ppdet.utils.coco_eval import bbox2out, mask2out, get_category_info
    if cfg.metric == "VOC":
        from ppdet.utils.voc_eval import bbox2out, get_category_info
    if cfg.metric == "WIDERFACE":
        from ppdet.utils.widerface_eval_utils import bbox2out, get_category_info

    anno_file = getattr(test_feed.dataset, 'annotation', None)
    with_background = getattr(test_feed, 'with_background', True)
    use_default_label = getattr(test_feed, 'use_default_label', False)
    clsid2catid, catid2name = get_category_info(anno_file, with_background,
                                                use_default_label)

    # whether output bbox is normalized in model output layer
    is_bbox_normalized = False
    if hasattr(model, 'is_bbox_normalized') and \
            callable(model.is_bbox_normalized):
        is_bbox_normalized = model.is_bbox_normalized()

    # use tb-paddle to log image
    if FLAGS.use_tb:
        from tb_paddle import SummaryWriter
        tb_writer = SummaryWriter(FLAGS.tb_log_dir)
        tb_image_step = 0
        tb_image_frame = 0  # each frame can display ten pictures at most.

    imid2path = reader.imid2path
    total_results = []
    for iter_id, data in enumerate(reader()):
        outs = exe.run(infer_prog,
                       feed=feeder.feed(data),
                       fetch_list=values,
                       return_numpy=False)
        res = {
            k: (np.array(v), v.recursive_sequence_lengths())
            for k, v in zip(keys, outs)
        }
        logger.info('Infer iter {}'.format(iter_id))

        bbox_results = None
        mask_results = None
        if 'bbox' in res:
            bbox_results = bbox2out([res], clsid2catid, is_bbox_normalized)
        if 'mask' in res:
            mask_results = mask2out([res], clsid2catid,
                                    model.mask_head.resolution)
        print(f"Num of BBox : {len(bbox_results)}")

        # Create json
        # Note: boxes are not filtered by im_id below, so this assumes
        # one image per batch.
        im_ids = res['im_id'][0]
        result = []
        for im_id in im_ids:
            image_path = imid2path[int(im_id)]
            img_name = image_path.split('/')[-1].split('.')[0]
            result.append(img_name)
            bbox_list = []
            for dt in np.array(bbox_results):
                catid, bbox, score = dt['category_id'], dt['bbox'], dt['score']
                # bbox is [xmin, ymin, w, h]; convert to corner format
                xmin, ymin, w, h = bbox
                xmax = xmin + w
                ymax = ymin + h
                bbox_list.append([catid, score, xmin, ymin, xmax, ymax])
            result.append(bbox_list)
            total_results.append(result)

    json.dump(total_results, open(os.path.join(FLAGS.output_dir, 'pred_results.json'), 'w'))
    print("Output finish!")

if __name__ == '__main__':
    parser = ArgsParser()
    parser.add_argument(
        "--infer_dir",
        type=str,
        default=None,
        help="Directory for images to perform inference on.")
    parser.add_argument(
        "--infer_img",
        type=str,
        default=None,
        help="Image path, has higher priority over --infer_dir")
    parser.add_argument(
        "--output_dir",
        type=str,
        default="output",
        help="Directory for storing the output visualization files.")
    parser.add_argument(
        "--draw_threshold",
        type=float,
        default=0.5,
        help="Threshold to reserve the result for visualization.")
    parser.add_argument(
        "--use_tb",
        type=bool,
        default=False,
        help="whether to record the data to Tensorboard.")
    parser.add_argument(
        '--tb_log_dir',
        type=str,
        default="tb_log_dir/image",
        help='Tensorboard logging directory for image.')
    FLAGS = parser.parse_args()
    main()
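
For reference when comparing the two mAP numbers, here is a small self-contained sketch of the 11-point interpolated AP that `map_type = '11point'` refers to. It is a simplified re-implementation written for illustration, not the `DetectionMAP` code from map_utils.py; the function name `eleven_point_ap` and the toy detections are assumptions.

```python
def eleven_point_ap(score_pos, gt_count):
    """11-point interpolated AP from [score, is_true_positive] records."""
    # Rank detections by descending confidence score.
    ranked = sorted(score_pos, key=lambda s: s[0], reverse=True)
    tp = 0
    precision, recall = [], []
    for rank, (_, pos) in enumerate(ranked, start=1):
        tp += int(pos)
        precision.append(tp / rank)
        recall.append(tp / gt_count)

    ap = 0.0
    for j in range(11):
        threshold = j / 10.0
        # Best precision among points whose recall reaches the threshold.
        candidates = [p for p, r in zip(precision, recall) if r >= threshold]
        ap += max(candidates) if candidates else 0.0
    return ap / 11.0


# Toy example: 2 ground-truth boxes, 3 detections for one class.
records = [[0.9, 1.0], [0.8, 0.0], [0.7, 1.0]]
ap = eleven_point_ap(records, gt_count=2)
print("AP = {:.4f}".format(ap))  # AP = 0.8485
```

In this toy case the precision is 1.0 for recall thresholds 0.0 through 0.5 and 2/3 for thresholds 0.6 through 1.0, giving (6 × 1 + 5 × 2/3) / 11 = 28/33. Running the same records through both the training-time evaluator and calculate_map.py is one way to check whether the two pipelines disagree on the metric itself or on the inputs they feed to it.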