merge

Showing

README_cn.md

0 → 100644

README_en.md

已删除

100644 → 0

configs/ppyolo/README.md

0 → 100644

configs/ppyolo/README_cn.md

0 → 100644

configs/ppyolo/ppyolo.yml

0 → 100644

configs/ppyolo/ppyolo_2x.yml

0 → 100644

configs/ppyolo/ppyolo_reader.yml

0 → 100644

configs/ppyolo/ppyolo_test.yml

0 → 100644

configs/ppyolo/ppyolo_tiny.yml

0 → 100755

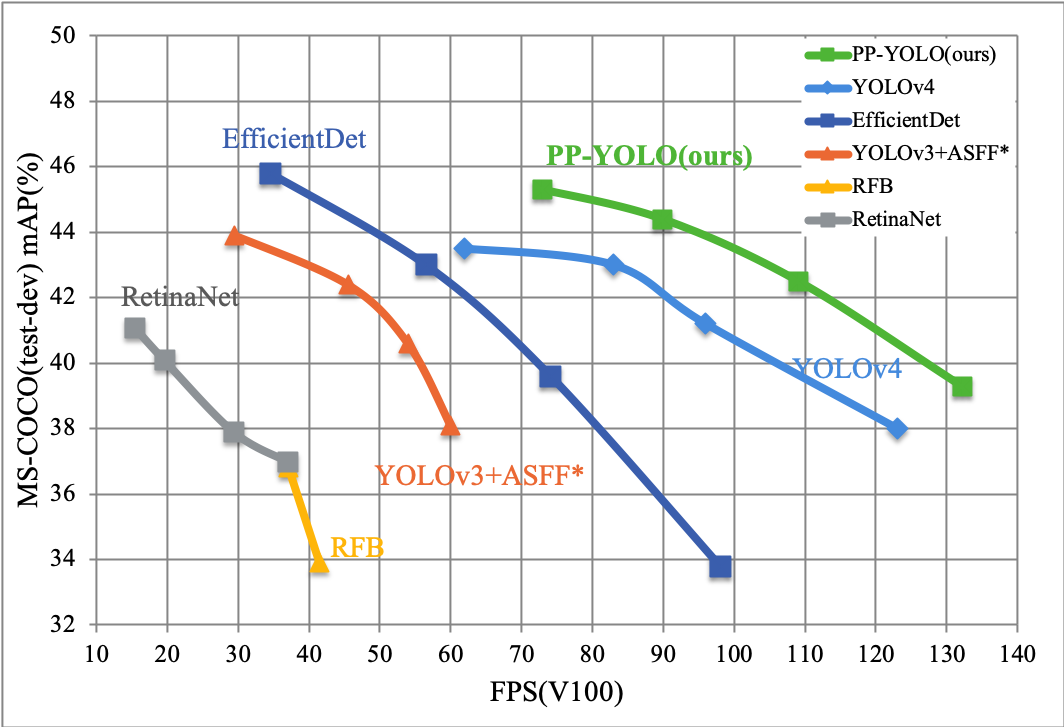

docs/images/ppyolo_map_fps.png

0 → 100644

131.9 KB