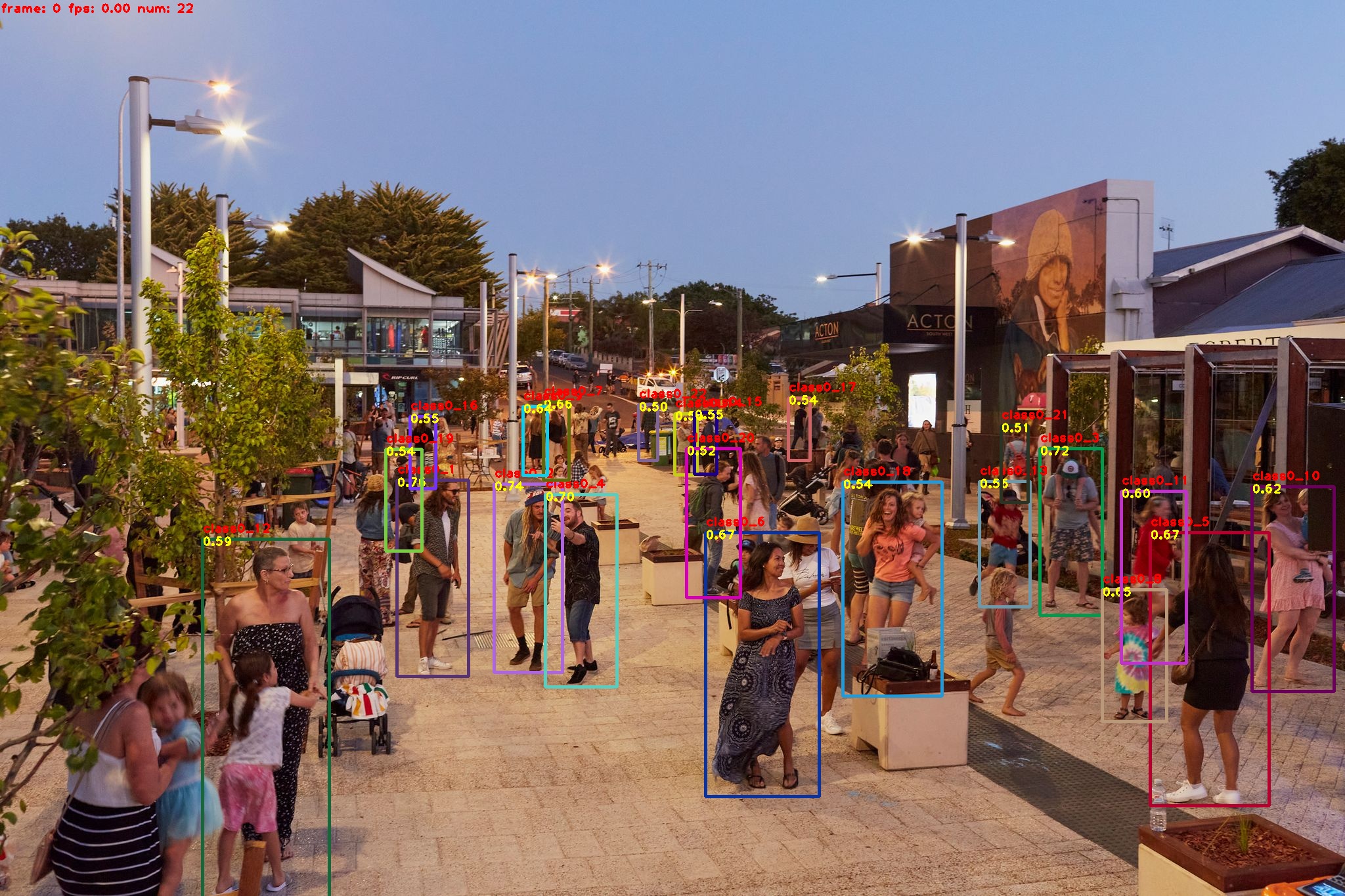

[Tutorial] Added a tutorial for OpenVINO with a demonstration for FairMOT model (#5368)

* Added an OpenVINO FairMOT tutorial.
* Added a Chinese version of the README.
* Removed unused code.
