solve conflicts

Showing

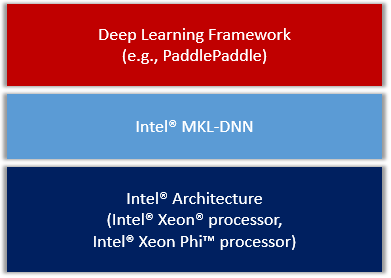

doc/design/mkldnn/README.MD

0 → 100644

9.7 KB

paddle/cuda/src/hl_batch_norm.cu

0 → 100644

paddle/framework/attribute.cc

0 → 100644

This diff is collapsed.

paddle/operators/.clang-format

0 → 100644

This diff is collapsed.

paddle/pybind/CMakeLists.txt

Deleted

100644 → 0

This diff is collapsed.

paddle/scripts/submit_local.sh.in

100644 → 100755

File mode changed from 100644 to 100755

paddle/setup.py.in

Deleted

100644 → 0

This diff is collapsed.

File moved

This diff is collapsed.