Update PicoDet and GFl (#4044)

* Update PicoDet and GFl

configs/gfl/gfl_r18vd_1x_coco.yml

0 → 100644

configs/gfl/gfl_r34vd_1x_coco.yml

0 → 100644

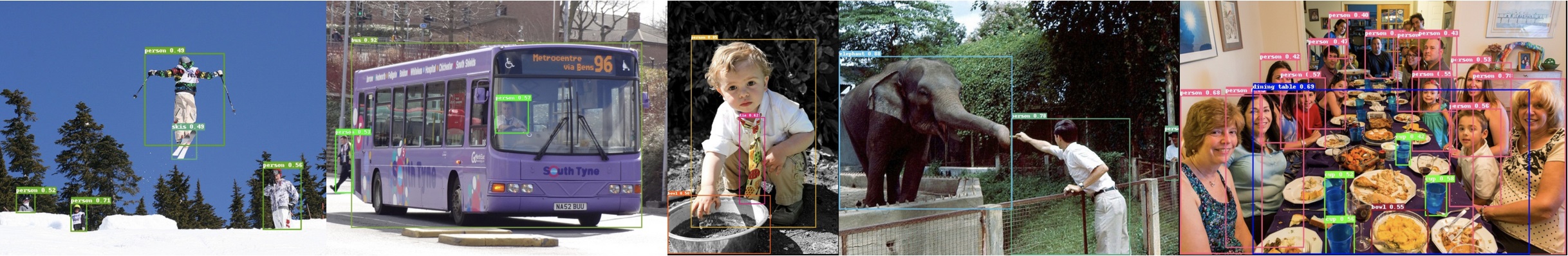

docs/images/picedet_demo.jpeg

0 → 100644

428.2 KB