Merge branch 'develop' of https://github.com/PaddlePaddle/Paddle into cross_channel_norm

doc/design/kernel_hint_design.md

0 → 100644

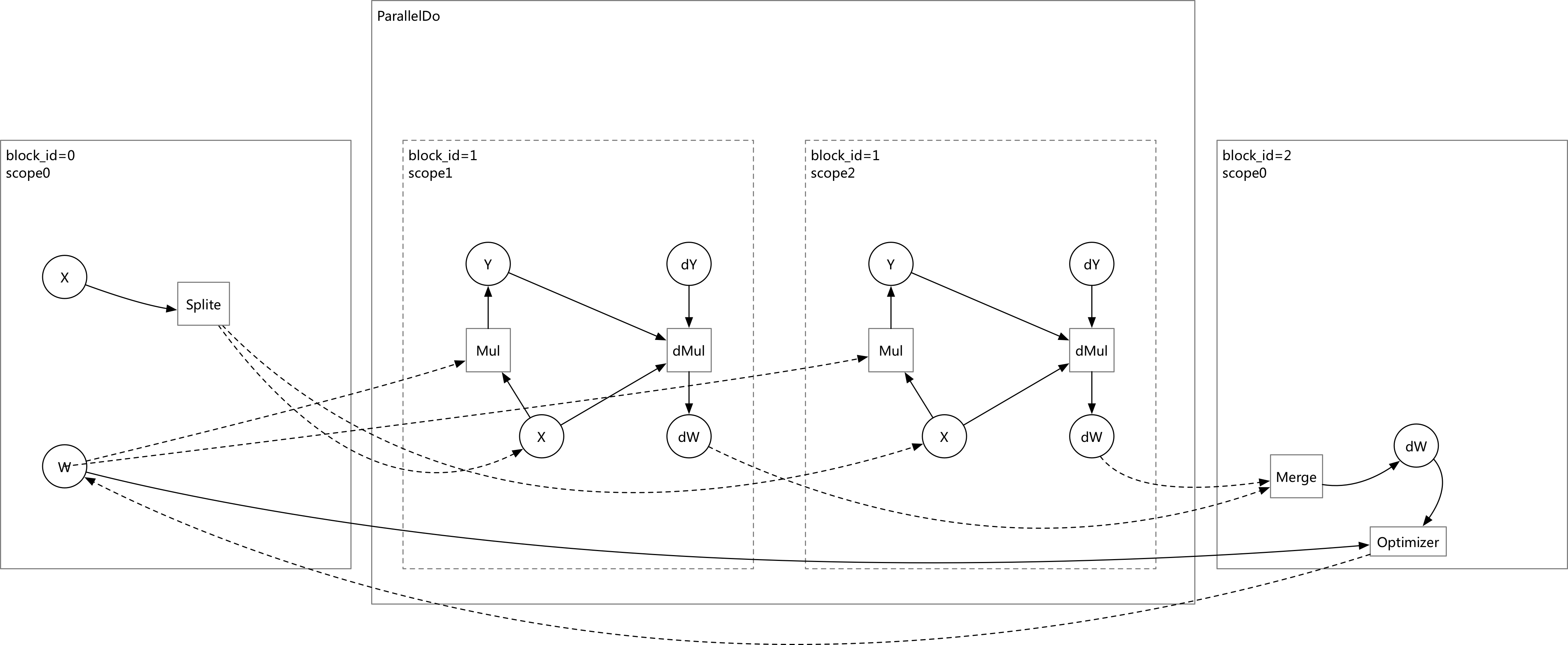

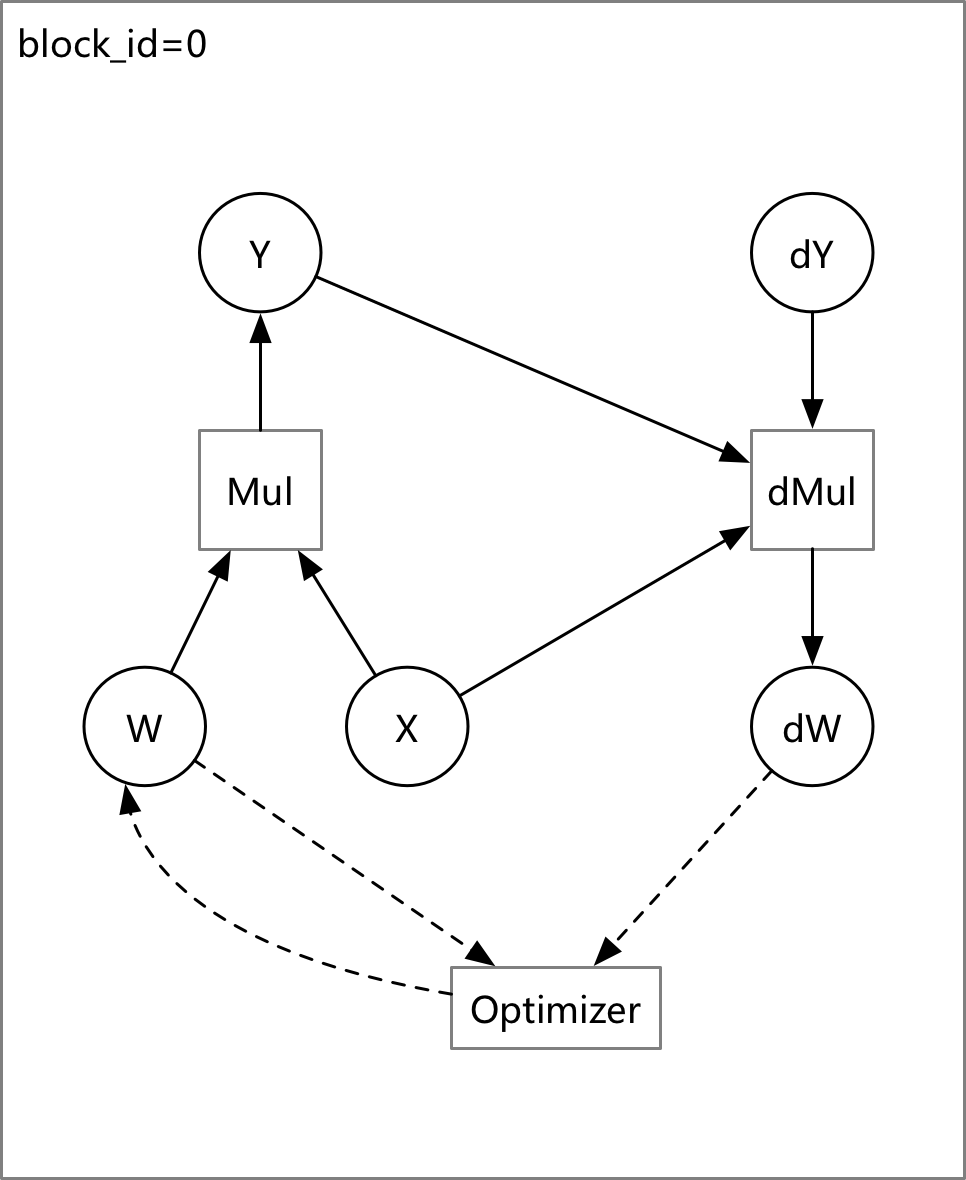

doc/design/refactor/multi_cpu.md

0 → 100644

File added

350.4 KB

76.3 KB

doc/design/switch_kernel.md

0 → 100644

paddle/framework/data_layout.h

0 → 100644

paddle/framework/library_type.h

0 → 100644

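File moved

This diff is collapsed.

File moved

This diff is collapsed.

File moved

This diff is collapsed.

This diff is collapsed.

This diff is collapsed.

This diff is collapsed.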