Merge branch 'develop' of https://github.com/PaddlePaddle/Paddle into fix_warning

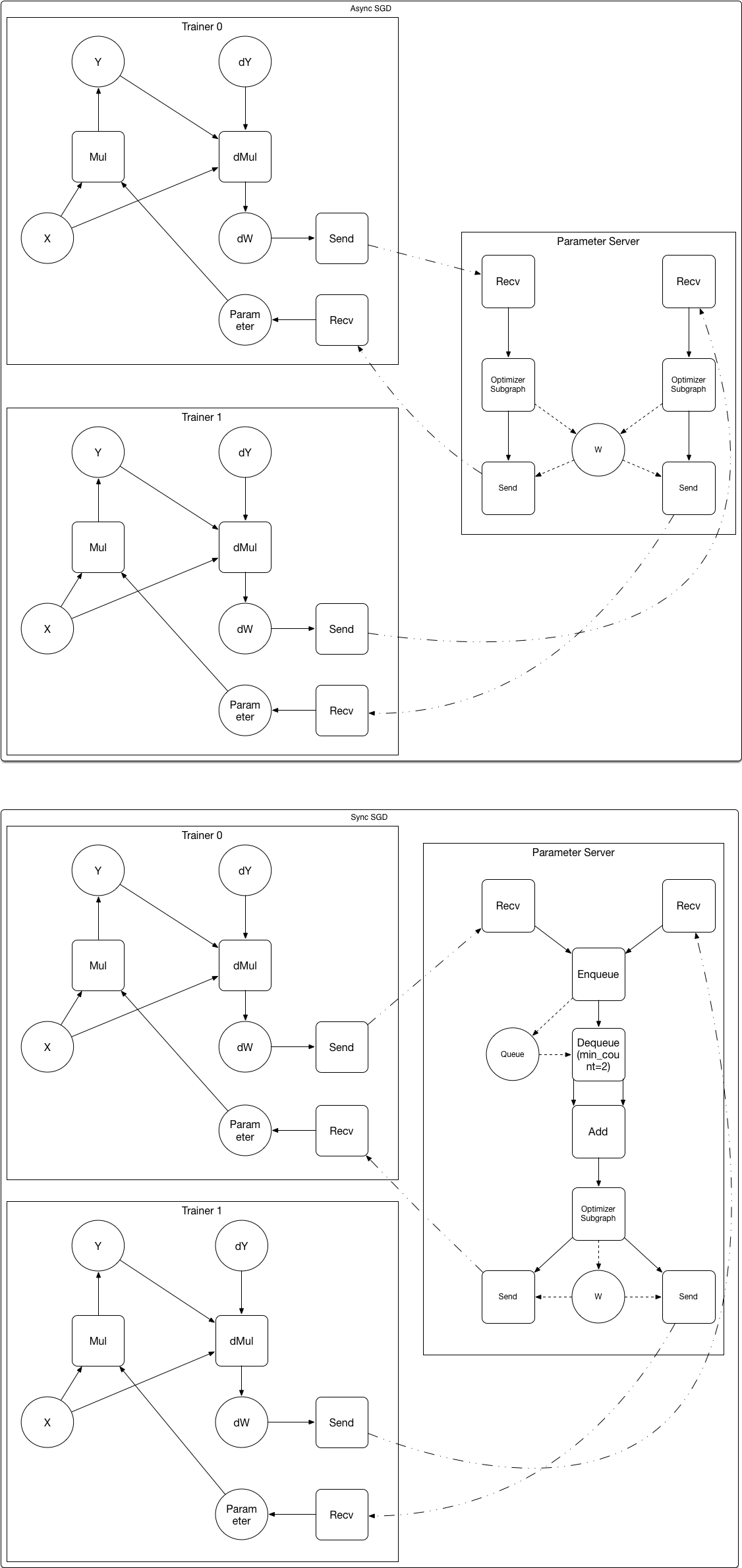

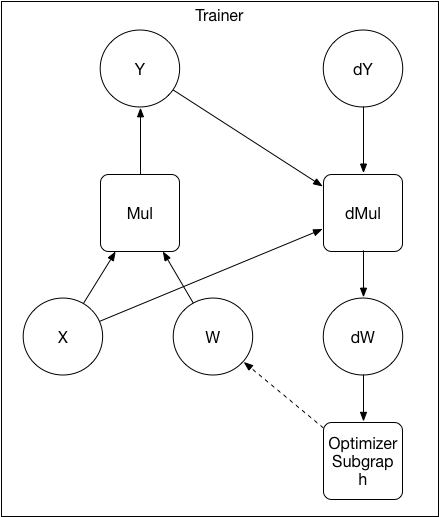

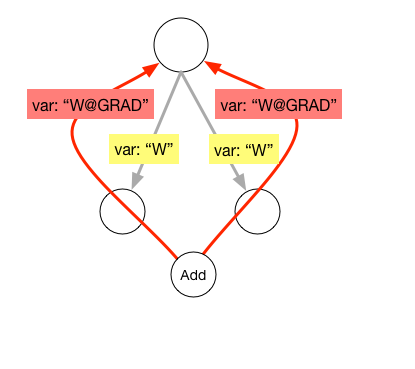

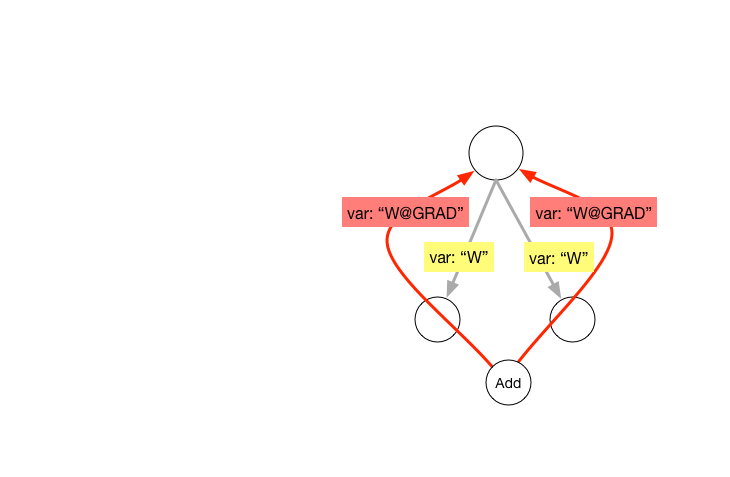

doc/design/ops/dist_train.md

0 → 100644

File added

doc/design/ops/src/dist-graph.png

0 → 100644

222.2 KB

File added

27.9 KB

paddle/operators/top_k_op.cc

0 → 100644

paddle/operators/top_k_op.cu

0 → 100644

paddle/operators/top_k_op.h

0 → 100644