Merge branch 'master' of https://github.com/paddlepaddle/PaddleDetection into add_solov2_new

README.md

Deleted

100644 → 0

README.md

0 → 120000

README_en.md

0 → 100644

demo/road554.png

0 → 100644

188.8 KB

deploy/serving/README.md

0 → 100644

deploy/serving/test_client.py

0 → 100644

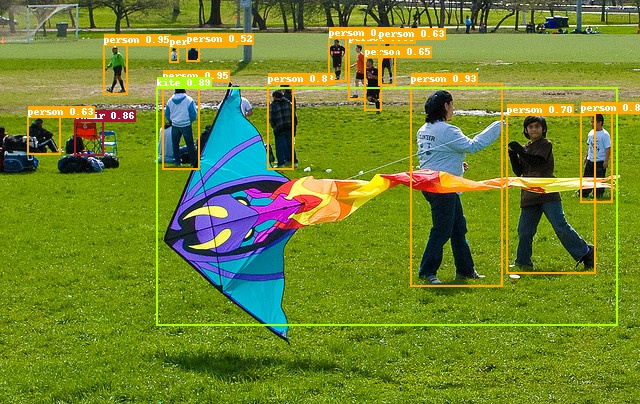

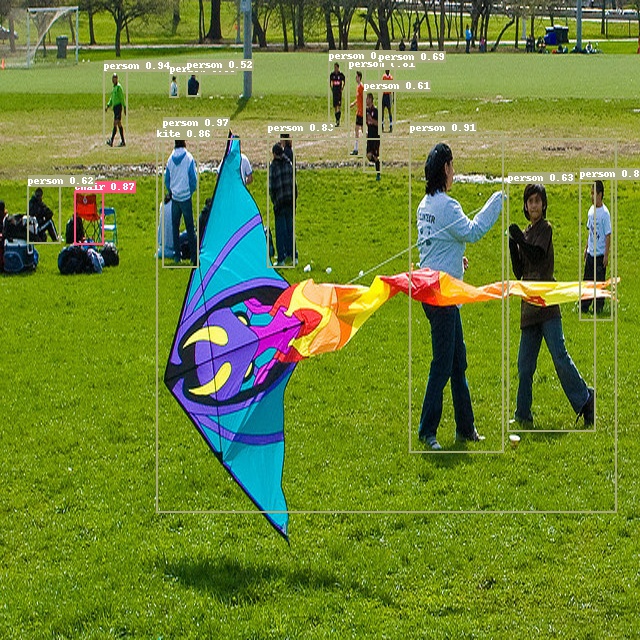

docs/images/000000014439.jpg

0 → 100644

202.7 KB

273.6 KB

docs/images/football.gif

0 → 100644

The image diff is too large to display. You can view the blob instead.

docs/images/road554.png

0 → 100644

142.3 KB

docs/tutorials/PrepareDataSet.md

0 → 100644

tools/anchor_cluster.py

0 → 100644