Merge pull request #1652 from RainFrost1/ppshitu_lite

Add PP-ShiTu Lite

deploy/lite_shitu/Makefile

0 → 100644

deploy/lite_shitu/README.md

0 → 100644

deploy/lite_shitu/include/utils.h

0 → 100644

deploy/lite_shitu/src/main.cc

0 → 100644

deploy/lite_shitu/src/utils.cc

0 → 100644

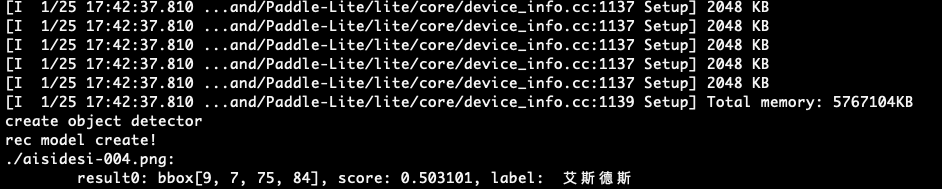

docs/images/ppshitu_lite_demo.png

0 → 100644

53.7 KB