Merge branch 'dygraph' of https://github.com/PaddlePaddle/PaddleClas into dygraph

Showing

.clang_format.hook

0 → 100644

This diff is collapsed.

README_cn.md

0 → 100644

This diff is collapsed.

configs/Xception/Xception41.yaml

0 → 100644

configs/Xception/Xception65.yaml

0 → 100644

configs/Xception/Xception71.yaml

0 → 100644

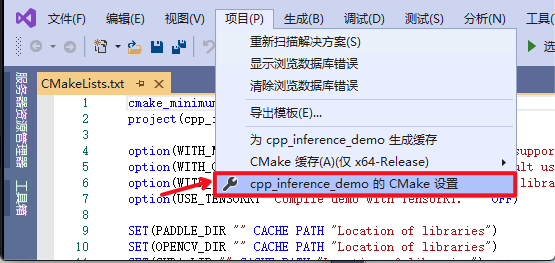

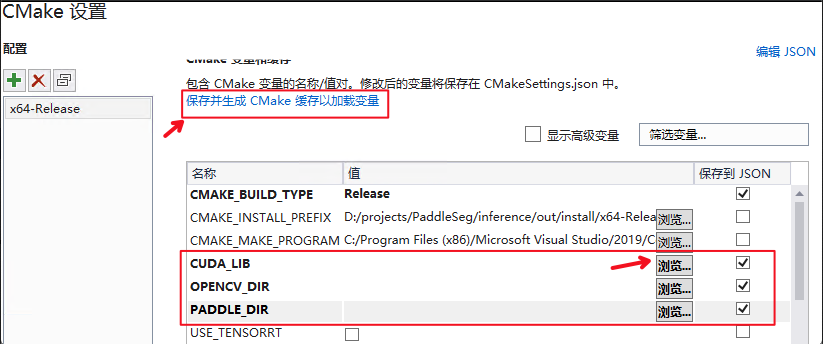

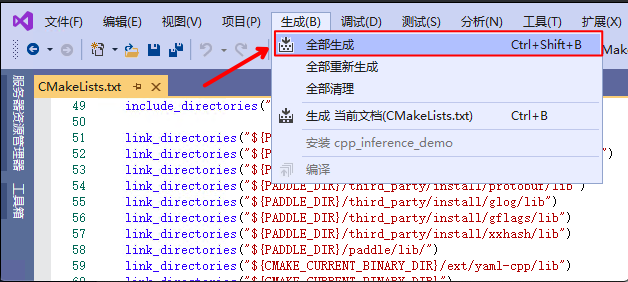

deploy/cpp_infer/CMakeLists.txt

0 → 100755

125.7 KB

28.5 KB

75.3 KB

84.3 KB

56.9 KB

44.9 KB

62.4 KB

83.3 KB

deploy/cpp_infer/include/cls.h

0 → 100644

deploy/cpp_infer/include/config.h

0 → 100644

deploy/cpp_infer/readme.md

0 → 100644

deploy/cpp_infer/readme_en.md

0 → 100644

deploy/cpp_infer/src/cls.cpp

0 → 100644

deploy/cpp_infer/src/config.cpp

0 → 100755

deploy/cpp_infer/src/main.cpp

0 → 100644

deploy/cpp_infer/src/utility.cpp

0 → 100644

deploy/cpp_infer/tools/build.sh

0 → 100755

deploy/cpp_infer/tools/config.txt

0 → 100755

deploy/cpp_infer/tools/run.sh

0 → 100755

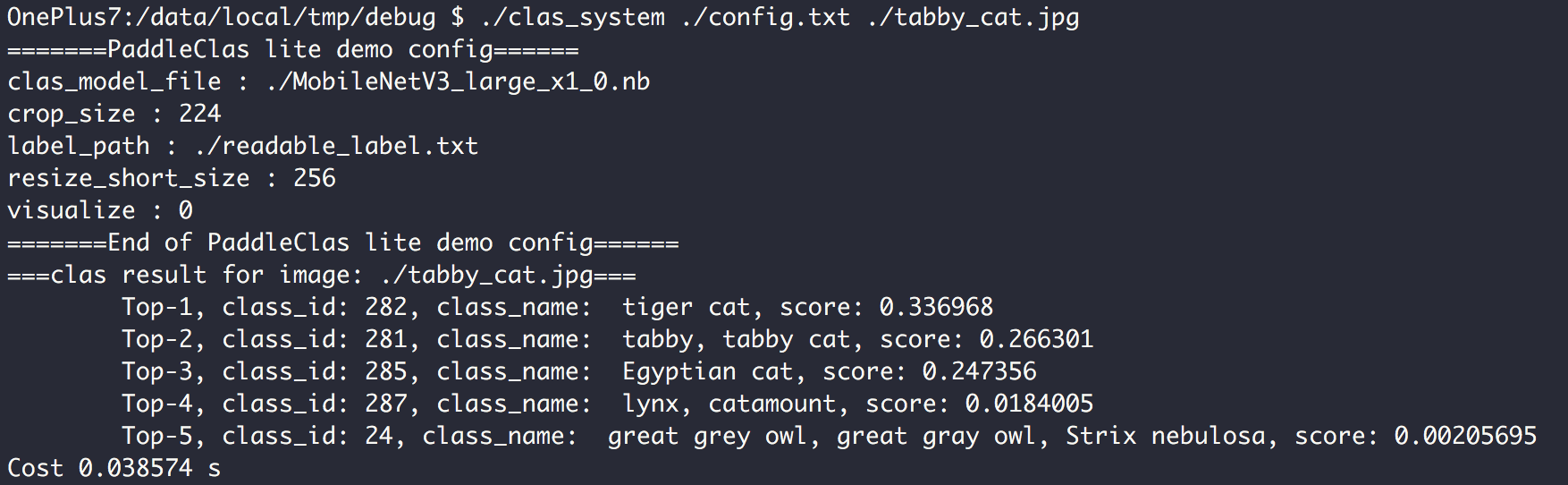

deploy/lite/Makefile

0 → 100644

deploy/lite/config.txt

0 → 100644

142.7 KB

deploy/lite/imgs/tabby_cat.jpg

0 → 100644

24.3 KB

deploy/lite/prepare.sh

0 → 100644

deploy/lite/readme.md

0 → 100644

deploy/lite/readme_en.md

0 → 100644

This diff is collapsed.

docs/en/competition_support_en.md

0 → 100644

docs/en/faq_en.md

0 → 100644

This diff is collapsed.

docs/en/models/DPN_DenseNet_en.md

0 → 100644

This diff is collapsed.

This diff is collapsed.

docs/en/models/HRNet_en.md

0 → 100644

This diff is collapsed.

docs/en/models/Inception_en.md

0 → 100644

This diff is collapsed.

docs/en/models/Mobile_en.md

0 → 100644

This diff is collapsed.

docs/en/models/Others_en.md

0 → 100644

This diff is collapsed.

This diff is collapsed.

This diff is collapsed.

docs/en/models/Tricks_en.md

0 → 100644

This diff is collapsed.

docs/en/models/models_intro_en.md

0 → 100644

This diff is collapsed.

docs/en/tutorials/config_en.md

0 → 100644

This diff is collapsed.

docs/en/tutorials/data_en.md

0 → 100644

This diff is collapsed.

This diff is collapsed.

docs/en/tutorials/install_en.md

0 → 100644

This diff is collapsed.

This diff is collapsed.

docs/en/update_history_en.md

0 → 100644

This diff is collapsed.


1.3 MB


This diff is collapsed.

This diff is collapsed.

This diff is collapsed.

This diff is collapsed.

tools/benchmark/benchmark.sh

0 → 100644

This diff is collapsed.

tools/benchmark/benchmark_acc.py

0 → 100644

This diff is collapsed.

This diff is collapsed.

This diff is collapsed.

This diff is collapsed.

This diff is collapsed.

This diff is collapsed.