update code

Showing

docs/en/models/MobileViT_en.md

0 → 100644

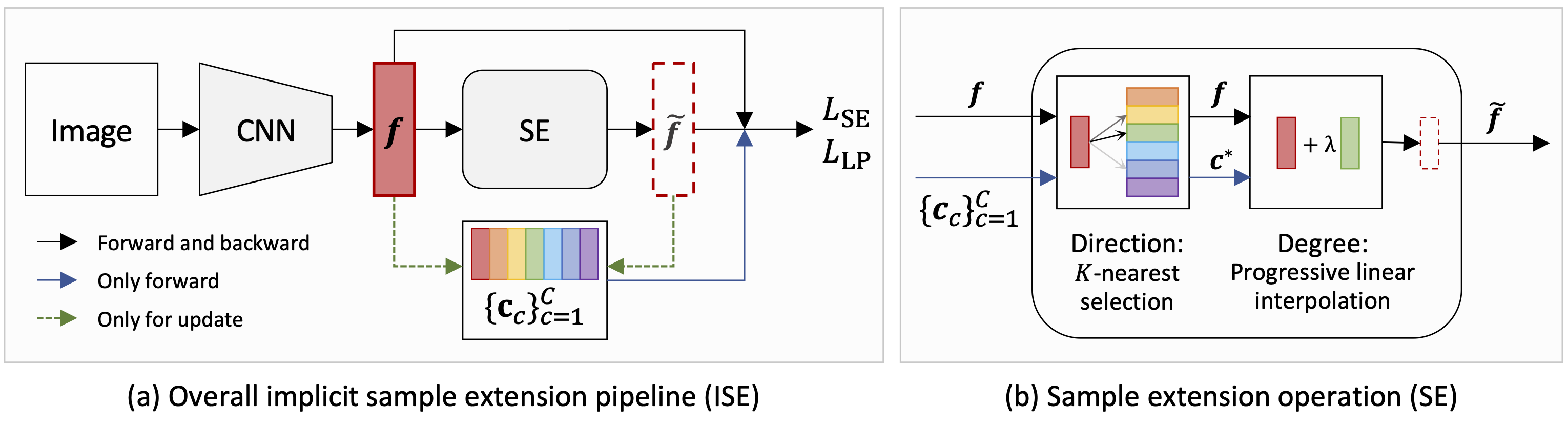

docs/images/ISE_pipeline.png

0 → 100644

177.5 KB

docs/zh_CN/models/MobileViT.md

0 → 100644

961.8 KB

文件已移动

文件已移动

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

test_tipc/config/VAN/VAN_tiny.txt

0 → 100644

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

test_tipc/prepare_lite_cpp.sh

已删除

100644 → 0

此差异已折叠。

此差异已折叠。

此差异已折叠。