Merge branch 'develop' into add_ShiTuV2_tipc

Showing

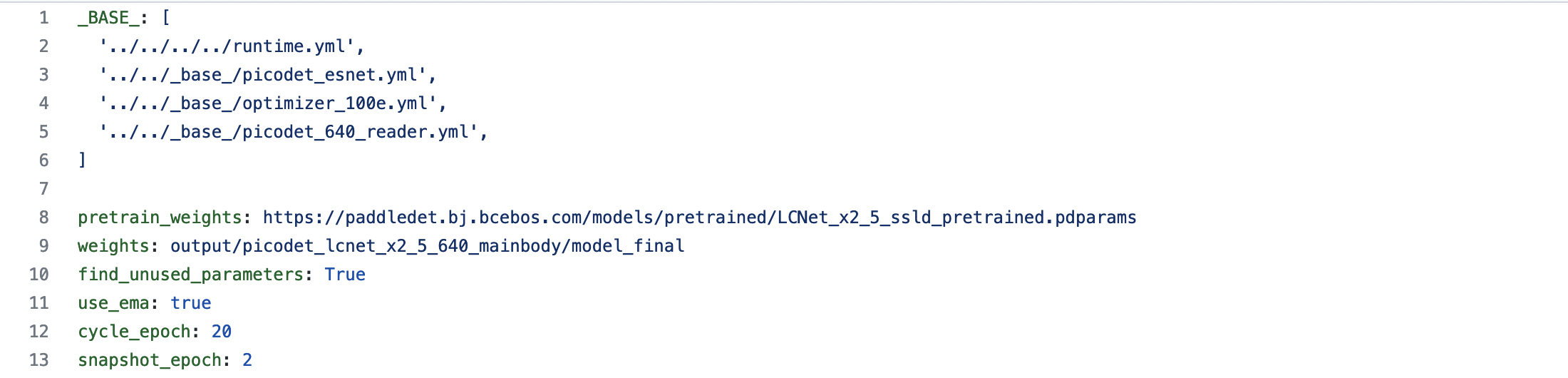

deploy/lite_shitu/README.md: deleted (mode 100644 → 0)

deploy/lite_shitu/README.md: re-added as a symbolic link (mode 0 → 120000)

