Created by: Joejiong

PR types

Function optimization

PR changes

OPs

Describe

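- Merge `elementwise_pow` and `pow` into a single API named `pow` that is fully compatible with both `elementwise_pow` and `pow`.

- Fix `pow` so that `float64 <- pow(int64, float64)` works in both dynamic and static graph modes.

- Support `float64 <- pow(float64, int64)` in both dynamic and static graph modes.

- Accept plain Python numbers (`float`, `int`) as well as Paddle data types (`Variable` and `Tensor`) as the exponent `y`.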
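The changes listed above amount to a dispatch on the type of the exponent `y`. The sketch below models that rule in plain Python so it can be read and run without Paddle: a Python `int`/`float` exponent takes a scalar path, a tensor exponent takes an element-wise path, mixed dtypes are computed in `float64`, and anything else raises `TypeError`. `FakeTensor`, `pow_dispatch`, and the "promote mixed dtypes to float64" rule are assumptions made for illustration; this is not Paddle's actual implementation.

```python
from dataclasses import dataclass
from typing import List, Union

Number = Union[int, float]


@dataclass
class FakeTensor:
    """Hypothetical stand-in for a Paddle Tensor: flat values plus a dtype name."""
    data: List[Number]
    dtype: str


def _cast(t: FakeTensor, dtype: str) -> FakeTensor:
    """Convert every element to Python float (float dtypes) or int (int dtypes)."""
    conv = float if dtype.startswith("float") else int
    return FakeTensor([conv(v) for v in t.data], dtype)


def pow_dispatch(x, y) -> FakeTensor:
    """Toy model of the dispatch rule on the type of the exponent y."""
    if not isinstance(x, FakeTensor):
        raise TypeError("x must be a tensor, got %s" % type(x).__name__)
    if isinstance(y, (int, float)):
        # Scalar path (assumed): raise each element to y and keep x's dtype,
        # as in the float ** scalar and int ** int-scalar cases tested in this PR.
        return _cast(FakeTensor([v ** y for v in x.data], x.dtype), x.dtype)
    if isinstance(y, FakeTensor):
        if len(x.data) != len(y.data):
            # Broadcasting is deliberately left out of this sketch.
            raise ValueError("x and y must have the same length in this sketch")
        if x.dtype != y.dtype:
            # Assumed promotion rule: mixed dtypes are computed in float64, giving
            # float64 <- pow(int64, float64) and float64 <- pow(float64, int64).
            x, y = _cast(x, "float64"), _cast(y, "float64")
        values = [a ** b for a, b in zip(x.data, y.data)]
        return _cast(FakeTensor(values, x.dtype), x.dtype)
    raise TypeError("y must be a scalar or a tensor, got %s" % type(y).__name__)
```

With this model, `pow_dispatch(FakeTensor([1, 2, 3], "int64"), FakeTensor([0.5, 2.0, 1.0], "float64"))` returns the values `[1.0, 4.0, 3.0]` with dtype `"float64"`.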

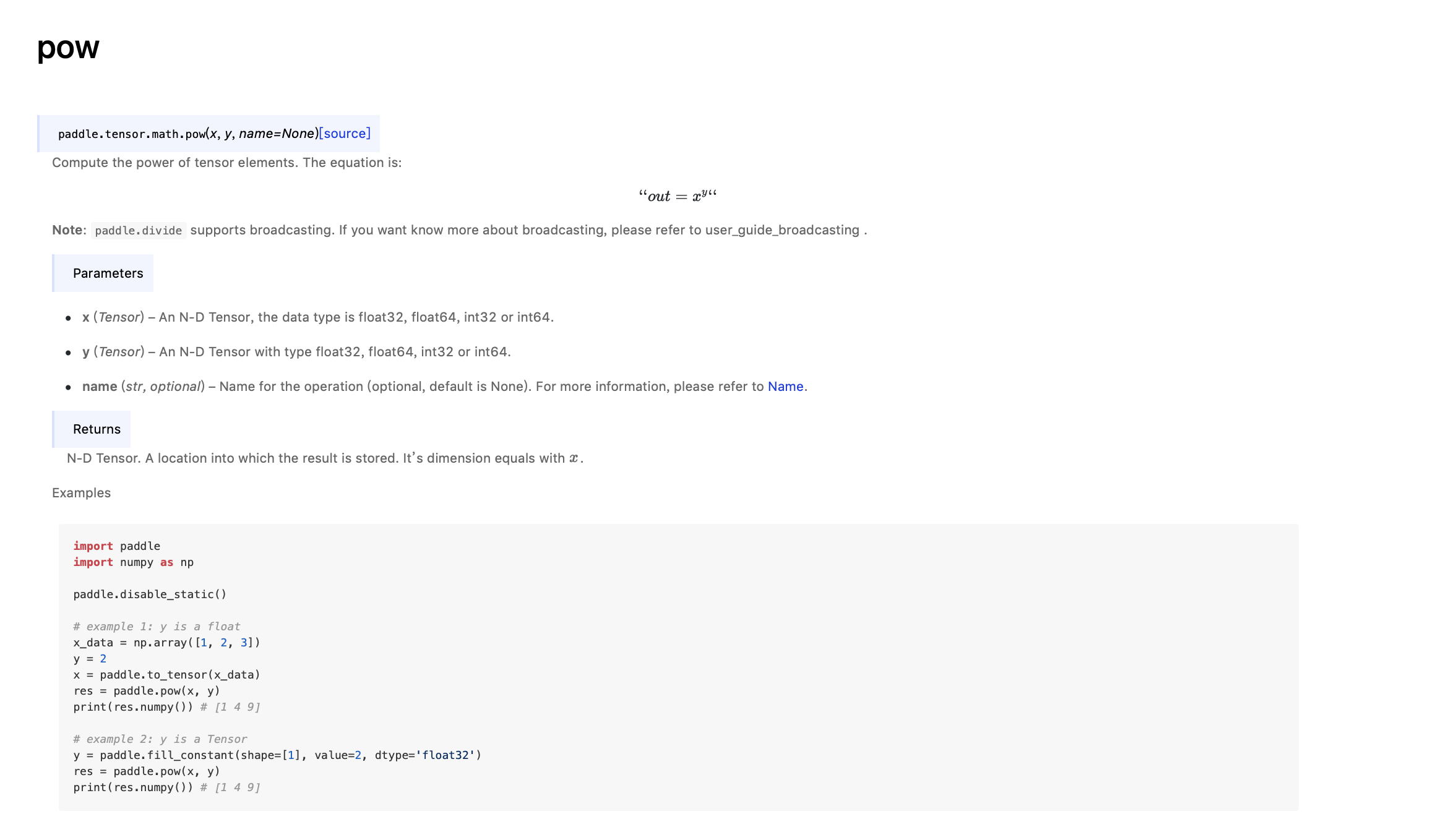

Documentation preview:

Test code:

```python
from __future__ import print_function

import unittest

import numpy as np
import paddle
import paddle.fluid as fluid
from paddle.static import Program, program_guard

DYNAMIC = 1
STATIC = 2


def _run_power(mode, x, y):
    """Run paddle.pow(x, y) in the given mode and return the result as a numpy array.

    x is a numpy array; y is either a Python scalar (int/float) or a numpy array.
    """
    # dynamic mode
    if mode == DYNAMIC:
        paddle.disable_static()
        x_ = paddle.to_tensor(x)
        if isinstance(y, (int, float)):
            # y is a scalar
            y_ = y
        else:
            # y is a tensor
            y_ = paddle.to_tensor(y)
        res = paddle.pow(x_, y_)
        return res.numpy()

    # static mode
    elif mode == STATIC:
        paddle.enable_static()
        with program_guard(Program(), Program()):
            x_ = paddle.static.data(name="x", shape=x.shape, dtype=x.dtype)
            feed = {'x': x}
            if isinstance(y, (int, float)):
                # y is a scalar
                y_ = y
            else:
                # y is a tensor: declare it as a second input and feed it
                y_ = paddle.static.data(name="y", shape=y.shape, dtype=y.dtype)
                feed['y'] = y
            res = paddle.pow(x_, y_)
            exe = fluid.Executor(fluid.CPUPlace())
            outs = exe.run(feed=feed, fetch_list=[res])
            return outs[0]


class TestPowerAPI(unittest.TestCase):
    """TestPowerAPI."""

    def _check(self, x, y):
        """Compare paddle.pow with np.power in both dynamic and static mode."""
        expected = np.power(x, y)
        self.assertTrue(np.allclose(_run_power(DYNAMIC, x, y), expected))
        self.assertTrue(np.allclose(_run_power(STATIC, x, y), expected))

    def test_power(self):
        """test_power."""
        np.random.seed(7)

        # test 1-d float tensor ** float scalar
        dims = (np.random.randint(200, 300), )
        x = (np.random.rand(*dims) * 10).astype(np.float64)
        y = np.random.rand() * 10
        self._check(x, y)

        # test 1-d float tensor ** int scalar
        dims = (np.random.randint(200, 300), )
        x = (np.random.rand(*dims) * 10).astype(np.float64)
        y = int(np.random.rand() * 10)
        self._check(x, y)

        # test 1-d int tensor ** int scalar
        x = (np.random.rand(*dims) * 10).astype(np.int64)
        y = int(np.random.rand() * 10)
        self._check(x, y)

        # test 1-d float64 tensor ** 1-d float64 tensor
        dims = (np.random.randint(200, 300), )
        x = (np.random.rand(*dims) * 10).astype(np.float64)
        y = (np.random.rand(*dims) * 10).astype(np.float64)
        self._check(x, y)

        # test 1-d float64 tensor ** 1-d int64 tensor
        dims = (np.random.randint(200, 300), )
        x = (np.random.rand(*dims) * 10).astype(np.float64)
        y = (np.random.rand(*dims) * 10).astype(np.int64)
        self._check(x, y)

        # test 1-d int64 tensor ** 1-d float64 tensor
        dims = (np.random.randint(200, 300), )
        x = (np.random.rand(*dims) * 10).astype(np.int64)
        y = (np.random.rand(*dims) * 10).astype(np.float64)
        self._check(x, y)

        # test 1-d int64 tensor ** 1-d int64 tensor
        dims = (np.random.randint(200, 300), )
        x = (np.random.rand(*dims) * 10).astype(np.int64)
        y = (np.random.rand(*dims) * 10).astype(np.int64)
        self._check(x, y)

        # test 1-d int32 tensor ** 1-d int32 tensor
        dims = (np.random.randint(200, 300), )
        x = (np.random.rand(*dims) * 10).astype(np.int32)
        y = (np.random.rand(*dims) * 10).astype(np.int32)
        self._check(x, y)

        # test 1-d float32 tensor ** 1-d float32 tensor
        dims = (np.random.randint(200, 300), )
        x = (np.random.rand(*dims) * 10).astype(np.float32)
        y = (np.random.rand(*dims) * 10).astype(np.float32)
        self._check(x, y)

        # test 1-d float64 tensor ** 1-d float32 tensor
        dims = (np.random.randint(200, 300), )
        x = (np.random.rand(*dims) * 10).astype(np.float64)
        y = (np.random.rand(*dims) * 10).astype(np.float32)
        self._check(x, y)

        # test 1-d float64 tensor ** 1-d int32 tensor
        dims = (np.random.randint(200, 300), )
        x = (np.random.rand(*dims) * 10).astype(np.float64)
        y = (np.random.rand(*dims) * 10).astype(np.int32)
        self._check(x, y)

        # test 1-d float32 tensor ** 1-d int64 tensor
        dims = (np.random.randint(200, 300), )
        x = (np.random.rand(*dims) * 10).astype(np.float32)
        y = (np.random.rand(*dims) * 10).astype(np.int64)
        self._check(x, y)

        # test broadcast: shape (a, b, c) ** shape (c,)
        dims = (np.random.randint(1, 10), np.random.randint(5, 10),
                np.random.randint(5, 10))
        x = (np.random.rand(*dims) * 10).astype(np.float64)
        y = (np.random.rand(dims[-1]) * 10).astype(np.float64)
        self._check(x, y)


class TestPowerError(unittest.TestCase):
    """TestPowerError."""

    def test_errors(self):
        """test_errors."""
        np.random.seed(7)

        # test dynamic and static graph: inputs must be broadcastable
        dims = (np.random.randint(1, 10), np.random.randint(5, 10),
                np.random.randint(5, 10))
        x = (np.random.rand(*dims) * 10).astype(np.float64)
        y = (np.random.rand(dims[-1] + 1) * 10).astype(np.float64)
        self.assertRaises(fluid.core.EnforceNotMet, _run_power, DYNAMIC, x, y)
        self.assertRaises(fluid.core.EnforceNotMet, _run_power, STATIC, x, y)

        # test invalid inputs: raw numpy arrays (here with an int8 exponent)
        # should raise TypeError
        dims = (np.random.randint(1, 10), np.random.randint(5, 10),
                np.random.randint(5, 10))
        x = (np.random.rand(*dims) * 10).astype(np.float64)
        y = (np.random.rand(dims[-1] + 1) * 10).astype(np.int8)
        self.assertRaises(TypeError, paddle.pow, x, y)


if __name__ == '__main__':
    unittest.main()
```
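The tensor ** tensor cases in `TestPowerAPI` differ only in the (x dtype, y dtype) pair, and `np.allclose` checks values but not the result dtype, which is what the `float64 <- pow(...)` fixes are about. A table-driven sketch (not part of this PR) that runs those pairs and also compares dtypes could look like this. `CASES`, `check_power_cases`, and `run_fn` are hypothetical names, and using NumPy's result dtype as the reference assumes Paddle promotes these pairs the same way NumPy does, which this sketch does not establish.

```python
import numpy as np

# Hypothetical table of (x dtype, y dtype) pairs mirroring the tensor ** tensor cases.
CASES = [
    ("float64", "float64"),
    ("float64", "int64"),
    ("int64", "float64"),
    ("int64", "int64"),
    ("int32", "int32"),
    ("float32", "float32"),
    ("float64", "float32"),
    ("float64", "int32"),
    ("float32", "int64"),
]


def check_power_cases(run_fn, seed=7):
    """Call run_fn(x, y) for every pair in CASES and return the pairs that fail.

    A pair fails if its values differ from np.power(x, y), or if its dtype differs
    from the dtype NumPy produces (an assumption about Paddle's promotion rules).
    """
    rng = np.random.RandomState(seed)
    failures = []
    for x_dtype, y_dtype in CASES:
        n = rng.randint(200, 300)
        x = (rng.rand(n) * 10).astype(x_dtype)
        y = (rng.rand(n) * 10).astype(y_dtype)
        res = np.asarray(run_fn(x, y))
        expected = np.power(x, y)
        if res.dtype != expected.dtype or not np.allclose(res, expected):
            failures.append((x_dtype, y_dtype))
    return failures
```

Inside the test file it could be used as `self.assertEqual(check_power_cases(lambda x, y: _run_power(DYNAMIC, x, y)), [])`, and likewise with `STATIC`. Returning the failing pairs instead of asserting on the first one reports every broken dtype combination in a single run.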

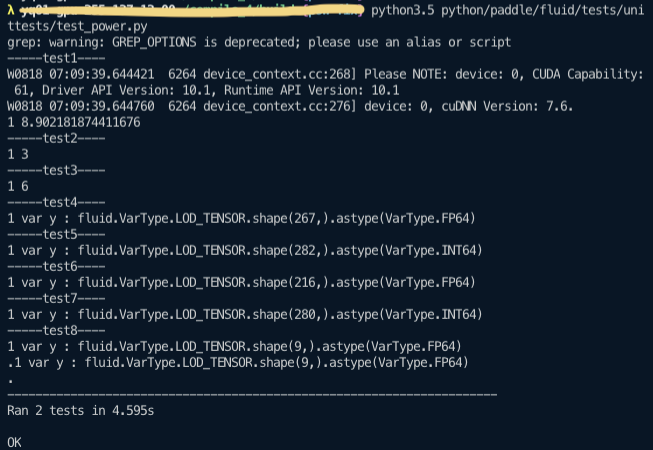

Output: