Created by: cryoco

PR types

Function optimization

PR changes

Others

Describe

TRT support of PaddleSlim quant models

Remove restraints of quantizing extra ops for TRT inference

When using Paddle-TRT int8 to perform inference with models trained by PaddleSlim quant-aware training (QAT), the training phase is subject to a restriction: if the model contains ops such as elementwise_add, pool2d, or leaky_relu, they must be quantized, or TRT will throw an error during inference. This limits the flexibility of int8 quantization. We added support so that users training with PaddleSlim QAT don't have to add extra quant ops in order to run inference with TensorRT, at little cost in performance.

quant_conv2d_dequant_fuse_pass refactor

quant_conv2d_dequant_fuse_pass extracts the input and output scales from quant/dequant ops and sets them as attributes of the quantized op. The previous quant_conv2d_dequant_fuse_pass had the following problems:

-

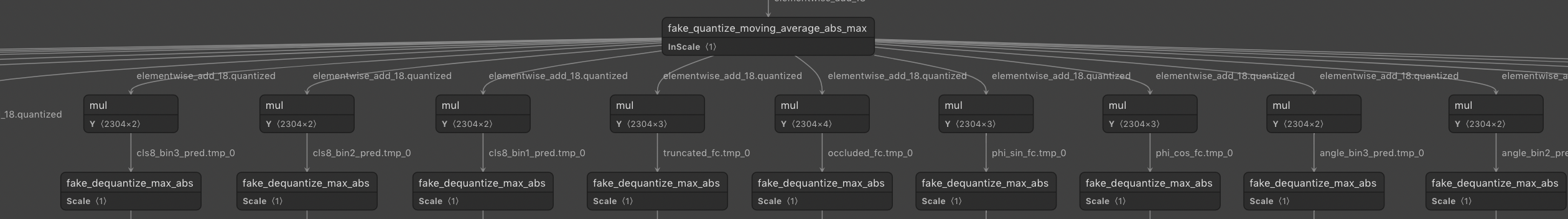

Using a matrix-like structure to store IR nodes requires a threshold of the max number of quantized nodes preceded by the same quant node, which leads to serious problems when model structure is like:

-

Converting weights from int8 range to fp32 range happens in tensorrt_subgraph_pass, which means if some quantized op is outside trt subgraph, it's weight will not be converted. This might cause wrong results when trt subgraph can't cover all quantized nodes.
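
To make the second problem concrete, here is a minimal sketch of the weight range conversion in plain Python. It assumes symmetric quantization with `max_range = 2^(bit_length - 1) - 1` (127 for 8 bits) and a single per-tensor `weight_scale`; the helper names and the numbers are hypothetical and are not taken from the Paddle source.

```python
def dequantize_weights(int8_range_weights, weight_scale, bit_length=8):
    """Map weights stored in the int8 range back to the fp32 range.

    Assumption: symmetric quantization, so the conversion is
    w_fp32 = w_int8_range * weight_scale / max_range.
    """
    max_range = (1 << (bit_length - 1)) - 1  # 127 for 8-bit weights
    return [w * weight_scale / max_range for w in int8_range_weights]


def dot(xs, ws):
    """Stand-in for a quantized op such as mul/fc on a single row."""
    return sum(x * w for x, w in zip(xs, ws))


# Hypothetical values for illustration only.
weights_int8_range = [127.0, -64.0, 32.0]
weight_scale = 0.5
inputs = [1.0, 2.0, 4.0]

# Op inside the subgraph: weights are converted before use.
converted = dequantize_weights(weights_int8_range, weight_scale)
correct = dot(inputs, converted)

# Op left outside the subgraph: weights stay in the int8 range.
wrong = dot(inputs, weights_int8_range)
```

An op whose weights are skipped by the conversion computes with values that are off by a factor of `max_range / weight_scale`, which is why the result is wrong rather than merely less precise.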

We refactored this pass by splitting the fusion into two phases, DeleteQuant fuse and Dequant fuse, so that the threshold on branch nodes is no longer needed. Moreover, the quantized weights of conv/mul/fc are now converted to the fp32 range in this pass instead of in tensorrt_subgraph_pass, so that the result is correct even when the trt subgraph can't cover all quantized nodes.
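
The two-phase idea can be sketched on a toy graph. This is only an illustration in plain Python, not the actual pass, which works on Paddle's IR graph: ops are modelled as dicts, and `make_op`, `build_graph`, `delete_quant_phase`, `dequant_fuse_phase`, and all attribute names are hypothetical. The point it shows is that each phase visits consumers or producers one by one, so a quant node may feed any number of quantized ops without a preset limit.

```python
def make_op(op_type, inputs, outputs, attrs=None, weights=None):
    """Build a toy op record (hypothetical structure, not Paddle's IR)."""
    return {"type": op_type, "inputs": list(inputs), "outputs": list(outputs),
            "attrs": dict(attrs or {}), "weights": list(weights or [])}


def build_graph(num_branches):
    """One quant node feeding `num_branches` conv2d ops, each with a dequant."""
    ops = [make_op("quant", ["x"], ["x_q"], {"scale": 0.1})]
    for i in range(num_branches):
        ops.append(make_op("conv2d", ["x_q"], [f"y{i}_q"],
                           weights=[127.0, -127.0]))
        ops.append(make_op("dequant", [f"y{i}_q"], [f"y{i}"],
                           {"weight_scale": 0.5}))
    return ops


def delete_quant_phase(ops):
    """Phase 1: remove quant ops and record their scale on every consumer."""
    for q in [op for op in ops if op["type"] == "quant"]:
        src, dst = q["inputs"][0], q["outputs"][0]
        for op in ops:
            if op is not q and dst in op["inputs"]:
                op["inputs"] = [src if v == dst else v for v in op["inputs"]]
                op["attrs"]["input_scale"] = q["attrs"]["scale"]
    return [op for op in ops if op["type"] != "quant"]


def dequant_fuse_phase(ops, bit_length=8):
    """Phase 2: remove dequant ops, set the weight scale on the producer,
    and convert its weights from the int8 range to the fp32 range."""
    max_range = (1 << (bit_length - 1)) - 1  # assumed symmetric range
    for d in [op for op in ops if op["type"] == "dequant"]:
        src, dst = d["inputs"][0], d["outputs"][0]
        scale = d["attrs"]["weight_scale"]
        for op in ops:
            if op is not d and src in op["outputs"]:
                op["attrs"]["weight_scale"] = scale
                op["weights"] = [w * scale / max_range for w in op["weights"]]
                op["outputs"] = [dst if v == src else v for v in op["outputs"]]
    return [op for op in ops if op["type"] != "dequant"]


graph = build_graph(num_branches=5)
fused = dequant_fuse_phase(delete_quant_phase(graph))
```

After both phases, only the `conv2d` ops remain, each reading `x` directly and carrying `input_scale`, `weight_scale`, and fp32-range weights, regardless of whether it later ends up inside a trt subgraph.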